Workflow using PICRUSt2 for Data_Karoline_16S_2025 (v2)

-

Environment Setup: It sets up a Conda environment named picrust2, using the conda create command and then activates this environment using conda activate picrust2.

#https://github.com/picrust/picrust2/wiki/PICRUSt2-Tutorial-(v2.2.0-beta)#minimum-requirements-to-run-full-tutorial mamba create -n picrust2 -c bioconda -c conda-forge picrust2 #2.5.3 #=2.2.0_b mamba activate /home/jhuang/miniconda3/envs/picrust2

Under docker-env (qiime2-amplicon-2023.9)

-

Export QIIME2 feature table and representative sequences

#docker pull quay.io/qiime2/core:2023.9 #docker run -it --rm \ #-v /mnt/md1/DATA/Data_Karoline_16S_2025:/data \ #-v /home/jhuang/REFs:/home/jhuang/REFs \ #quay.io/qiime2/core:2023.9 bash #cd /data # === SETTINGS === FEATURE_TABLE_QZA="dada2_tests2/test_7_f240_r240/table.qza" REP_SEQS_QZA="dada2_tests2/test_7_f240_r240/rep-seqs.qza" # === STEP 1: EXPORT QIIME2 ARTIFACTS === mkdir -p qiime2_export qiime tools export --input-path $FEATURE_TABLE_QZA --output-path qiime2_export qiime tools export --input-path $REP_SEQS_QZA --output-path qiime2_export -

Convert BIOM to TSV for Picrust2 input

biom convert \ -i qiime2_export/feature-table.biom \ -o qiime2_export/feature-table.tsv \ --to-tsv

Under env (picrust2): mamba activate /home/jhuang/miniconda3/envs/picrust2

-

Run PICRUSt2 pipeline

tail -n +2 qiime2_export/feature-table.tsv > qiime2_export/feature-table-fixed.tsv picrust2_pipeline.py \ -s qiime2_export/dna-sequences.fasta \ -i qiime2_export/feature-table-fixed.tsv \ -o picrust2_out \ -p 100 #This will: #* Place sequences in the reference tree (using EPA-NG), #* Predict gene family abundances (e.g., EC, KO, PFAM, TIGRFAM), #* Predict pathway abundances. #In current PICRUSt2 (with picrust2_pipeline.py), you do not run hsp.py separately. #Instead, picrust2_pipeline.py internally runs the HSP step for all functional categories automatically. It outputs all the prediction files (16S_predicted_and_nsti.tsv.gz, COG_predicted.tsv.gz, PFAM_predicted.tsv.gz, KO_predicted.tsv.gz, EC_predicted.tsv.gz, TIGRFAM_predicted.tsv.gz, PHENO_predicted.tsv.gz) in the output directory. mkdir picrust2_out_advanced; cd picrust2_out_advanced #If you still want to run hsp.py manually (advanced use / debugging), the commands correspond directly: hsp.py -i 16S -t ../picrust2_out/out.tre -o 16S_predicted_and_nsti.tsv.gz -p 100 -n hsp.py -i COG -t ../picrust2_out/out.tre -o COG_predicted.tsv.gz -p 100 hsp.py -i PFAM -t ../picrust2_out/out.tre -o PFAM_predicted.tsv.gz -p 100 hsp.py -i KO -t ../picrust2_out/out.tre -o KO_predicted.tsv.gz -p 100 hsp.py -i EC -t ../picrust2_out/out.tre -o EC_predicted.tsv.gz -p 100 hsp.py -i TIGRFAM -t ../picrust2_out/out.tre -o TIGRFAM_predicted.tsv.gz -p 100 hsp.py -i PHENO -t ../picrust2_out/out.tre -o PHENO_predicted.tsv.gz -p 100 -

Metagenome prediction per functional category (if needed separately)

#cd picrust2_out_advanced metagenome_pipeline.py -i ../qiime2_export/feature-table.biom -m 16S_predicted_and_nsti.tsv.gz -f COG_predicted.tsv.gz -o COG_metagenome_out --strat_out metagenome_pipeline.py -i ../qiime2_export/feature-table.biom -m 16S_predicted_and_nsti.tsv.gz -f EC_predicted.tsv.gz -o EC_metagenome_out --strat_out metagenome_pipeline.py -i ../qiime2_export/feature-table.biom -m 16S_predicted_and_nsti.tsv.gz -f KO_predicted.tsv.gz -o KO_metagenome_out --strat_out metagenome_pipeline.py -i ../qiime2_export/feature-table.biom -m 16S_predicted_and_nsti.tsv.gz -f PFAM_predicted.tsv.gz -o PFAM_metagenome_out --strat_out metagenome_pipeline.py -i ../qiime2_export/feature-table.biom -m 16S_predicted_and_nsti.tsv.gz -f TIGRFAM_predicted.tsv.gz -o TIGRFAM_metagenome_out --strat_out # Add descriptions in gene family tables add_descriptions.py -i COG_metagenome_out/pred_metagenome_unstrat.tsv.gz -m COG -o COG_metagenome_out/pred_metagenome_unstrat_descrip.tsv.gz add_descriptions.py -i EC_metagenome_out/pred_metagenome_unstrat.tsv.gz -m EC -o EC_metagenome_out/pred_metagenome_unstrat_descrip.tsv.gz add_descriptions.py -i KO_metagenome_out/pred_metagenome_unstrat.tsv.gz -m KO -o KO_metagenome_out/pred_metagenome_unstrat_descrip.tsv.gz # EC and METACYC is a pair, EC for gene_annotation and METACYC for pathway_annotation add_descriptions.py -i PFAM_metagenome_out/pred_metagenome_unstrat.tsv.gz -m PFAM -o PFAM_metagenome_out/pred_metagenome_unstrat_descrip.tsv.gz add_descriptions.py -i TIGRFAM_metagenome_out/pred_metagenome_unstrat.tsv.gz -m TIGRFAM -o TIGRFAM_metagenome_out/pred_metagenome_unstrat_descrip.tsv.gz -

Pathway inference (MetaCyc pathways from EC numbers)

#cd picrust2_out_advanced pathway_pipeline.py -i EC_metagenome_out/pred_metagenome_contrib.tsv.gz -o EC_pathways_out -p 100 pathway_pipeline.py -i EC_metagenome_out/pred_metagenome_unstrat.tsv.gz -o EC_pathways_out_per_seq -p 100 --per_sequence_contrib --per_sequence_abun EC_metagenome_out/seqtab_norm.tsv.gz --per_sequence_function EC_predicted.tsv.gz #ERROR due to missing .../pathway_mapfiles/KEGG_pathways_to_KO.tsv pathway_pipeline.py -i COG_metagenome_out/pred_metagenome_contrib.tsv.gz -o KEGG_pathways_out -p 100 --no_regroup --map /home/jhuang/anaconda3/envs/picrust2/lib/python3.6/site-packages/picrust2/default_files/pathway_mapfiles/KEGG_pathways_to_KO.tsv pathway_pipeline.py -i KO_metagenome_out/pred_metagenome_strat.tsv.gz -o KEGG_pathways_out -p 100 --no_regroup --map /home/jhuang/anaconda3/envs/picrust2/lib/python3.6/site-packages/picrust2/default_files/pathway_mapfiles/KEGG_pathways_to_KO.tsv add_descriptions.py -i EC_pathways_out/path_abun_unstrat.tsv.gz -m METACYC -o EC_pathways_out/path_abun_unstrat_descrip.tsv.gz gunzip EC_pathways_out/path_abun_unstrat_descrip.tsv.gz #Error - no rows remain after regrouping input table. The default pathway and regroup mapfiles are meant for EC numbers. Note that KEGG pathways are not supported since KEGG is a closed-source database, but you can input custom pathway mapfiles if you have access. If you are using a custom function database did you mean to set the --no-regroup flag and/or change the default pathways mapfile used? #If ERROR --> USE the METACYC for downstream analyses!!! #ERROR due to missing .../description_mapfiles/KEGG_pathways_info.tsv.gz #add_descriptions.py -i KO_pathways_out/path_abun_unstrat.tsv.gz -o KEGG_pathways_out/path_abun_unstrat_descrip.tsv.gz --custom_map_table /home/jhuang/anaconda3/envs/picrust2/lib/python3.6/site-packages/picrust2/default_files/description_mapfiles/KEGG_pathways_info.tsv.gz #NOTE: Target-analysis for the pathway "mixed acid fermentation" -

Visualization

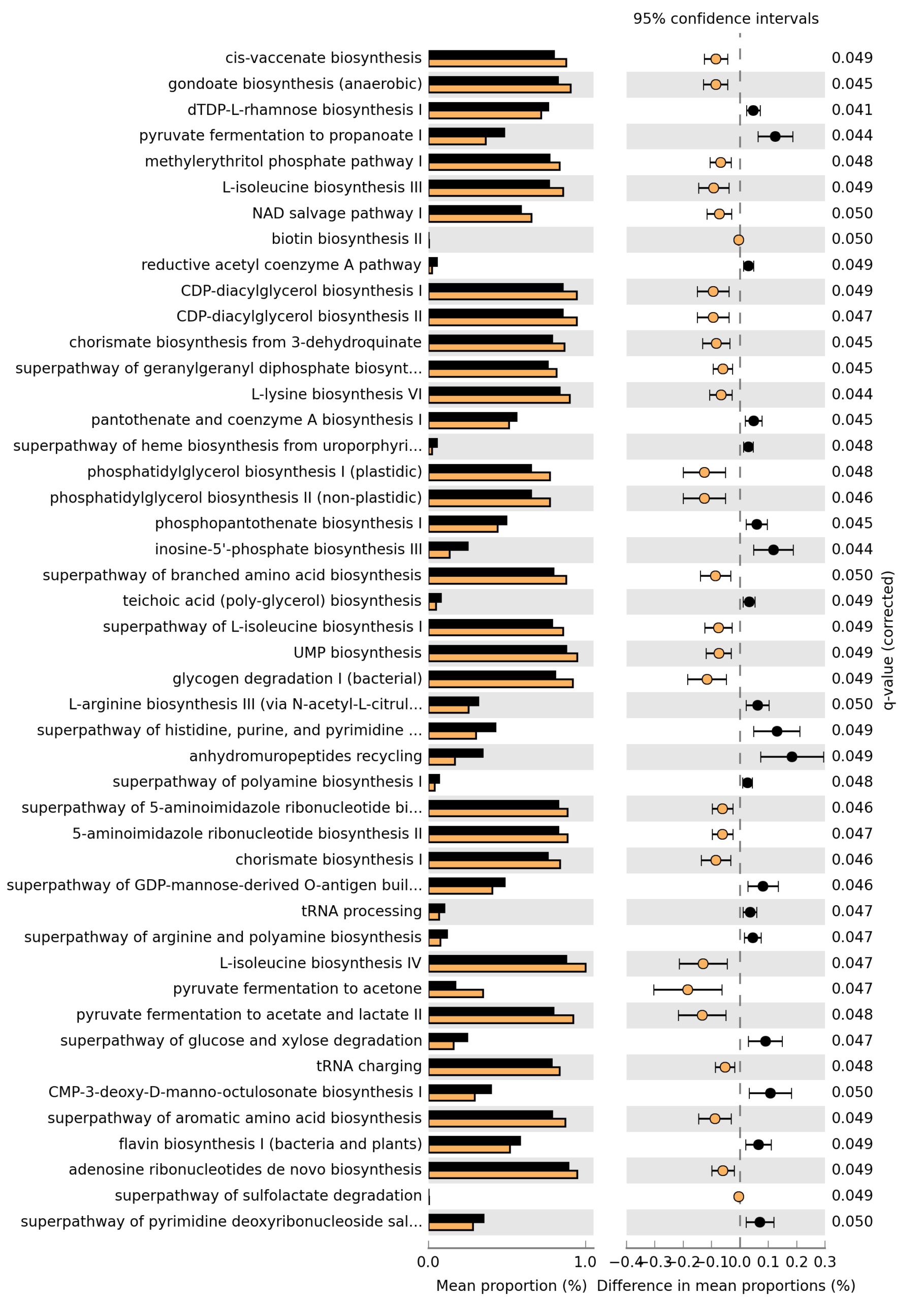

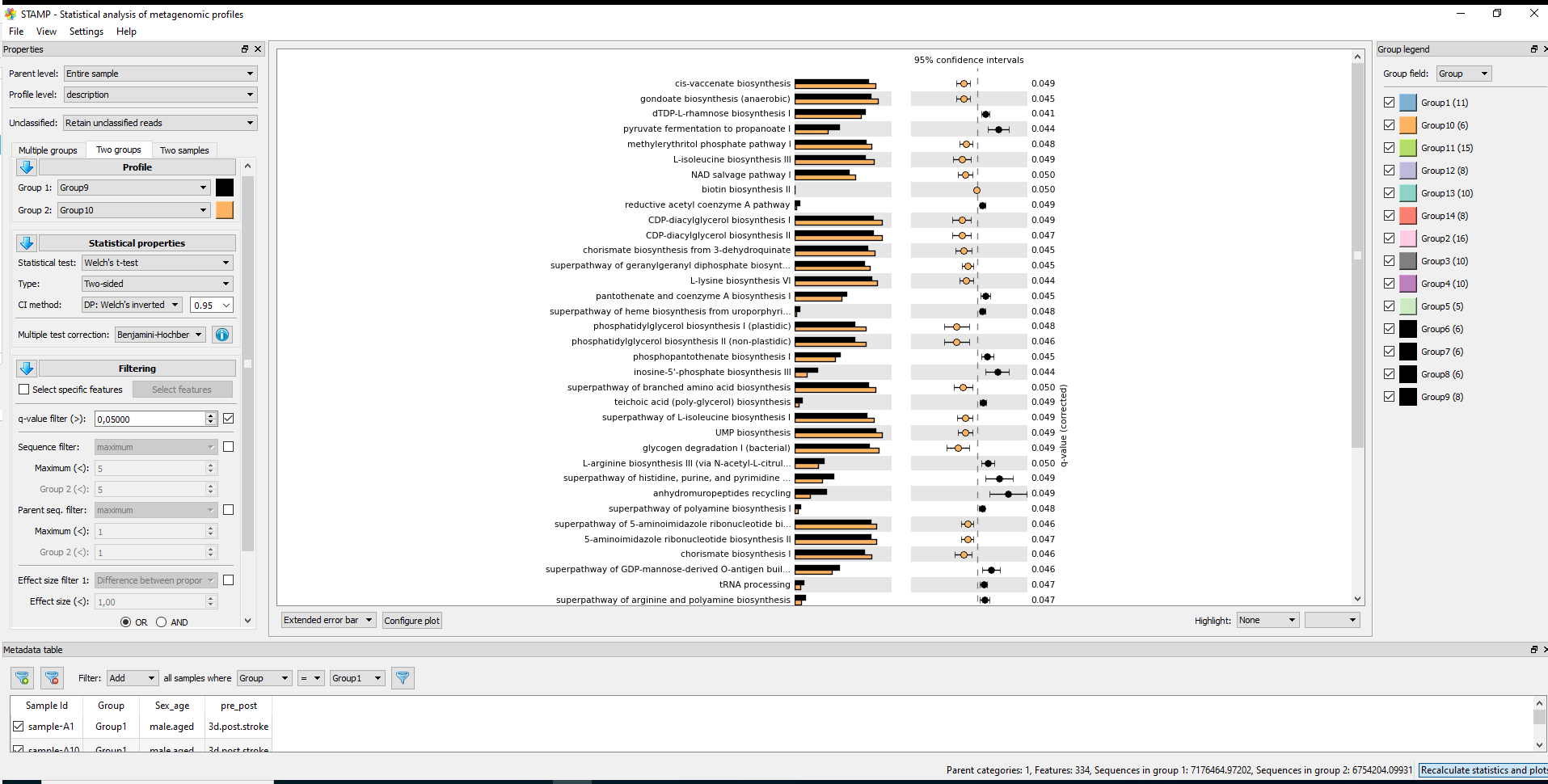

#7.1 STAMP #https://github.com/picrust/picrust2/wiki/STAMP-example #Note that STAMP can only be opened under Windows # It needs two files: path_abun_unstrat_descrip.tsv.gz as "Profile file" and metadata.tsv as "Group metadata file". cp ~/DATA/Data_Karoline_16S_2025/picrust2_out_advanced/EC_pathways_out/path_abun_unstrat_descrip.tsv ~/DATA/Access_to_Win10/ cut -d$'\t' -f1 qiime2_metadata.tsv > 1 cut -d$'\t' -f3 qiime2_metadata.tsv > 3 cut -d$'\t' -f5-6 qiime2_metadata.tsv > 5_6 paste -d$'\t' 1 3 > 1_3 paste -d$'\t' 1_3 5_6 > metadata.tsv #SampleID --> SampleID SampleID Group pre_post Sex_age sample-A1 Group1 3d.post.stroke male.aged sample-A2 Group1 3d.post.stroke male.aged sample-A3 Group1 3d.post.stroke male.aged cp ~/DATA/Data_Karoline_16S_2025/metadata.tsv ~/DATA/Access_to_Win10/ # MANULLY_EDITING: keeping the only needed records in metadata.tsv: Group 9 (J1–J4, J10, J11) and Group 10 (K1–K6). #7.2. ALDEx2 https://bioconductor.org/packages/release/bioc/html/ALDEx2.html

Under docker-env (qiime2-amplicon-2023.9)

-

(NOT_NEEDED) Convert pathway output to BIOM and re-import to QIIME2 gunzip picrust2_out/pathways_out/path_abun_unstrat.tsv.gz biom convert \ -i picrust2_out/pathways_out/path_abun_unstrat.tsv \ -o picrust2_out/path_abun_unstrat.biom \ –table-type=”Pathway table” \ –to-hdf5

qiime tools import \ --input-path picrust2_out/path_abun_unstrat.biom \ --type 'FeatureTable[Frequency]' \ --input-format BIOMV210Format \ --output-path path_abun.qza #qiime tools export --input-path path_abun.qza --output-path exported_path_abun #qiime tools peek path_abun.qza echo "✅ PICRUSt2 pipeline complete. Output in: picrust2_out" -

Short answer: unless you had a very clear, pre-specified directional hypothesis, you should use a two-sided test.

A bit more detail: * Two-sided t-test * Tests: “Are the means different?” (could be higher or lower). * Standard default in most biological and clinical studies and usually what reviewers expect. * More conservative than a one-sided test. * One-sided t-test * Tests: “Is Group A greater than Group B?” (or strictly less than). * You should only use it if before looking at the data you had a strong reason to expect a specific direction and you would ignore/consider uninterpretable a difference in the opposite direction. * Using one-sided just to gain significance is considered bad practice. For your pathway analysis (exploratory, many pathways, q-value correction), the safest and most defensible choice is to: * Use a two-sided t-test (equal variance or Welch’s, depending on variance assumptions). So I’d recommend rerunning STAMP with Type: Two-sided and reporting those results. #--> Using a two-sided Welch's t-test in STAMP, that is the unequal-variance version (does not assume equal variances and is more conservative than “t-test (equal variance)” referring to the classical unpaired Student’s t-test. -

Statistics in STAMP

* For multiple groups: * Statistical test: ANOVA, Kruskal-Wallis H-test * Post-hoc test: Games-Howell, Scheffe, Tukey-Kramer, Welch's (uncorrected) (by default 0.95) * Effect size: Eta-squared * Multiple test correction: Benjamini-Hochberg FDR, Bonferroni, No correction * For two groups * Statistical test: t-test (equal variance), Welch's t-test, White's non-parametric t-test * Type: One-sided, Two-sided * CI method: "DP: Welch's inverted" (by default 0.95) * Multiple test correction: Benjamini-Hochberg FDR, Bonferroni, No correction, Sidak, Storey FDR * For two samples * Statistical test: Bootstrap, Chi-square test, Chi-square test (w/Yates'), Difference between proportions, Fisher's exact test, G-test, G-test (w/Yates'), G-test (w/Yates') + Fisher's, Hypergeometric, Permutation * Type: One-sided, Two-sided * CI method: "DP: Asymptotic", "DP: Asymptotic-CC", "DP: Newcomber-Wilson", "DR: Haldane adjustment", "RP: Asymptotic" (by default 0.95) * Multiple test correction: Benjamini-Hochberg FDR, Bonferroni, No correction, Sidak, Storey FDR -

Since MetaCyc does not have a single pathway explicitly named “short-chain fatty acid biosynthesis”, I defined a small SCFA-related set (acetate-, propionate- and butyrate-producing pathways) and tested these between Group 9 and Group 10 (Welch’s t-test, with BH correction within this subset). These pathways can also be found in the file Welchs_t-test.xlsx attached to my email from 26.11.2025 (for Group9 (J1-4, J6-7, J10-11) vs Group10 (K1-6)).

Pathway ID Description Group 9 mean (%) Group 10 mean (%) p-value p-adj (BH, SCFA set)

P108-PWY pyruvate fermentation to propanoate I 0.5070 0.3817 0.001178 0.0071

PWY-5100 pyruvate fermentation to acetate and lactate II 0.8354 0.9687 0.007596 0.0228

CENTFERM-PWY pyruvate fermentation to butanoate 0.0766 0.0410 0.026608 0.0532

PWY-5677 succinate fermentation to butanoate 0.0065 0.0088 0.365051 0.5476

P163-PWY L-lysine fermentation to acetate and butanoate 0.0324 0.0271 0.484704 0.5816

PWY-5676 acetyl-CoA fermentation to butanoate II 0.1397 0.1441 0.927588 0.9276In this SCFA-focused set, the propionate (P108-PWY) and acetate (PWY-5100) pathways remain significantly different between Group 9 and Group 10 after adjustment, whereas the butyrate-related pathways do not show clear significant differences (CENTFERM-PWY is borderline).

from 14.01.2026 (for Group9 (J1-4, J10-11) vs Group10 (K1-6)), marked green in the Excel-files.

Pathway ID Description Group 9 mean (%) Group 10 mean (%) p-value p-adj (BH, 6-pathway set)

P108-PWY pyruvate fermentation to propanoate I 0.5142 0.3817 0.001354 0.008127

PWY-5100 pyruvate fermentation to acetate and lactate II 0.8401 0.9687 0.008763 0.026290

CENTFERM-PWY pyruvate fermentation to butanoate 0.0729 0.0410 0.069958 0.139916

PWY-5677 succinate fermentation to butanoate 0.0063 0.0088 0.367586 0.551379

P163-PWY L-lysine fermentation to acetate and butanoate 0.0308 0.0271 0.693841 0.832609

PWY-5676 acetyl-CoA fermentation to butanoate II 0.1421 0.1441 0.971290 0.971290-

Reporting

Please find attached the results of the pathway analysis. The Excel file contains the full statistics for all pathways; those with adjusted p-values (Benjamini–Hochberg) ≤ 0.05 are highlighted in yellow and are the ones illustrated in the figure. The analysis was performed using Welch’s t-test (two-sided) with Benjamini–Hochberg correction for multiple testing.

browse the 141 pipelines that are currently available as part of nf-core on 2026-01-14

Count check

- Input pipeline count: 141

- Output pipeline count (below): 141 ✅

- Categories: 16

- Sum of category counts: 141 ✅

Categorized pipelines (with counts)

1) Bulk RNA-seq & transcriptomics (19)

alleleexpression, cageseq, circrna, denovotranscript, differentialabundance, drop, dualrnaseq, evexplorer, isoseq, lncpipe, nanostring, nascent, rnafusion, rnaseq, rnasplice, rnavar, riboseq, slamseq, stableexpression

2) Small RNA-seq (1)

smrnaseq

3) Single-cell transcriptomics (6)

marsseq, scdownstream, scflow, scnanoseq, scrnaseq, smartseq2

4) Spatial omics (6)

molkart, panoramaseq, pixelator, sopa, spatialvi, spatialxe

5) Chromatin & regulation (10)

atacseq, callingcards, chipseq, clipseq, cutandrun, hic, hicar, mnaseseq, sammyseq, tfactivity

6) DNA methylation (3)

methylarray, methylong, methylseq

7) Human genomics, variants & disease (22)

abotyper, circdna, deepvariant, eager, exoseq, gwas, longraredisease, mitodetect, oncoanalyser, pacvar, phaseimpute, radseq, raredisease, rarevariantburden, rnadnavar, sarek, ssds, tumourevo, variantbenchmarking, variantcatalogue, variantprioritization, createpanelrefs

8) Viruses & pathogen surveillance (7)

pathogensurveillance, phageannotator, tbanalyzer, viralmetagenome, viralintegration, viralrecon, vipr

9) Metagenomics & microbiome (10)

ampliseq, coproid, createtaxdb, detaxizer, funcscan, mag, magmap, metapep, metatdenovo, taxprofiler

10) Genome assembly, annotation & comparative genomics (14)

bacass, bactmap, denovohybrid, genomeannotator, genomeassembler, genomeqc, genomeskim, hgtseq, multiplesequencealign, neutronstar, pangenome, pairgenomealign, phyloplace, reportho

11) Immunology & antigen presentation (4)

airrflow, epitopeprediction, hlatyping, mhcquant

12) Proteomics, metabolomics & protein informatics (11)

ddamsproteomics, diaproteomics, kmermaid, metaboigniter, proteinannotator, proteinfamilies, proteinfold, proteogenomicsdb, proteomicslfq, quantms, ribomsqc

13) Imaging & other experimental modalities (7)

cellpainting, imcyto, liverctanalysis, lsmquant, mcmicro, rangeland, troughgraph

14) Data acquisition, QC & ops / utilities (12)

bamtofastq, datasync, demo, demultiplex, fastqrepair, fastquorum, fetchngs, nanoseq, readsimulator, references, seqinspector, seqsubmit

15) Genome editing & screens (2)

crisprseq, crisprvar

16) Other methods / modelling / non-bioinformatics (7)

deepmodeloptim, deepmutscan, diseasemodulediscovery, drugresponseeval, meerpipe, omicsgenetraitassociation, spinningjenny

| Category | Name | Short description | 中文描述 | Released | Stars | Last release |

|---|---|---|---|---|---|---|

| Bulk RNA-seq & transcriptomics | alleleexpression | Allele-specific expression (ASE) analysis using STAR-WASP, UMI-tools, phaser | 等位基因特异性表达(ASE)分析:STAR-WASP 比对,UMI-tools 去重,phaser 单倍型分相与 ASE 检测 | 2 | – | |

| Bulk RNA-seq & transcriptomics | cageseq | CAGE-sequencing analysis pipeline with trimming, alignment and counting of CAGE tags. | CAGE-seq 分析:剪切、比对并统计 CAGE 标签(转录起始相关)。 | 11 | 1.0.2 | |

| Bulk RNA-seq & transcriptomics | circrna | circRNA quantification, differential expression analysis and miRNA target prediction of RNA-Seq data | 环状 RNA(circRNA)定量、差异表达分析及 miRNA 靶标预测。 | 59 | – | |

| Bulk RNA-seq & transcriptomics | denovotranscript | de novo transcriptome assembly of paired-end short reads from bulk RNA-seq | 基于 bulk RNA-seq 双端短读长的从头转录组组装。 | 19 | 1.2.1 | |

| Bulk RNA-seq & transcriptomics | differentialabundance | Differential abundance analysis for feature/observation matrices (e.g., RNA-seq) | 对特征/观测矩阵做差异丰度分析(可用于表达矩阵等)。 | 87 | 1.5.0 | |

| Bulk RNA-seq & transcriptomics | drop | Pipeline to find aberrant events in RNA-Seq data, useful for diagnosis of rare disorders | RNA-seq 异常事件检测流程(用于罕见病诊断等)。 | 7 | – | |

| Bulk RNA-seq & transcriptomics | dualrnaseq | Analysis of Dual RNA-seq data (host-pathogen interactions) | 宿主-病原双 RNA-seq 分析流程,用于研究宿主-病原相互作用。 | 25 | 1.0.0 | |

| Bulk RNA-seq & transcriptomics | evexplorer | Analyze RNA data from extracellular vesicles; QC, region detection, normalization, DRE | 胞外囊泡(EV)RNA 数据分析:质控、表达区域检测、归一化与差异 RNA 表达(DRE)。 | 1 | – | |

| Bulk RNA-seq & transcriptomics | isoseq | Genome annotation with PacBio Iso-Seq from raw subreads to FLNC and bed annotation | PacBio Iso-Seq 基因组注释:从 subreads 生成 FLNC 并产出 bed 注释。 | 50 | 2.0.0 | |

| Bulk RNA-seq & transcriptomics | lncpipe | Analysis of long non-coding RNAs from RNA-seq datasets (under development) | lncRNA(长链非编码 RNA)分析流程(开发中)。 | 34 | – | |

| Bulk RNA-seq & transcriptomics | nanostring | Analysis pipeline for Nanostring nCounter expression data. | Nanostring nCounter 表达数据分析流程。 | 16 | 1.3.1 | |

| Bulk RNA-seq & transcriptomics | nascent | Nascent Transcription Processing Pipeline | 新生转录(nascent RNA)处理与分析流程。 | 22 | 2.3.0 | |

| Bulk RNA-seq & transcriptomics | rnafusion | RNA-seq analysis pipeline for detection of gene-fusions | RNA-seq 融合基因检测流程。 | 170 | 4.0.0 | |

| Bulk RNA-seq & transcriptomics | rnaseq | RNA sequencing pipeline (STAR/RSEM/HISAT2/Salmon) with QC and counts | 常规 bulk RNA-seq 分析:比对/定量/计数与全面质控(多比对/定量器可选)。 | 1179 | 3.22.2 | |

| Bulk RNA-seq & transcriptomics | rnasplice | RNA-seq alternative splicing analysis | RNA-seq 可变剪接分析流程。 | 63 | 1.0.4 | |

| Bulk RNA-seq & transcriptomics | rnavar | gatk4 RNA variant calling pipeline | 基于 GATK4 的 RNA 变异检测(RNA variant calling)。 | 58 | 1.2.2 | |

| Bulk RNA-seq & transcriptomics | riboseq | Analysis of ribosome profiling (Ribo-seq) data | Ribo-seq(核糖体测序/核糖体 footprinting)分析流程。 | 21 | 1.2.0 | |

| Bulk RNA-seq & transcriptomics | slamseq | SLAMSeq processing and analysis pipeline | SLAM-seq(新生 RNA 标记)处理与分析流程。 | 10 | 1.0.0 | |

| Bulk RNA-seq & transcriptomics | stableexpression | Identify stable genes across datasets; useful for RT-qPCR reference genes | 寻找最稳定基因(适合作为 RT-qPCR 参考内参基因)。 | 5 | – | |

| Small RNA-seq | smrnaseq | A small-RNA sequencing analysis pipeline | 小 RNA 测序(如 miRNA 等)分析流程。 | 98 | 2.4.1 | |

| Single-cell transcriptomics | marsseq | MARS-seq v2 pre-processing pipeline with velocity | MARS-seq v2 预处理流程,支持 RNA velocity。 | 8 | 1.0.3 | |

| Single-cell transcriptomics | scdownstream | Single cell transcriptomics pipeline for QC, integration, presentation | 单细胞转录组下游:质控、整合与结果展示。 | 81 | – | |

| Single-cell transcriptomics | scflow | Please consider using/contributing to nf-core/scdownstream | 单细胞流程(建议转向/贡献 scdownstream)。 | 25 | – | |

| Single-cell transcriptomics | scnanoseq | Single-cell/nuclei pipeline for Oxford Nanopore + 10x Genomics | 单细胞/细胞核测序流程:结合 ONT 与 10x 数据。 | 52 | 1.2.1 | |

| Single-cell transcriptomics | scrnaseq | Single-cell RNA-Seq pipeline (10x/DropSeq/SmartSeq etc.) | 单细胞 RNA-seq 主流程:支持 10x、DropSeq、SmartSeq 等。 | 310 | 4.1.0 | |

| Single-cell transcriptomics | smartseq2 | Process single cell RNA-seq generated with SmartSeq2 | SmartSeq2 单细胞 RNA-seq 处理流程。 | 15 | – | |

| Spatial omics | molkart | Processing Molecular Cartography data (Resolve Bioscience combinatorial FISH) | Resolve Molecular Cartography(组合 FISH)数据处理流程。 | 14 | 1.2.0 | |

| Spatial omics | panoramaseq | Pipeline to process sequencing-based spatial transcriptomics data (in-situ arrays) | 测序型空间转录组(in-situ arrays)数据处理流程。 | 0 | – | |

| Spatial omics | pixelator | Pipeline to generate Molecular Pixelation data (Pixelgen) | Pixelgen 分子像素化(Molecular Pixelation)数据处理流程。 | 13 | 2.3.0 | |

| Spatial omics | sopa | Nextflow version of Sopa – spatial omics pipeline and analysis | Sopa 的 Nextflow 实现:空间组学流程与分析。 | 11 | – | |

| Spatial omics | spatialvi | Process spatial gene counts + spatial coordinates + image data (10x Visium) | 10x Visium 空间转录组处理:基因计数+空间坐标+图像数据。 | 70 | – | |

| Spatial omics | spatialxe | (no description shown) | 空间组学相关流程(原表未给出描述)。 | 24 | – | |

| Chromatin & regulation | atacseq | ATAC-seq peak-calling and QC analysis pipeline | ATAC-seq 峰识别与质控分析流程。 | 221 | 2.1.2 | |

| Chromatin & regulation | callingcards | A pipeline for processing calling cards data | Calling cards 实验数据处理流程。 | 6 | 1.0.0 | |

| Chromatin & regulation | chipseq | ChIP-seq peak-calling, QC and differential analysis | ChIP-seq 峰识别、质控与差异分析流程。 | 229 | 2.1.0 | |

| Chromatin & regulation | clipseq | CLIP-seq QC, mapping, UMI deduplication, peak-calling options | CLIP-seq 分析:质控、比对、UMI 去重与多种 peak calling。 | 24 | 1.0.0 | |

| Chromatin & regulation | cutandrun | CUT&RUN / CUT&TAG pipeline with QC, spike-ins, IgG controls, peak calling | CUT&RUN/CUT&TAG 分析:质控、spike-in、IgG 对照、峰识别与下游。 | 106 | 3.2.2 | |

| Chromatin & regulation | hic | Analysis of Chromosome Conformation Capture (Hi-C) data | Hi-C 染色体构象捕获数据分析流程。 | 105 | 2.1.0 | |

| Chromatin & regulation | hicar | HiCAR multi-omic co-assay pipeline | HiCAR 多组学共测(转录+染色质可及性+接触)分析流程。 | 12 | 1.0.0 | |

| Chromatin & regulation | mnaseseq | MNase-seq analysis pipeline using BWA and DANPOS2 | MNase-seq 分析流程(BWA + DANPOS2)。 | 12 | 1.0.0 | |

| Chromatin & regulation | sammyseq | SAMMY-seq pipeline to analyze chromatin state | SAMMY-seq 染色质状态分析流程。 | 5 | – | |

| Chromatin & regulation | tfactivity | Identify differentially active TFs using expression + open chromatin | 整合表达与开放染色质数据,识别差异活跃转录因子(TF)。 | 12 | – | |

| DNA methylation | methylarray | Illumina methylation array processing; QC, confounders, DMP/DMR, cell comp optional | Illumina 甲基化芯片分析:预处理、质控、混杂因素检查、DMP/DMR;可选细胞组成估计与校正。 | 6 | – | |

| DNA methylation | methylong | Extract methylation calls from long reads (ONT/PacBio) | 从长读长(ONT/PacBio)提取甲基化识别结果。 | 19 | 2.0.0 | |

| DNA methylation | methylseq | Bisulfite-seq methylation pipeline (Bismark/bwa-meth + MethylDackel/rastair) | 亚硫酸氢盐测序甲基化分析流程(Bismark/bwa-meth 等)。 | 185 | 4.2.0 | |

| Human genomics, variants & disease | abotyper | Characterise human blood group and red cell antigens using ONT | 基于 ONT 的人类血型与红细胞抗原分型/鉴定流程。 | 1 | – | |

| Human genomics, variants & disease | circdna | Identify extrachromosomal circular DNA (ecDNA) from Circle-seq/WGS/ATAC-seq | 从 Circle-seq/WGS/ATAC-seq 识别染色体外环状 DNA(ecDNA)。 | 31 | 1.1.0 | |

| Human genomics, variants & disease | createpanelrefs | Generate Panel of Normals / models / references from many samples | 从大量样本生成 PoN(Panel of Normals)/模型/参考资源。 | 11 | – | |

| Human genomics, variants & disease | deepvariant | Consider using/contributing to nf-core/sarek | DeepVariant 相关(建议使用/贡献至 sarek)。 | 40 | 1.0 | |

| Human genomics, variants & disease | eager | Ancient DNA analysis pipeline | 古 DNA(aDNA)分析流程(可重复、标准化)。 | 195 | 2.5.3 | |

| Human genomics, variants & disease | exoseq | Please consider using/contributing to nf-core/sarek | Exo-seq 相关(建议使用/贡献至 sarek)。 | 16 | – | |

| Human genomics, variants & disease | gwas | UNDER CONSTRUCTION: Genome Wide Association Studies | GWAS(全基因组关联分析)流程(建设中)。 | 27 | – | |

| Human genomics, variants & disease | longraredisease | Long-read sequencing pipeline for rare disease variant discovery | 长读长测序罕见病变异识别流程(神经发育障碍等)。 | 5 | v1.0.0-alpha | |

| Human genomics, variants & disease | mitodetect | A-Z analysis of mitochondrial NGS data | 线粒体 NGS 数据全流程分析。 | 7 | – | |

| Human genomics, variants & disease | oncoanalyser | Comprehensive cancer DNA/RNA analysis and reporting pipeline | 肿瘤 DNA/RNA 综合分析与报告生成流程。 | 97 | 2.3.0 | |

| Human genomics, variants & disease | pacvar | Long-read PacBio sequencing processing for WGS and PureTarget | PacBio 长读长 WGS/PureTarget 测序数据处理流程。 | 13 | 1.0.1 | |

| Human genomics, variants & disease | phaseimpute | Phase and impute genetic data | 遗传数据分相与基因型填补流程。 | 27 | 1.1.0 | |

| Human genomics, variants & disease | radseq | Variant-calling pipeline for RADseq | RADseq 变异检测流程。 | 7 | – | |

| Human genomics, variants & disease | raredisease | Call and score variants from WGS/WES of rare disease patients | 罕见病 WGS/WES 变异检测与打分流程。 | 112 | 2.6.0 | |

| Human genomics, variants & disease | rarevariantburden | Summary count based rare variant burden test (e.g., vs gnomAD) | 基于汇总计数的稀有变异负担检验(可与 gnomAD 等对照)。 | 0 | – | |

| Human genomics, variants & disease | rnadnavar | Integrated RNA+DNA somatic mutation detection | RNA+DNA 联合分析的体细胞突变检测流程。 | 14 | – | |

| Human genomics, variants & disease | sarek | Germline/somatic variant calling + annotation from WGS/targeted | WGS/靶向测序的生殖系/体细胞变异检测与注释(含预处理、calling、annotation)。 | 532 | 3.7.1 | |

| Human genomics, variants & disease | ssds | Single-stranded DNA Sequencing (SSDS) pipeline | SSDS(单链 DNA 测序)分析流程。 | 1 | – | |

| Human genomics, variants & disease | tumourevo | Model tumour clonal evolution from WGS (CN, subclones, signatures) | 基于 WGS 的肿瘤克隆进化建模(CN、亚克隆、突变签名等)。 | 20 | – | |

| Human genomics, variants & disease | variantbenchmarking | Evaluate/validate variant calling accuracy | 变异检测方法准确性评估与验证流程(benchmark)。 | 37 | 1.4.0 | |

| Human genomics, variants & disease | variantcatalogue | Generate population variant catalogues from WGS | 从 WGS 构建人群变异目录(变异列表及频率)。 | 13 | – | |

| Human genomics, variants & disease | variantprioritization | (no description shown) | 变异优先级筛选流程(原表未给出描述)。 | 12 | – | |

| Viruses & pathogen surveillance | pathogensurveillance | Surveillance of pathogens using population genomics and sequencing | 基于群体基因组与测序的病原体监测流程。 | 52 | 1.0.0 | |

| Viruses & pathogen surveillance | phageannotator | Identify, annotate, quantify phage sequences in (meta)genomes | 在(宏)基因组中识别、注释并定量噬菌体序列。 | 17 | – | |

| Viruses & pathogen surveillance | tbanalyzer | Pipeline for Mycobacterium tuberculosis complex analysis | 结核分枝杆菌复合群(MTBC)分析流程。 | 13 | – | |

| Viruses & pathogen surveillance | viralmetagenome | Untargeted viral genome reconstruction with iSNV detection from metagenomes | 宏基因组中无靶向病毒全基因组重建,并检测 iSNV。 | 28 | 1.0.1 | |

| Viruses & pathogen surveillance | viralintegration | Identify viral integration events using chimeric reads | 基于嵌合 reads 的病毒整合事件检测流程。 | 17 | 0.1.1 | |

| Viruses & pathogen surveillance | viralrecon | Viral assembly and intrahost/low-frequency variant calling | 病毒组装与宿主体内/低频变异检测流程。 | 151 | 3.0.0 | |

| Viruses & pathogen surveillance | vipr | Viral assembly and intrahost/low-frequency variant calling | 病毒组装与体内/低频变异检测流程(类似 viralrecon)。 | 14 | – | |

| Metagenomics & microbiome | ampliseq | Amplicon sequencing workflow using DADA2 and QIIME2 | 扩增子测序(如 16S/ITS)分析:DADA2 + QIIME2。 | 231 | 2.15.0 | |

| Metagenomics & microbiome | coproid | Coprolite host identification pipeline | 粪化石(coprolite)宿主鉴定流程。 | 13 | 2.0.0 | |

| Metagenomics & microbiome | createtaxdb | Automated construction of classifier databases for multiple tools | 自动化并行构建多种宏基因组分类工具的数据库。 | 20 | 2.0.0 | |

| Metagenomics & microbiome | detaxizer | Identify (and optionally remove) sequences; default remove human | 识别并(可选)去除特定序列(默认去除人源污染)。 | 22 | 1.3.0 | |

| Metagenomics & microbiome | funcscan | (Meta-)genome screening for functional and natural product genes | (宏)基因组功能基因与天然产物基因簇筛查。 | 99 | 3.0.0 | |

| Metagenomics & microbiome | mag | Assembly and binning of metagenomes | 宏基因组组装与分箱(MAG 构建)。 | 264 | 5.3.0 | |

| Metagenomics & microbiome | magmap | Mapping reads to large collections of genomes | 将 reads 比对到大型基因组集合的最佳实践流程。 | 10 | 1.0.0 | |

| Metagenomics & microbiome | metapep | From metagenomes to epitopes and beyond | 从宏基因组到表位(epitope)等免疫相关下游分析。 | 12 | 1.0.0 | |

| Metagenomics & microbiome | metatdenovo | De novo assembly/annotation of metatranscriptomic or metagenomic data | 宏转录组/宏基因组的从头组装与注释(支持原核/真核/病毒)。 | 34 | 1.3.0 | |

| Metagenomics & microbiome | taxprofiler | Multi-taxonomic profiling of shotgun short/long read metagenomics | shotgun 宏基因组多类群(多生物界)分类谱分析(短读长/长读长)。 | 175 | 1.2.5 | |

| Genome assembly, annotation & comparative genomics | bacass | Simple bacterial assembly and annotation pipeline | 简单的细菌组装与注释流程。 | 80 | 2.5.0 | |

| Genome assembly, annotation & comparative genomics | bactmap | Mapping-based pipeline for bacterial phylogeny from WGS | 基于比对的细菌 WGS 系统发育/建树流程。 | 61 | 1.0.0 | |

| Genome assembly, annotation & comparative genomics | denovohybrid | Hybrid genome assembly pipeline (under construction) | 混合组装流程(长+短读长)(建设中)。 | 8 | – | |

| Genome assembly, annotation & comparative genomics | genomeannotator | Identify (coding) gene structures in draft genomes | 草图基因组(draft genome)基因结构(编码基因)注释流程。 | 34 | – | |

| Genome assembly, annotation & comparative genomics | genomeassembler | Assembly and scaffolding from long ONT/PacBio HiFi reads | 长读长(ONT/PacBio HiFi)基因组组装与脚手架构建。 | 31 | 1.1.0 | |

| Genome assembly, annotation & comparative genomics | genomeqc | Compare quality of multiple genomes and annotations | 比较多个基因组及其注释质量。 | 19 | – | |

| Genome assembly, annotation & comparative genomics | genomeskim | QC/filter genome skims; organelle assembly and/or analysis | genome skim 数据质控/过滤,并进行细胞器组装或相关分析。 | 3 | – | |

| Genome assembly, annotation & comparative genomics | hgtseq | Investigate horizontal gene transfer from NGS data | 从 NGS 数据研究水平基因转移(HGT)。 | 26 | 1.1.0 | |

| Genome assembly, annotation & comparative genomics | multiplesequencealign | Systematically evaluate MSA methods | 多序列比对(MSA)方法系统评估流程。 | 40 | 1.1.1 | |

| Genome assembly, annotation & comparative genomics | neutronstar | De novo assembly for 10x linked-reads using Supernova | 10x linked-reads 从头组装流程(Supernova)。 | 3 | 1.0.0 | |

| Genome assembly, annotation & comparative genomics | pangenome | Render sequences into a pangenome graph | 将序列集合渲染为泛基因组图(pangenome graph)。 | 102 | 1.1.3 | |

| Genome assembly, annotation & comparative genomics | pairgenomealign | Pairwise genome comparison with LAST + plots | 基于 LAST 的两两基因组比对与可视化绘图。 | 10 | 2.2.1 | |

| Genome assembly, annotation & comparative genomics | phyloplace | Phylogenetic placement with EPA-NG | 使用 EPA-NG 的系统发育定位(placement)流程。 | 13 | 2.0.0 | |

| Genome assembly, annotation & comparative genomics | reportho | Comparative analysis of ortholog predictions | 直系同源(ortholog)预测结果的比较分析流程。 | 11 | 1.1.0 | |

| Immunology & antigen presentation | airrflow | AIRR-seq repertoire analysis using Immcantation | 免疫受体库(BCR/TCR,AIRR-seq)分析:基于 Immcantation。 | 73 | 4.3.1 | |

| Immunology & antigen presentation | epitopeprediction | Epitope prediction and annotation pipeline | 表位(epitope)预测与注释流程。 | 50 | 3.1.0 | |

| Immunology & antigen presentation | hlatyping | Precision HLA typing from NGS data | 基于 NGS 的高精度 HLA 分型流程。 | 76 | 2.1.0 | |

| Immunology & antigen presentation | mhcquant | Identify and quantify MHC eluted peptides from MS raw data | 从质谱原始数据识别并定量 MHC 洗脱肽段。 | 42 | 3.1.0 | |

| Proteomics, metabolomics & protein informatics | ddamsproteomics | Quantitative shotgun MS proteomics | 定量 shotgun 质谱蛋白组流程。 | 4 | – | |

| Proteomics, metabolomics & protein informatics | diaproteomics | Automated quantitative analysis of DIA proteomics MS measurements | DIA 蛋白组质谱数据自动化定量分析流程。 | 21 | 1.2.4 | |

| Proteomics, metabolomics & protein informatics | kmermaid | k-mer similarity analysis pipeline | k-mer 相似性分析流程。 | 23 | 0.1.0-alpha | |

| Proteomics, metabolomics & protein informatics | metaboigniter | Metabolomics MS pre-processing with identification/quantification (MS1/MS2) | 代谢组质谱预处理:基于 MS1/MS2 的鉴定与定量。 | 24 | 2.0.1 | |

| Proteomics, metabolomics & protein informatics | proteinannotator | Protein fasta → annotations | 蛋白序列(FASTA)到注释的自动化流程。 | 8 | – | |

| Proteomics, metabolomics & protein informatics | proteinfamilies | Generation and updating of protein families | 蛋白家族的生成与更新流程。 | 21 | 2.2.0 | |

| Proteomics, metabolomics & protein informatics | proteinfold | Protein 3D structure prediction pipeline | 蛋白三维结构预测流程。 | 94 | 1.1.1 | |

| Proteomics, metabolomics & protein informatics | proteogenomicsdb | Generate protein databases for proteogenomics analysis | 构建蛋白基因组学分析所需的蛋白数据库。 | 7 | 1.0.0 | |

| Proteomics, metabolomics & protein informatics | proteomicslfq | Proteomics label-free quantification (LFQ) analysis pipeline | 蛋白组无标记定量(LFQ)分析流程。 | 37 | 1.0.0 | |

| Proteomics, metabolomics & protein informatics | quantms | Quantitative MS workflow (DDA-LFQ, DDA-Isobaric, DIA-LFQ) | 定量蛋白组流程:支持 DDA-LFQ、等标记 DDA、DIA-LFQ 等。 | 34 | 1.2.0 | |

| Proteomics, metabolomics & protein informatics | ribomsqc | QC pipeline monitoring MS performance in ribonucleoside analysis | 核苷相关质谱分析的性能监控与质控流程。 | 0 | – | |

| Imaging & other modalities | cellpainting | (no description shown) | Cell Painting 相关流程(原表未给出描述)。 | 8 | – | |

| Imaging & other modalities | imcyto | Image Mass Cytometry analysis pipeline | 成像质谱细胞术(IMC)图像/数据分析流程。 | 26 | 1.0.0 | |

| Imaging & other modalities | liverctanalysis | UNDER CONSTRUCTION: pipeline for liver CT analysis | 肝脏 CT 影像分析流程(建设中)。 | 0 | – | |

| Imaging & other modalities | lsmquant | Process and analyze light-sheet microscopy images | 光片显微(light-sheet)图像处理与分析流程。 | 5 | – | |

| Imaging & other modalities | mcmicro | Whole-slide multi-channel image processing to single-cell data | 多通道全切片图像到单细胞数据的端到端处理流程。 | 29 | – | |

| Imaging & other modalities | rangeland | Remotely sensed imagery pipeline for land-cover trend files | 遥感影像处理流程:结合辅助数据生成土地覆盖变化趋势文件。 | 9 | 1.0.0 | |

| Imaging & other modalities | troughgraph | Quantitative assessment of permafrost landscapes and thaw level | 冻土景观与冻融程度的定量评估流程。 | 2 | – | |

| Data acquisition, QC & utilities | bamtofastq | Convert BAM/CRAM to FASTQ and perform QC | BAM/CRAM 转 FASTQ 并进行质控。 | 31 | 2.2.0 | |

| Data acquisition, QC & utilities | datasync | System operation / automation workflows | 系统运维/自动化工作流(数据同步与操作任务)。 | 10 | – | |

| Data acquisition, QC & utilities | demo | Simple nf-core style pipeline for workshops and demos | nf-core 风格的示例/教学演示流程。 | 10 | 1.0.2 | |

| Data acquisition, QC & utilities | demultiplex | Demultiplexing pipeline for sequencing data | 测序数据拆样/解复用流程。 | 52 | 1.7.0 | |

| Data acquisition, QC & utilities | fastqrepair | Recover corrupted FASTQ.gz, fix reads, remove unpaired, reorder | 修复损坏 FASTQ.gz:修正不合规 reads、移除未配对 reads、重排序等。 | 6 | 1.0.0 | |

| Data acquisition, QC & utilities | fastquorum | Produce consensus reads using UMIs/barcodes | 基于 UMI/条形码生成共识 reads 的流程。 | 27 | 1.2.0 | |

| Data acquisition, QC & utilities | fetchngs | Fetch metadata and raw FastQ files from public databases | 从公共数据库抓取元数据与原始 FASTQ。 | 185 | 1.12.0 | |

| Data acquisition, QC & utilities | nanoseq | Nanopore demultiplexing, QC and alignment pipeline | Nanopore 数据拆样、质控与比对流程。 | 218 | 3.1.0 | |

| Data acquisition, QC & utilities | readsimulator | Simulate sequencing reads (amplicon, metagenome, WGS, etc.) | 测序 reads 模拟流程(扩增子、靶向捕获、宏基因组、全基因组等)。 | 33 | 1.0.1 | |

| Data acquisition, QC & utilities | references | Build references for multiple use cases | 多用途参考资源构建流程。 | 19 | 0.1 | |

| Data acquisition, QC & utilities | seqinspector | QC-only pipeline producing global/group-specific MultiQC reports | 纯质控流程:运行多种 QC 工具并输出全局/分组 MultiQC 报告。 | 16 | – | |

| Data acquisition, QC & utilities | seqsubmit | Submit data to ENA | 向 ENA 提交数据的流程。 | 3 | – | |

| Genome editing & screens | crisprseq | CRISPR edited data analysis (targeted + screens) | CRISPR 编辑数据分析:靶向编辑质量评估与 pooled screen 关键基因发现。 | 53 | 2.3.0 | |

| Genome editing & screens | crisprvar | Evaluate outcomes from genome editing experiments (WIP) | 基因编辑实验结果评估流程(WIP)。 | 5 | – | |

| Other methods / modelling / non-bio | deepmodeloptim | Stochastic Testing and Input Manipulation for Unbiased Learning Systems | 无偏学习系统的随机测试与输入操控(机器学习相关)。 | 28 | – | |

| Other methods / modelling / non-bio | deepmutscan | Deep mutational scanning (DMS) analysis pipeline | 深度突变扫描(DMS)数据分析流程。 | 3 | – | |

| Other methods / modelling / non-bio | diseasemodulediscovery | Network-based disease module identification | 基于网络的疾病模块识别流程。 | 5 | – | |

| Other methods / modelling / non-bio | drugresponseeval | Evaluate drug response prediction models | 药物反应预测模型的评估流程(统计与生物学上更严谨)。 | 24 | 1.1.0 | |

| Other methods / modelling / non-bio | meerpipe | Astronomy pipeline for MeerKAT pulsar data | MeerKAT 脉冲星数据天文处理流程(成像与计时分析)。 | 10 | – | |

| Other methods / modelling / non-bio | omicsgenetraitassociation | Multi-omics integration and trait association analysis pipeline | 多组学整合并进行性状/表型关联分析的流程。 | 11 | – | |

| Other methods / modelling / non-bio | spinningjenny | Simulating the first industrial revolution using agent-based models | 基于主体(Agent-based)模型模拟第一次工业革命的流程。 | 4 | – |

nf-core pipelines (selected)

- nf-core/viralmetagenome is a bioinformatics best-practice analysis pipeline for reconstructing consensus genomes and to identify intra-host variants from metagenomic sequencing data or enriched based sequencing data like hybrid capture.

- nf-core/viralrecon is a bioinformatics analysis pipeline used to perform assembly and intra-host/low-frequency variant calling for viral samples.

- nf-core/vipr is a bioinformatics best-practice analysis pipeline for assembly and intrahost / low-frequency variant calling for viral samples.

- nfcore/ampliseq is a bioinformatics analysis pipeline used for amplicon sequencing, supporting denoising of any amplicon and supports a variety of taxonomic databases for taxonomic assignment including 16S, ITS, CO1 and 18S.

- nf-core/mag is a bioinformatics best-practise analysis pipeline for assembly, binning and annotation of metagenomes.

- nf-core/taxprofiler is a bioinformatics best-practice analysis pipeline for taxonomic classification and profiling of shotgun short- and long-read metagenomic data.

- nf-core/funcscan is a bioinformatics best-practice analysis pipeline for the screening of nucleotide sequences such as assembled contigs for functional genes.

- nf-core/createtaxdb is a bioinformatics pipeline that constructs custom metagenomic classifier databases for multiple classifiers and profilers from the same input reference genome set in a highly automated and parallelised manner.

- nf-core/detaxizer is a bioinformatics pipeline that checks for the presence of a specific taxon in (meta)genomic fastq files and to filter out this taxon or taxonomic subtree.

Phyloseq_Group9_10_11_pre-FMT.Rmd

author: “”

date: ‘r format(Sys.time(), "%d %m %Y")‘

header-includes:

-

\usepackage{color, fancyvrb} output: rmdformats::readthedown: highlight: kate number_sections : yes pdf_document: toc: yes toc_depth: 2 number_sections : yes

#install.packages(c("picante", "rmdformats"))

#mamba install -c conda-forge freetype libpng harfbuzz fribidi

#mamba install -c conda-forge r-systemfonts r-svglite r-kableExtra freetype fontconfig harfbuzz fribidi libpng

library(knitr)

library(rmdformats)

library(readxl)

library(dplyr)

library(kableExtra)

library(openxlsx)

library(DESeq2)

library(writexl)

options(max.print="75")

knitr::opts_chunk$set(fig.width=8,

fig.height=6,

eval=TRUE,

cache=TRUE,

echo=TRUE,

prompt=FALSE,

tidy=FALSE,

comment=NA,

message=FALSE,

warning=FALSE)

opts_knit$set(width=85)

#rmarkdown::render('Phyloseq_Group9_10_11_pre-FMT.Rmd',output_file='Phyloseq_Group9_10_11_pre-FMT.html')

# Phyloseq R library

#* Phyloseq web site : https://joey711.github.io/phyloseq/index.html

#* See in particular tutorials for

# - importing data: https://joey711.github.io/phyloseq/import-data.html

# - heat maps: https://joey711.github.io/phyloseq/plot_heatmap-examples.htmlData

Import raw data and assign sample key:

#extend qiime2_metadata_for_qza_to_phyloseq.tsv with Diet and Flora

#setwd("~/DATA/Data_Laura_16S_2/core_diversity_e4753")

#map_corrected <- read.csv("qiime2_metadata_for_qza_to_phyloseq.tsv", sep="\t", row.names=1)

#knitr::kable(map_corrected) %>% kable_styling(bootstrap_options = c("striped", "hover", "condensed", "responsive"))Prerequisites to be installed

- R : https://pbil.univ-lyon1.fr/CRAN/

- R studio : https://www.rstudio.com/products/rstudio/download/#download

install.packages("dplyr") # To manipulate dataframes

install.packages("readxl") # To read Excel files into R

install.packages("ggplot2") # for high quality graphics

install.packages("heatmaply")

source("https://bioconductor.org/biocLite.R")

biocLite("phyloseq")#mamba install -c conda-forge r-ggplot2 r-vegan r-data.table

#BiocManager::install("microbiome")

#install.packages("ggpubr")

#install.packages("heatmaply")

library("readxl") # necessary to import the data from Excel file

library("ggplot2") # graphics

library("picante")

library("microbiome") # data analysis and visualisation

library("phyloseq") # also the basis of data object. Data analysis and visualisation

library("ggpubr") # publication quality figures, based on ggplot2

library("dplyr") # data handling, filter and reformat data frames

library("RColorBrewer") # nice color options

library("heatmaply")

library(vegan)

library(gplots)

#install.packages("openxlsx")

library(openxlsx)Read the data and create phyloseq objects

Three tables are needed

- OTU

- Taxonomy

- Samples

library(tidyr)

# For QIIME1

#ps.ng.tax <- import_biom("./exported_table/feature-table.biom", "./exported-tree/tree.nwk")

# For QIIME2

#install.packages("remotes")

#remotes::install_github("jbisanz/qiime2R")

#"core_metrics_results/rarefied_table.qza", rarefying performed in the code, therefore import the raw table.

library(qiime2R)

ps_raw <- qza_to_phyloseq(

features = "table.qza", #cp ../Data_Karoline_16S_2025/dada2_tests2/test_7_f240_r240/table.qza .

tree = "rooted-tree.qza", #cp ../Data_Karoline_16S_2025/rooted-tree.qza .

metadata = "qiime2_metadata_for_qza_to_phyloseq.tsv" #cp ../Data_Karoline_16S_2025/qiime2_metadata_for_qza_to_phyloseq.tsv .

)

# or

#biom convert \

# -i ./exported_table/feature-table.biom \

# -o ./exported_table/feature-table-v1.biom \

# --to-json

#ps_raw <- import_biom("./exported_table/feature-table-v1.biom", treefilename="./exported-tree/tree.nwk")

sample <- read.csv("./qiime2_metadata_for_qza_to_phyloseq.tsv", sep="\t", row.names=1)

SAM = sample_data(sample, errorIfNULL = T)

#> setdiff(rownames(SAM), sample_names(ps_raw))

#[1] "sample-L9" should be removed since the low reads

ps_base <- merge_phyloseq(ps_raw, SAM)

print(ps_base)

taxonomy <- read.delim("taxonomy.tsv", sep="\t", header=TRUE) #cp ../Data_Karoline_16S_2025/exported-taxonomy/taxonomy.tsv .

#head(taxonomy)

# Separate taxonomy string into separate ranks

taxonomy_df <- taxonomy %>% separate(Taxon, into = c("Domain","Phylum","Class","Order","Family","Genus","Species"), sep = ";", fill = "right", extra = "drop")

# Use Feature.ID as rownames

rownames(taxonomy_df) <- taxonomy_df$Feature.ID

taxonomy_df <- taxonomy_df[, -c(1, ncol(taxonomy_df))] # Drop Feature.ID and Confidence

# Create tax_table

tax_table_final <- phyloseq::tax_table(as.matrix(taxonomy_df))

# Merge tax_table with existing phyloseq object

ps_base <- merge_phyloseq(ps_base, tax_table_final)

# Check

ps_base

#colnames(phyloseq::tax_table(ps_base)) <- c("Domain","Phylum","Class","Order","Family","Genus","Species")

saveRDS(ps_base, "./ps_base.rds")Visualize data

sample_names(ps_base)

rank_names(ps_base)

sample_variables(ps_base)

# Define sample names once

samples <- c(

#"sample-A1","sample-A2","sample-A5","sample-A6","sample-A7","sample-A8","sample-A9","sample-A10", #RESIZED: "sample-A3","sample-A4","sample-A11",

#"sample-B1","sample-B2","sample-B3","sample-B4","sample-B5","sample-B6","sample-B7", #RESIZED: "sample-B8","sample-B9","sample-B10","sample-B11","sample-B12","sample-B13","sample-B14","sample-B15","sample-B16",

#"sample-C1","sample-C2","sample-C3","sample-C4","sample-C5","sample-C6","sample-C7", #RESIZED: "sample-C8","sample-C9","sample-C10",

#"sample-E1","sample-E2","sample-E3","sample-E4","sample-E5","sample-E6","sample-E7","sample-E8","sample-E9","sample-E10", #RESIZED:

#"sample-F1","sample-F2","sample-F3","sample-F4","sample-F5",

"sample-G1","sample-G2","sample-G3","sample-G4","sample-G5","sample-G6",

"sample-H1","sample-H2","sample-H3","sample-H4","sample-H5","sample-H6",

"sample-I1","sample-I2","sample-I3","sample-I4","sample-I5","sample-I6",

"sample-J1","sample-J2","sample-J3","sample-J4","sample-J10","sample-J11", #RESIZED: "sample-J5","sample-J8","sample-J9", "sample-J6","sample-J7",

"sample-K1","sample-K2","sample-K3","sample-K4","sample-K5","sample-K6", #RESIZED: "sample-K7","sample-K8","sample-K9","sample-K10", "sample-K11","sample-K12","sample-K13","sample-K14","sample-K15",

"sample-L2","sample-L3","sample-L4","sample-L5","sample-L6" #RESIZED:"sample-L1","sample-L7","sample-L8","sample-L10","sample-L11","sample-L12","sample-L13","sample-L14","sample-L15",

#"sample-M1","sample-M2","sample-M3","sample-M4","sample-M5","sample-M6","sample-M7","sample-M8",

#"sample-N1","sample-N2","sample-N3","sample-N4","sample-N5","sample-N6","sample-N7","sample-N8","sample-N9","sample-N10",

#"sample-O1","sample-O2","sample-O3","sample-O4","sample-O5","sample-O6","sample-O7","sample-O8"

)

ps_pruned <- prune_samples(samples, ps_base)

sample_names(ps_pruned)

rank_names(ps_pruned)

sample_variables(ps_pruned)No samples were excluded as low-depth outliers (library sizes below the minimum depth threshold of 1,000 reads), and the remaining dataset (ps_filt) contains only samples meeting this depth cutoff with taxa retained only if they have nonzero total counts.

# ------------------------------------------------------------

# Filter low-depth samples (recommended for all analyses)

# ------------------------------------------------------------

min_depth <- 1000 # <-- adjust to your data / study design, keeps all!

ps_filt <- prune_samples(sample_sums(ps_pruned) >= min_depth, ps_pruned)

ps_filt <- prune_taxa(taxa_sums(ps_filt) > 0, ps_filt)

# Keep a depth summary for reporting / QC

depth_summary <- summary(sample_sums(ps_filt))

depth_summaryDifferential abundance (DESeq2) → ps_deseq: non-rarefied integer counts derived from ps_filt, with optional count-based taxon prefilter

(default: taxa total counts ≥ 10 across all samples)

From ps_filt (e.g. 5669 taxa and 239 samples), we branch into analysis-ready objects in two directions:

-

Direction 1 for diversity analyses

- Alpha diversity:

ps_rarefied✅ (common) - Beta diversity:

- Unweighted UniFrac / Jaccard:

ps_rarefied✅ (often recommended) - Bray–Curtis / ordination on abundances:

ps_relor Hellinger ✅ (rarefaction optional) - Aitchison (CLR): CLR-transformed (non-rarefied) ✅ (no rarefaction)

- Alpha diversity:

Normalize number of reads in each sample using median sequencing depth.

# RAREFACTION

set.seed(9242) # This will help in reproducing the filtering and nomalisation.

ps_rarefied <- rarefy_even_depth(ps_filt, sample.size = 6389)

#total <- 6389

# # NORMALIZE number of reads in each sample using median sequencing depth.

# total = median(sample_sums(ps.ng.tax))

# #> total

# #[1] 42369

# standf = function(x, t=total) round(t * (x / sum(x)))

# ps.ng.tax = transform_sample_counts(ps.ng.tax, standf)

# ps_rel <- microbiome::transform(ps.ng.tax, "compositional")

#

# saveRDS(ps.ng.tax, "./ps.ng.tax.rds")-

Direction 2 for taxonomic composition plots

- Taxonomic composition →

ps_rel: relative abundance (compositional) computed after sample filtering (e.g. 5669 taxa and 239 samples) - Optional cleaner composition plots →

ps_abund/ps_abund_rel: taxa filtered for plotting (e.g., keep taxa with mean relative abundance > 0.1%); (e.g. 95 taxa and 239 samples)ps_abund= counts,ps_abund_rel= relative abundance (use for visualization, not DESeq2)

- Taxonomic composition →

For the heatmaps, we focus on the most abundant OTUs by first converting counts to relative abundances within each sample. We then filter to retain only OTUs whose mean relative abundance across all samples exceeds 0.1% (0.001). We are left with 199 OTUs which makes the reading much more easy.

# 1) Convert to relative abundances

ps_rel <- transform_sample_counts(ps_filt, function(x) x / sum(x))

# 2) Get the logical vector of which OTUs to keep (based on relative abundance)

keep_vector <- phyloseq::filter_taxa(

ps_rel,

function(x) mean(x) > 0.001,

prune = FALSE

)

# 3) Use the TRUE/FALSE vector to subset absolute abundance data

ps_abund <- prune_taxa(names(keep_vector)[keep_vector], ps_filt)

# 4) Normalize the final subset to relative abundances per sample

ps_abund_rel <- transform_sample_counts(

ps_abund,

function(x) x / sum(x)

) library(stringr)

#for id in 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157 158 159 160 161 162 163 164 165 166 167 168 169 170 171 172 173 174 175 176 177 178 179 180 181 182 183 184 185 186 187 188 189 190 191 192 193 194 195 196 197 198 199 200 201 202 203 204 205 206; do

#for id in 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62; do

# echo "phyloseq::tax_table(ps_abund_rel)[${id},\"Domain\"] <- str_split(phyloseq::tax_table(ps_abund_rel)[${id},\"Domain\"], \"__\")[[1]][2]"

# echo "phyloseq::tax_table(ps_abund_rel)[${id},\"Phylum\"] <- str_split(phyloseq::tax_table(ps_abund_rel)[${id},\"Phylum\"], \"__\")[[1]][2]"

# echo "phyloseq::tax_table(ps_abund_rel)[${id},\"Class\"] <- str_split(phyloseq::tax_table(ps_abund_rel)[${id},\"Class\"], \"__\")[[1]][2]"

# echo "phyloseq::tax_table(ps_abund_rel)[${id},\"Order\"] <- str_split(phyloseq::tax_table(ps_abund_rel)[${id},\"Order\"], \"__\")[[1]][2]"

# echo "phyloseq::tax_table(ps_abund_rel)[${id},\"Family\"] <- str_split(phyloseq::tax_table(ps_abund_rel)[${id},\"Family\"], \"__\")[[1]][2]"

# echo "phyloseq::tax_table(ps_abund_rel)[${id},\"Genus\"] <- str_split(phyloseq::tax_table(ps_abund_rel)[${id},\"Genus\"], \"__\")[[1]][2]"

# echo "phyloseq::tax_table(ps_abund_rel)[${id},\"Species\"] <- str_split(phyloseq::tax_table(ps_abund_rel)[${id},\"Species\"], \"__\")[[1]][2]"

#done

phyloseq::tax_table(ps_abund_rel)[1,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[1,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[1,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[1,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[1,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[1,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[1,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[1,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[1,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[1,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[1,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[1,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[1,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[1,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[2,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[2,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[2,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[2,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[2,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[2,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[2,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[2,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[2,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[2,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[2,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[2,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[2,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[2,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[3,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[3,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[3,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[3,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[3,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[3,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[3,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[3,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[3,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[3,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[3,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[3,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[3,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[3,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[4,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[4,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[4,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[4,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[4,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[4,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[4,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[4,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[4,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[4,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[4,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[4,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[4,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[4,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[5,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[5,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[5,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[5,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[5,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[5,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[5,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[5,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[5,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[5,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[5,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[5,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[5,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[5,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[6,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[6,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[6,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[6,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[6,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[6,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[6,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[6,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[6,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[6,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[6,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[6,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[6,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[6,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[7,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[7,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[7,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[7,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[7,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[7,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[7,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[7,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[7,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[7,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[7,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[7,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[7,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[7,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[8,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[8,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[8,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[8,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[8,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[8,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[8,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[8,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[8,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[8,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[8,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[8,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[8,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[8,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[9,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[9,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[9,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[9,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[9,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[9,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[9,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[9,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[9,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[9,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[9,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[9,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[9,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[9,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[10,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[10,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[10,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[10,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[10,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[10,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[10,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[10,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[10,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[10,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[10,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[10,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[10,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[10,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[11,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[11,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[11,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[11,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[11,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[11,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[11,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[11,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[11,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[11,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[11,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[11,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[11,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[11,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[12,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[12,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[12,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[12,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[12,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[12,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[12,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[12,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[12,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[12,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[12,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[12,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[12,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[12,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[13,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[13,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[13,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[13,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[13,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[13,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[13,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[13,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[13,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[13,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[13,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[13,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[13,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[13,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[14,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[14,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[14,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[14,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[14,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[14,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[14,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[14,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[14,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[14,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[14,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[14,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[14,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[14,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[15,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[15,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[15,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[15,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[15,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[15,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[15,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[15,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[15,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[15,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[15,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[15,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[15,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[15,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[16,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[16,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[16,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[16,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[16,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[16,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[16,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[16,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[16,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[16,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[16,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[16,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[16,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[16,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[17,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[17,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[17,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[17,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[17,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[17,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[17,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[17,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[17,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[17,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[17,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[17,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[17,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[17,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[18,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[18,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[18,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[18,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[18,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[18,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[18,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[18,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[18,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[18,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[18,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[18,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[18,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[18,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[19,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[19,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[19,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[19,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[19,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[19,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[19,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[19,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[19,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[19,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[19,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[19,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[19,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[19,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[20,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[20,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[20,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[20,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[20,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[20,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[20,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[20,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[20,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[20,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[20,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[20,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[20,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[20,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[21,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[21,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[21,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[21,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[21,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[21,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[21,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[21,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[21,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[21,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[21,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[21,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[21,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[21,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[22,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[22,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[22,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[22,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[22,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[22,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[22,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[22,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[22,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[22,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[22,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[22,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[22,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[22,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[23,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[23,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[23,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[23,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[23,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[23,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[23,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[23,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[23,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[23,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[23,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[23,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[23,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[23,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[24,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[24,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[24,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[24,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[24,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[24,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[24,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[24,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[24,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[24,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[24,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[24,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[24,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[24,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[25,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[25,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[25,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[25,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[25,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[25,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[25,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[25,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[25,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[25,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[25,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[25,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[25,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[25,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[26,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[26,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[26,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[26,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[26,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[26,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[26,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[26,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[26,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[26,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[26,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[26,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[26,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[26,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[27,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[27,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[27,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[27,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[27,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[27,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[27,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[27,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[27,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[27,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[27,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[27,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[27,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[27,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[28,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[28,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[28,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[28,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[28,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[28,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[28,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[28,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[28,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[28,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[28,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[28,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[28,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[28,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[29,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[29,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[29,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[29,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[29,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[29,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[29,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[29,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[29,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[29,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[29,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[29,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[29,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[29,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[30,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[30,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[30,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[30,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[30,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[30,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[30,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[30,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[30,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[30,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[30,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[30,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[30,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[30,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[31,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[31,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[31,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[31,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[31,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[31,"Class"], "__")[[1]][2]