Microbiome analysis

Sample collection and storage

Fecal samples were collected from mice between 9–10 am at the indicated timepoints and immediately frozen at −80 °C until use.

DNA extraction

Genomic DNA was extracted from fecal pellets using the QIAamp Fast DNA Stool Mini Kit (Qiagen, #51604) following a protocol optimized for murine stool. Briefly, pellets were mechanically homogenized in a Precellys® 24 homogenizer (Bertin Technologies) using Soil grinding tubes (Bertin Technologies, #SK38), and DNA was extracted according to the manufacturer’s instructions. DNA yield was measured with NanoDrop, and the concentration was adjusted to 10 ng/µl.

16S rRNA gene amplicon library preparation and sequencing

16S rRNA amplicons (V3–V4 region) were generated using degenerate primers that contain Illumina adapter overhang sequences: forward (5′-TCGTCGGCAGCGTCAGATGTGTATAAGAGACAGCCTACGGGNGGCWGCAG-3′) and reverse (5′-GTCTCGTGGGCTCGGAGATGTGTATAAGAGACAGGACTACHVGGGTATCTAATCC-3′), following established Illumina 16S library preparation guidance. [1] Samples were multiplexed using the Nextera XT Index Kit (Illumina, #FC-131-1001) and sequenced by 500-cycle paired-end sequencing (2×250 bp) on an Illumina MiSeq (Illumina, #MS-102-2003).

Bioinformatics processing and QC

Raw FASTQ files were inspected with FastQC. [2] Sequence processing was performed in QIIME 2. [3] Demultiplexed paired-end reads were imported using a Phred33 manifest and per-base quality profiles were reviewed to guide trimming/truncation. ASVs were inferred using the QIIME 2 DADA2 workflow. [4] Primer/adapter-proximal bases were removed using fixed 5′ trimming (17 bp forward; 21 bp reverse), reads were filtered using expected-error thresholds, and multiple forward/reverse truncation length combinations were evaluated to optimize read retention and paired-end merging for the ~460 bp V3–V4 amplicon. Final denoising parameters were selected based on denoising statistics (successful merging and proportion of non-chimeric reads across samples), and the resulting feature table and representative ASV sequences were used for downstream analyses.

Taxonomy was assigned by VSEARCH-based consensus classification [5] against SILVA (release 132) [6] at 97% identity. Downstream visualization and statistics were performed in R using phyloseq. [7]

Diversity analyses, ordination, and statistics

Alpha diversity (Observed features/OTU richness, Shannon diversity, and Faith’s phylogenetic diversity) was calculated on rarefied data and compared between groups using nonparametric rank-based tests (Wilcoxon rank-sum for two-group comparisons; Kruskal–Wallis for ≥3 groups with post-hoc pairwise Wilcoxon tests). [8–10]

Group differences in community composition (beta-diversity) were assessed using Bray–Curtis dissimilarities [11] (computed after Hellinger transformation of abundance profiles) [12] and tested using PERMANOVA (adonis2 in vegan) with permutation-based significance. [13–14] Ordination was performed by principal coordinates analysis (PCoA) on the Bray–Curtis distance matrix to visualize sample-to-sample relationships. [15]

Differential abundance testing was performed using DESeq2 (negative binomial GLM). [16] Across analyses involving multiple hypothesis tests, p-values were adjusted using the Benjamini–Hochberg false discovery rate procedure. [17]

Pathway inference

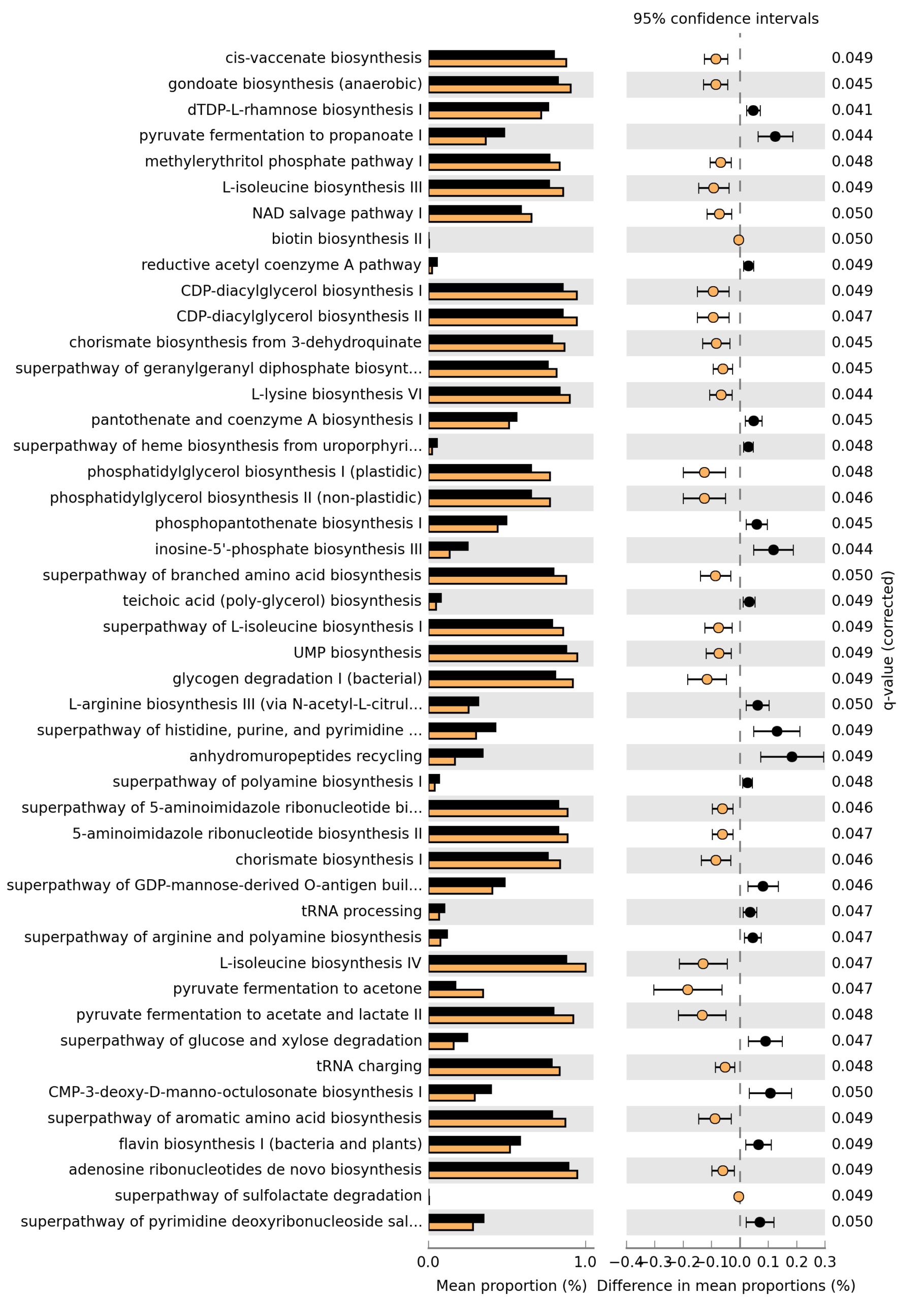

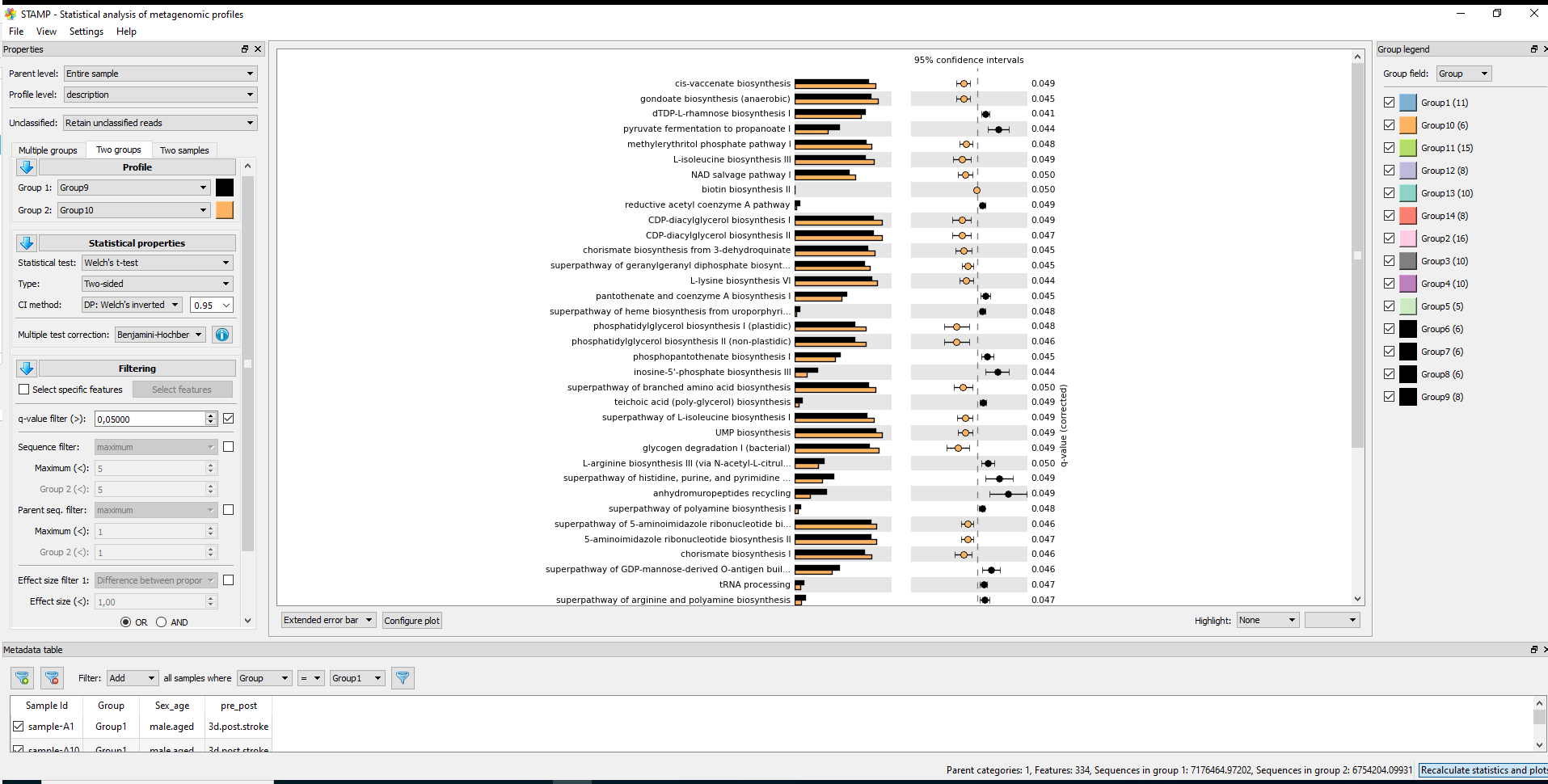

Predicted functional potential (gene families and pathways) was inferred from 16S ASVs using PICRUSt2. [18]

References

Illumina. 16S Metagenomic Sequencing Library Preparation (Part #15044223 Rev. B) [Internet]. Illumina; 2013. (Includes the overhang adapter primer structure and V3–V4 primer sequences).

Andrews S. FastQC: a quality control tool for high throughput sequence data [Internet]. Babraham Bioinformatics; 2010 [cited 2025 Dec 18]. Available from: https://www.bioinformatics.babraham.ac.uk/projects/fastqc/

Bolyen E, Rideout JR, Dillon MR, Bokulich NA, Abnet CC, Al-Ghalith GA, et al. Reproducible, interactive, scalable and extensible microbiome data science using QIIME 2. Nat Biotechnol. 2019;37(8):852–857. doi:10.1038/s41587-019-0209-9.

Callahan BJ, McMurdie PJ, Rosen MJ, Han AW, Johnson AJA, Holmes SP. DADA2: High-resolution sample inference from Illumina amplicon data. Nat Methods. 2016;13(7):581–583. doi:10.1038/nmeth.3869.

Rognes T, Flouri T, Nichols B, Quince C, Mahé F. VSEARCH: a versatile open source tool for metagenomics. PeerJ. 2016;4:e2584. doi:10.7717/peerj.2584.

Quast C, Pruesse E, Yilmaz P, Gerken J, Schweer T, Yarza P, et al. The SILVA ribosomal RNA gene database project: improved data processing and web-based tools. Nucleic Acids Res. 2013;41(D1):D590–D596. doi:10.1093/nar/gks1219.

McMurdie PJ, Holmes S. phyloseq: An R package for reproducible interactive analysis and graphics of microbiome census data. PLoS One. 2013;8(4):e61217. doi:10.1371/journal.pone.0061217.

Wilcoxon F. Individual comparisons by ranking methods. Biometrics Bulletin. 1945;1(6):80–83. doi:10.2307/3001968.

Kruskal WH, Wallis WA. Use of ranks in one-criterion variance analysis. J Am Stat Assoc. 1952;47:583–621. doi:10.1080/01621459.1952.10483441.

(If you want an additional general nonparametric/post-hoc reference beyond Wilcoxon/Kruskal–Wallis, tell me your preferred one and I’ll format it consistently.)

Bray JR, Curtis JT. An ordination of the upland forest communities of southern Wisconsin. Ecol Monogr. 1957;27:325–349. doi:10.2307/1942268.

Legendre P, Gallagher ED. Ecologically meaningful transformations for ordination of species data. Oecologia. 2001;129(2):271–280. doi:10.1007/s004420100716.

Anderson MJ. A new method for non-parametric multivariate analysis of variance. Austral Ecol. 2001;26:32–46. doi:10.1111/j.1442-9993.2001.01070.pp.x.

Oksanen J, Simpson GL, Blanchet FG, Kindt R, Legendre P, Minchin PR, et al. vegan: Community Ecology Package [Internet]. R package. Available from: https://CRAN.R-project.org/package=vegan. doi:10.32614/CRAN.package.vegan.

Gower JC. Some distance properties of latent root and vector methods used in multivariate analysis. Biometrika. 1966;53(3–4):325–338. doi:10.1093/biomet/53.3-4.325.

Love MI, Huber W, Anders S. Moderated estimation of fold change and dispersion for RNA-seq data with DESeq2. Genome Biol. 2014;15:550. doi:10.1186/s13059-014-0550-8.

Benjamini Y, Hochberg Y. Controlling the false discovery rate: a practical and powerful approach to multiple testing. J R Stat Soc Series B. 1995;57(1):289–300. doi:10.1111/j.2517-6161.1995.tb02031.x.

Douglas GM, Maffei VJ, Zaneveld JR, Yurgel SN, Brown JR, Taylor CM, et al. PICRUSt2 for prediction of metagenome functions. Nat Biotechnol. 2020;38(6):685–688. doi:10.1038/s41587-020-0548-6. (slamo.biochem.dal.ca)

Phyloseq_Group9_10_11_pre-FMT.Rmd

author: “”

date: ‘r format(Sys.time(), "%d %m %Y")‘

header-includes:

-

\usepackage{color, fancyvrb}

output:

rmdformats::readthedown:

highlight: kate

number_sections : yes

pdf_document:

toc: yes

toc_depth: 2

number_sections : yes

#install.packages(c("picante", "rmdformats"))

#mamba install -c conda-forge freetype libpng harfbuzz fribidi

#mamba install -c conda-forge r-systemfonts r-svglite r-kableExtra freetype fontconfig harfbuzz fribidi libpng

library(knitr)

library(rmdformats)

library(readxl)

library(dplyr)

library(kableExtra)

library(openxlsx)

library(DESeq2)

library(writexl)

options(max.print="75")

knitr::opts_chunk$set(fig.width=8,

fig.height=6,

eval=TRUE,

cache=TRUE,

echo=TRUE,

prompt=FALSE,

tidy=FALSE,

comment=NA,

message=FALSE,

warning=FALSE)

opts_knit$set(width=85)

#rmarkdown::render('Phyloseq_Group9_10_11_pre-FMT.Rmd',output_file='Phyloseq_Group9_10_11_pre-FMT.html')

# Phyloseq R library

#* Phyloseq web site : https://joey711.github.io/phyloseq/index.html

#* See in particular tutorials for

# - importing data: https://joey711.github.io/phyloseq/import-data.html

# - heat maps: https://joey711.github.io/phyloseq/plot_heatmap-examples.html

Data

Import raw data and assign sample key:

#extend qiime2_metadata_for_qza_to_phyloseq.tsv with Diet and Flora

#setwd("~/DATA/Data_Laura_16S_2/core_diversity_e4753")

#map_corrected <- read.csv("qiime2_metadata_for_qza_to_phyloseq.tsv", sep="\t", row.names=1)

#knitr::kable(map_corrected) %>% kable_styling(bootstrap_options = c("striped", "hover", "condensed", "responsive"))

Prerequisites to be installed

install.packages("dplyr") # To manipulate dataframes

install.packages("readxl") # To read Excel files into R

install.packages("ggplot2") # for high quality graphics

install.packages("heatmaply")

source("https://bioconductor.org/biocLite.R")

biocLite("phyloseq")

#mamba install -c conda-forge r-ggplot2 r-vegan r-data.table

#BiocManager::install("microbiome")

#install.packages("ggpubr")

#install.packages("heatmaply")

library("readxl") # necessary to import the data from Excel file

library("ggplot2") # graphics

library("picante")

library("microbiome") # data analysis and visualisation

library("phyloseq") # also the basis of data object. Data analysis and visualisation

library("ggpubr") # publication quality figures, based on ggplot2

library("dplyr") # data handling, filter and reformat data frames

library("RColorBrewer") # nice color options

library("heatmaply")

library(vegan)

library(gplots)

#install.packages("openxlsx")

library(openxlsx)

Read the data and create phyloseq objects

Three tables are needed

library(tidyr)

# For QIIME1

#ps.ng.tax <- import_biom("./exported_table/feature-table.biom", "./exported-tree/tree.nwk")

# For QIIME2

#install.packages("remotes")

#remotes::install_github("jbisanz/qiime2R")

#"core_metrics_results/rarefied_table.qza", rarefying performed in the code, therefore import the raw table.

library(qiime2R)

ps_raw <- qza_to_phyloseq(

features = "table.qza", #cp ../Data_Karoline_16S_2025/dada2_tests2/test_7_f240_r240/table.qza .

tree = "rooted-tree.qza", #cp ../Data_Karoline_16S_2025/rooted-tree.qza .

metadata = "qiime2_metadata_for_qza_to_phyloseq.tsv" #cp ../Data_Karoline_16S_2025/qiime2_metadata_for_qza_to_phyloseq.tsv .

)

# or

#biom convert \

# -i ./exported_table/feature-table.biom \

# -o ./exported_table/feature-table-v1.biom \

# --to-json

#ps_raw <- import_biom("./exported_table/feature-table-v1.biom", treefilename="./exported-tree/tree.nwk")

sample <- read.csv("./qiime2_metadata_for_qza_to_phyloseq.tsv", sep="\t", row.names=1)

SAM = sample_data(sample, errorIfNULL = T)

#> setdiff(rownames(SAM), sample_names(ps_raw))

#[1] "sample-L9" should be removed since the low reads

ps_base <- merge_phyloseq(ps_raw, SAM)

print(ps_base)

taxonomy <- read.delim("taxonomy.tsv", sep="\t", header=TRUE) #cp ../Data_Karoline_16S_2025/exported-taxonomy/taxonomy.tsv .

#head(taxonomy)

# Separate taxonomy string into separate ranks

taxonomy_df <- taxonomy %>% separate(Taxon, into = c("Domain","Phylum","Class","Order","Family","Genus","Species"), sep = ";", fill = "right", extra = "drop")

# Use Feature.ID as rownames

rownames(taxonomy_df) <- taxonomy_df$Feature.ID

taxonomy_df <- taxonomy_df[, -c(1, ncol(taxonomy_df))] # Drop Feature.ID and Confidence

# Create tax_table

tax_table_final <- phyloseq::tax_table(as.matrix(taxonomy_df))

# Merge tax_table with existing phyloseq object

ps_base <- merge_phyloseq(ps_base, tax_table_final)

# Check

ps_base

#colnames(phyloseq::tax_table(ps_base)) <- c("Domain","Phylum","Class","Order","Family","Genus","Species")

saveRDS(ps_base, "./ps_base.rds")

Visualize data

sample_names(ps_base)

rank_names(ps_base)

sample_variables(ps_base)

# Define sample names once

samples <- c(

#"sample-A1","sample-A2","sample-A5","sample-A6","sample-A7","sample-A8","sample-A9","sample-A10", #RESIZED: "sample-A3","sample-A4","sample-A11",

#"sample-B1","sample-B2","sample-B3","sample-B4","sample-B5","sample-B6","sample-B7", #RESIZED: "sample-B8","sample-B9","sample-B10","sample-B11","sample-B12","sample-B13","sample-B14","sample-B15","sample-B16",

#"sample-C1","sample-C2","sample-C3","sample-C4","sample-C5","sample-C6","sample-C7", #RESIZED: "sample-C8","sample-C9","sample-C10",

#"sample-E1","sample-E2","sample-E3","sample-E4","sample-E5","sample-E6","sample-E7","sample-E8","sample-E9","sample-E10", #RESIZED:

#"sample-F1","sample-F2","sample-F3","sample-F4","sample-F5",

"sample-G1","sample-G2","sample-G3","sample-G4","sample-G5","sample-G6",

"sample-H1","sample-H2","sample-H3","sample-H4","sample-H5","sample-H6",

"sample-I1","sample-I2","sample-I3","sample-I4","sample-I5","sample-I6",

"sample-J1","sample-J2","sample-J3","sample-J4","sample-J10","sample-J11", #RESIZED: "sample-J5","sample-J8","sample-J9", "sample-J6","sample-J7",

"sample-K1","sample-K2","sample-K3","sample-K4","sample-K5","sample-K6", #RESIZED: "sample-K7","sample-K8","sample-K9","sample-K10", "sample-K11","sample-K12","sample-K13","sample-K14","sample-K15",

"sample-L2","sample-L3","sample-L4","sample-L5","sample-L6" #RESIZED:"sample-L1","sample-L7","sample-L8","sample-L10","sample-L11","sample-L12","sample-L13","sample-L14","sample-L15",

#"sample-M1","sample-M2","sample-M3","sample-M4","sample-M5","sample-M6","sample-M7","sample-M8",

#"sample-N1","sample-N2","sample-N3","sample-N4","sample-N5","sample-N6","sample-N7","sample-N8","sample-N9","sample-N10",

#"sample-O1","sample-O2","sample-O3","sample-O4","sample-O5","sample-O6","sample-O7","sample-O8"

)

ps_pruned <- prune_samples(samples, ps_base)

sample_names(ps_pruned)

rank_names(ps_pruned)

sample_variables(ps_pruned)

No samples were excluded as low-depth outliers (library sizes below the minimum depth threshold of 1,000 reads), and the remaining dataset (ps_filt) contains only samples meeting this depth cutoff with taxa retained only if they have nonzero total counts.

# ------------------------------------------------------------

# Filter low-depth samples (recommended for all analyses)

# ------------------------------------------------------------

min_depth <- 1000 # <-- adjust to your data / study design, keeps all!

ps_filt <- prune_samples(sample_sums(ps_pruned) >= min_depth, ps_pruned)

ps_filt <- prune_taxa(taxa_sums(ps_filt) > 0, ps_filt)

# Keep a depth summary for reporting / QC

depth_summary <- summary(sample_sums(ps_filt))

depth_summary

Differential abundance (DESeq2) → ps_deseq: non-rarefied integer counts derived from ps_filt, with optional count-based taxon prefilter

(default: taxa total counts ≥ 10 across all samples)

From ps_filt (e.g. 5669 taxa and 239 samples), we branch into analysis-ready objects in two directions:

Normalize number of reads in each sample using median sequencing depth.

# RAREFACTION

set.seed(9242) # This will help in reproducing the filtering and nomalisation.

ps_rarefied <- rarefy_even_depth(ps_filt, sample.size = 6389)

#total <- 6389

# # NORMALIZE number of reads in each sample using median sequencing depth.

# total = median(sample_sums(ps.ng.tax))

# #> total

# #[1] 42369

# standf = function(x, t=total) round(t * (x / sum(x)))

# ps.ng.tax = transform_sample_counts(ps.ng.tax, standf)

# ps_rel <- microbiome::transform(ps.ng.tax, "compositional")

#

# saveRDS(ps.ng.tax, "./ps.ng.tax.rds")

For the heatmaps, we focus on the most abundant OTUs by first converting counts to relative abundances within each sample. We then filter to retain only OTUs whose mean relative abundance across all samples exceeds 0.1% (0.001). We are left with 199 OTUs which makes the reading much more easy.

# 1) Convert to relative abundances

ps_rel <- transform_sample_counts(ps_filt, function(x) x / sum(x))

# 2) Get the logical vector of which OTUs to keep (based on relative abundance)

keep_vector <- phyloseq::filter_taxa(

ps_rel,

function(x) mean(x) > 0.001,

prune = FALSE

)

# 3) Use the TRUE/FALSE vector to subset absolute abundance data

ps_abund <- prune_taxa(names(keep_vector)[keep_vector], ps_filt)

# 4) Normalize the final subset to relative abundances per sample

ps_abund_rel <- transform_sample_counts(

ps_abund,

function(x) x / sum(x)

)

library(stringr)

#for id in 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157 158 159 160 161 162 163 164 165 166 167 168 169 170 171 172 173 174 175 176 177 178 179 180 181 182 183 184 185 186 187 188 189 190 191 192 193 194 195 196 197 198 199 200 201 202 203 204 205 206; do

#for id in 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62; do

# echo "phyloseq::tax_table(ps_abund_rel)[${id},\"Domain\"] <- str_split(phyloseq::tax_table(ps_abund_rel)[${id},\"Domain\"], \"__\")[[1]][2]"

# echo "phyloseq::tax_table(ps_abund_rel)[${id},\"Phylum\"] <- str_split(phyloseq::tax_table(ps_abund_rel)[${id},\"Phylum\"], \"__\")[[1]][2]"

# echo "phyloseq::tax_table(ps_abund_rel)[${id},\"Class\"] <- str_split(phyloseq::tax_table(ps_abund_rel)[${id},\"Class\"], \"__\")[[1]][2]"

# echo "phyloseq::tax_table(ps_abund_rel)[${id},\"Order\"] <- str_split(phyloseq::tax_table(ps_abund_rel)[${id},\"Order\"], \"__\")[[1]][2]"

# echo "phyloseq::tax_table(ps_abund_rel)[${id},\"Family\"] <- str_split(phyloseq::tax_table(ps_abund_rel)[${id},\"Family\"], \"__\")[[1]][2]"

# echo "phyloseq::tax_table(ps_abund_rel)[${id},\"Genus\"] <- str_split(phyloseq::tax_table(ps_abund_rel)[${id},\"Genus\"], \"__\")[[1]][2]"

# echo "phyloseq::tax_table(ps_abund_rel)[${id},\"Species\"] <- str_split(phyloseq::tax_table(ps_abund_rel)[${id},\"Species\"], \"__\")[[1]][2]"

#done

phyloseq::tax_table(ps_abund_rel)[1,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[1,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[1,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[1,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[1,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[1,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[1,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[1,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[1,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[1,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[1,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[1,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[1,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[1,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[2,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[2,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[2,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[2,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[2,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[2,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[2,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[2,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[2,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[2,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[2,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[2,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[2,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[2,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[3,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[3,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[3,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[3,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[3,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[3,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[3,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[3,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[3,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[3,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[3,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[3,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[3,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[3,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[4,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[4,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[4,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[4,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[4,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[4,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[4,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[4,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[4,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[4,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[4,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[4,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[4,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[4,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[5,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[5,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[5,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[5,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[5,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[5,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[5,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[5,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[5,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[5,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[5,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[5,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[5,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[5,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[6,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[6,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[6,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[6,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[6,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[6,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[6,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[6,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[6,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[6,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[6,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[6,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[6,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[6,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[7,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[7,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[7,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[7,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[7,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[7,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[7,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[7,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[7,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[7,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[7,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[7,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[7,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[7,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[8,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[8,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[8,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[8,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[8,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[8,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[8,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[8,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[8,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[8,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[8,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[8,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[8,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[8,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[9,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[9,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[9,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[9,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[9,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[9,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[9,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[9,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[9,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[9,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[9,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[9,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[9,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[9,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[10,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[10,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[10,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[10,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[10,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[10,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[10,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[10,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[10,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[10,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[10,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[10,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[10,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[10,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[11,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[11,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[11,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[11,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[11,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[11,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[11,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[11,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[11,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[11,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[11,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[11,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[11,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[11,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[12,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[12,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[12,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[12,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[12,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[12,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[12,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[12,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[12,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[12,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[12,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[12,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[12,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[12,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[13,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[13,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[13,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[13,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[13,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[13,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[13,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[13,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[13,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[13,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[13,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[13,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[13,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[13,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[14,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[14,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[14,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[14,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[14,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[14,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[14,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[14,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[14,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[14,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[14,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[14,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[14,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[14,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[15,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[15,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[15,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[15,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[15,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[15,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[15,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[15,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[15,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[15,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[15,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[15,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[15,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[15,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[16,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[16,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[16,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[16,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[16,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[16,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[16,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[16,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[16,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[16,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[16,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[16,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[16,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[16,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[17,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[17,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[17,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[17,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[17,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[17,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[17,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[17,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[17,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[17,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[17,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[17,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[17,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[17,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[18,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[18,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[18,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[18,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[18,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[18,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[18,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[18,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[18,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[18,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[18,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[18,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[18,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[18,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[19,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[19,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[19,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[19,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[19,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[19,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[19,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[19,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[19,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[19,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[19,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[19,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[19,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[19,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[20,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[20,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[20,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[20,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[20,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[20,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[20,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[20,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[20,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[20,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[20,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[20,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[20,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[20,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[21,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[21,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[21,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[21,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[21,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[21,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[21,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[21,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[21,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[21,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[21,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[21,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[21,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[21,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[22,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[22,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[22,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[22,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[22,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[22,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[22,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[22,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[22,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[22,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[22,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[22,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[22,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[22,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[23,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[23,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[23,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[23,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[23,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[23,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[23,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[23,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[23,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[23,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[23,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[23,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[23,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[23,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[24,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[24,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[24,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[24,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[24,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[24,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[24,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[24,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[24,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[24,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[24,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[24,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[24,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[24,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[25,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[25,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[25,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[25,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[25,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[25,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[25,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[25,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[25,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[25,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[25,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[25,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[25,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[25,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[26,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[26,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[26,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[26,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[26,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[26,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[26,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[26,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[26,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[26,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[26,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[26,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[26,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[26,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[27,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[27,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[27,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[27,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[27,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[27,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[27,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[27,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[27,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[27,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[27,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[27,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[27,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[27,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[28,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[28,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[28,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[28,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[28,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[28,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[28,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[28,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[28,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[28,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[28,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[28,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[28,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[28,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[29,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[29,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[29,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[29,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[29,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[29,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[29,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[29,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[29,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[29,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[29,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[29,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[29,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[29,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[30,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[30,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[30,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[30,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[30,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[30,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[30,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[30,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[30,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[30,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[30,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[30,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[30,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[30,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[31,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[31,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[31,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[31,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[31,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[31,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[31,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[31,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[31,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[31,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[31,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[31,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[31,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[31,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[32,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[32,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[32,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[32,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[32,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[32,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[32,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[32,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[32,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[32,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[32,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[32,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[32,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[32,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[33,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[33,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[33,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[33,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[33,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[33,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[33,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[33,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[33,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[33,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[33,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[33,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[33,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[33,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[34,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[34,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[34,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[34,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[34,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[34,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[34,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[34,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[34,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[34,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[34,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[34,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[34,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[34,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[35,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[35,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[35,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[35,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[35,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[35,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[35,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[35,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[35,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[35,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[35,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[35,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[35,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[35,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[36,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[36,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[36,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[36,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[36,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[36,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[36,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[36,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[36,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[36,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[36,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[36,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[36,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[36,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[37,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[37,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[37,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[37,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[37,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[37,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[37,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[37,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[37,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[37,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[37,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[37,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[37,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[37,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[38,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[38,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[38,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[38,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[38,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[38,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[38,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[38,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[38,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[38,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[38,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[38,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[38,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[38,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[39,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[39,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[39,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[39,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[39,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[39,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[39,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[39,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[39,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[39,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[39,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[39,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[39,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[39,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[40,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[40,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[40,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[40,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[40,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[40,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[40,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[40,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[40,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[40,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[40,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[40,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[40,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[40,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[41,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[41,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[41,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[41,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[41,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[41,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[41,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[41,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[41,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[41,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[41,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[41,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[41,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[41,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[42,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[42,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[42,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[42,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[42,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[42,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[42,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[42,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[42,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[42,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[42,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[42,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[42,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[42,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[43,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[43,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[43,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[43,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[43,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[43,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[43,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[43,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[43,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[43,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[43,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[43,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[43,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[43,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[44,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[44,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[44,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[44,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[44,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[44,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[44,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[44,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[44,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[44,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[44,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[44,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[44,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[44,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[45,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[45,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[45,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[45,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[45,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[45,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[45,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[45,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[45,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[45,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[45,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[45,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[45,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[45,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[46,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[46,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[46,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[46,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[46,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[46,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[46,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[46,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[46,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[46,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[46,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[46,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[46,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[46,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[47,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[47,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[47,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[47,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[47,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[47,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[47,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[47,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[47,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[47,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[47,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[47,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[47,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[47,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[48,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[48,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[48,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[48,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[48,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[48,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[48,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[48,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[48,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[48,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[48,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[48,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[48,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[48,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[49,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[49,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[49,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[49,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[49,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[49,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[49,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[49,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[49,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[49,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[49,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[49,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[49,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[49,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[50,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[50,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[50,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[50,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[50,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[50,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[50,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[50,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[50,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[50,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[50,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[50,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[50,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[50,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[51,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[51,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[51,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[51,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[51,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[51,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[51,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[51,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[51,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[51,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[51,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[51,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[51,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[51,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[52,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[52,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[52,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[52,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[52,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[52,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[52,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[52,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[52,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[52,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[52,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[52,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[52,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[52,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[53,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[53,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[53,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[53,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[53,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[53,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[53,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[53,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[53,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[53,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[53,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[53,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[53,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[53,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[54,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[54,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[54,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[54,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[54,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[54,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[54,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[54,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[54,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[54,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[54,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[54,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[54,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[54,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[55,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[55,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[55,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[55,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[55,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[55,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[55,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[55,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[55,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[55,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[55,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[55,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[55,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[55,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[56,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[56,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[56,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[56,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[56,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[56,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[56,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[56,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[56,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[56,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[56,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[56,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[56,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[56,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[57,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[57,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[57,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[57,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[57,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[57,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[57,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[57,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[57,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[57,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[57,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[57,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[57,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[57,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[58,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[58,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[58,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[58,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[58,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[58,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[58,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[58,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[58,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[58,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[58,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[58,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[58,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[58,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[59,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[59,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[59,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[59,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[59,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[59,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[59,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[59,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[59,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[59,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[59,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[59,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[59,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[59,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[60,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[60,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[60,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[60,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[60,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[60,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[60,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[60,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[60,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[60,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[60,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[60,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[60,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[60,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[61,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[61,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[61,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[61,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[61,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[61,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[61,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[61,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[61,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[61,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[61,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[61,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[61,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[61,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[62,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[62,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[62,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[62,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[62,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[62,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[62,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[62,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[62,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[62,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[62,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[62,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[62,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[62,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[63,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[63,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[63,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[63,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[63,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[63,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[63,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[63,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[63,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[63,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[63,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[63,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[63,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[63,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[64,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[64,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[64,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[64,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[64,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[64,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[64,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[64,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[64,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[64,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[64,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[64,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[64,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[64,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[65,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[65,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[65,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[65,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[65,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[65,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[65,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[65,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[65,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[65,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[65,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[65,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[65,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[65,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[66,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[66,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[66,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[66,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[66,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[66,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[66,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[66,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[66,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[66,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[66,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[66,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[66,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[66,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[67,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[67,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[67,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[67,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[67,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[67,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[67,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[67,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[67,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[67,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[67,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[67,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[67,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[67,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[68,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[68,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[68,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[68,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[68,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[68,"Class"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[68,"Order"] <- str_split(phyloseq::tax_table(ps_abund_rel)[68,"Order"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[68,"Family"] <- str_split(phyloseq::tax_table(ps_abund_rel)[68,"Family"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[68,"Genus"] <- str_split(phyloseq::tax_table(ps_abund_rel)[68,"Genus"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[68,"Species"] <- str_split(phyloseq::tax_table(ps_abund_rel)[68,"Species"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[69,"Domain"] <- str_split(phyloseq::tax_table(ps_abund_rel)[69,"Domain"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[69,"Phylum"] <- str_split(phyloseq::tax_table(ps_abund_rel)[69,"Phylum"], "__")[[1]][2]

phyloseq::tax_table(ps_abund_rel)[69,"Class"] <- str_split(phyloseq::tax_table(ps_abund_rel)[69,"Class"], "__")[[1]][2]