Category Archives: Articles

FASTQ / raw sequencing datasets overview (T. and F.)

1) Per-dataset sample inventory (compact lists)

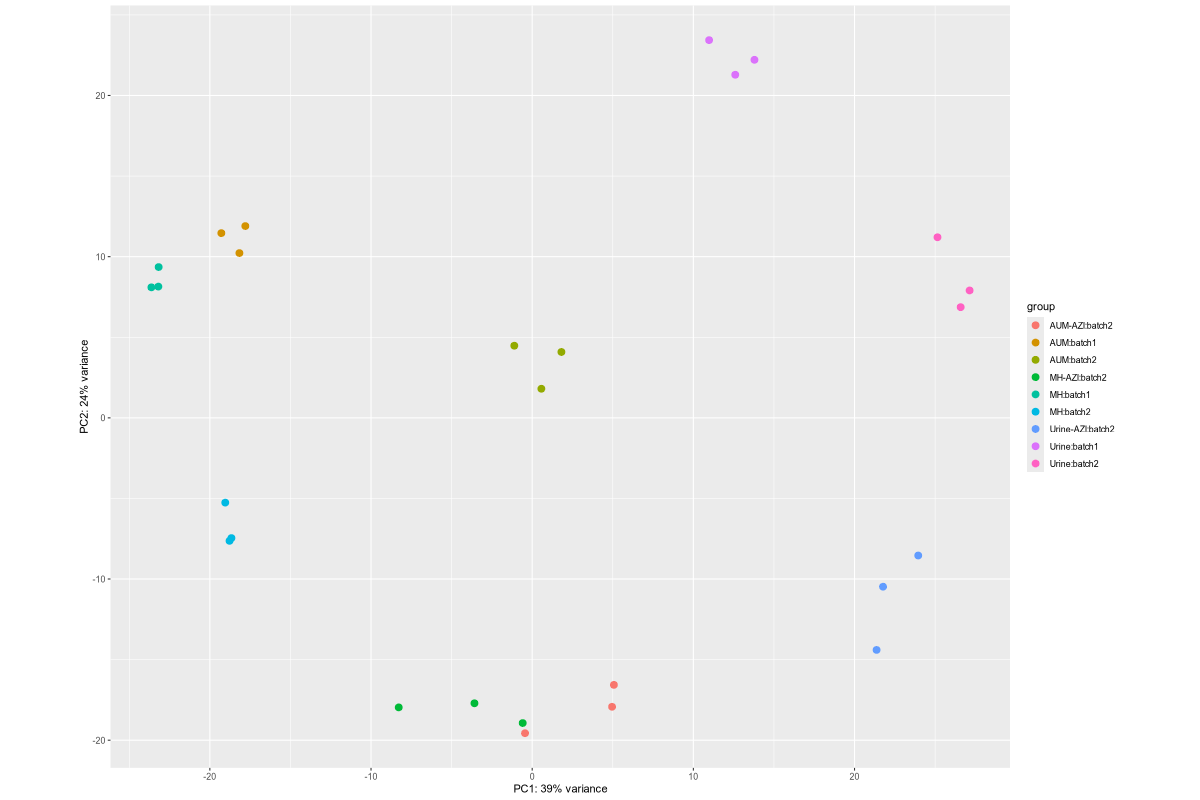

1. Data_Tam_RNAseq_2024_AUM_MHB_Urine_on_ATCC19606

X101SC24105589-Z01-J001:AUM-1..3,MHB-1..3,Urine-1..3(all PE)X101SC25062155-Z01-J002:AUM-1..3,AUM-AZI-1..3,MH-1..3,MH-AZI-1..3,Urine-1..3,Urine-AZI-1..3(all PE)

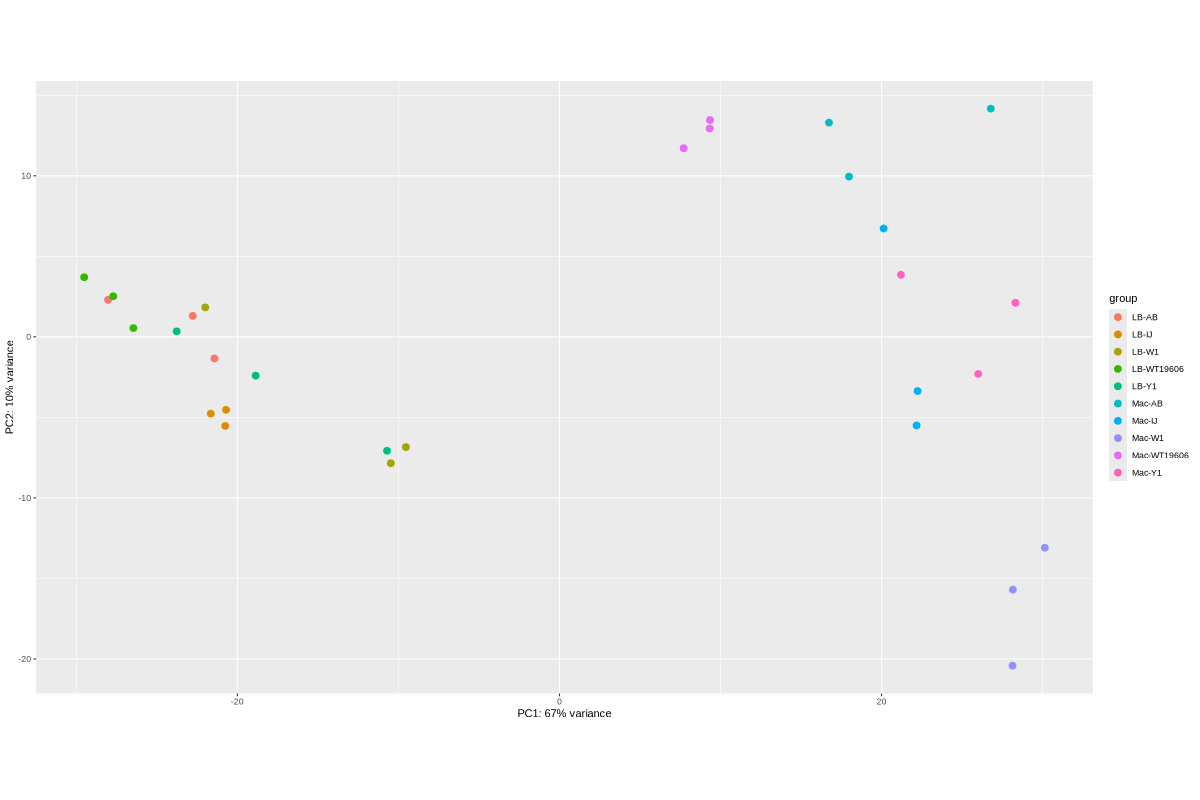

2. Data_Tam_RNAseq_2025_LB-AB_IJ_W1_Y1_WT_vs_Mac-AB_IJ_W1_Y1_WT_on_ATCC19606

- LB:

LB-AB-1..3,LB-IJ-(1,2,4),LB-W1-1..3,LB-WT19606-2..4,LB-Y1-2..4 - Mac:

Mac-AB-1..3,Mac-IJ-(1,2,4),Mac-W1-1..3,Mac-WT19606-2..4,Mac-Y1-2..4

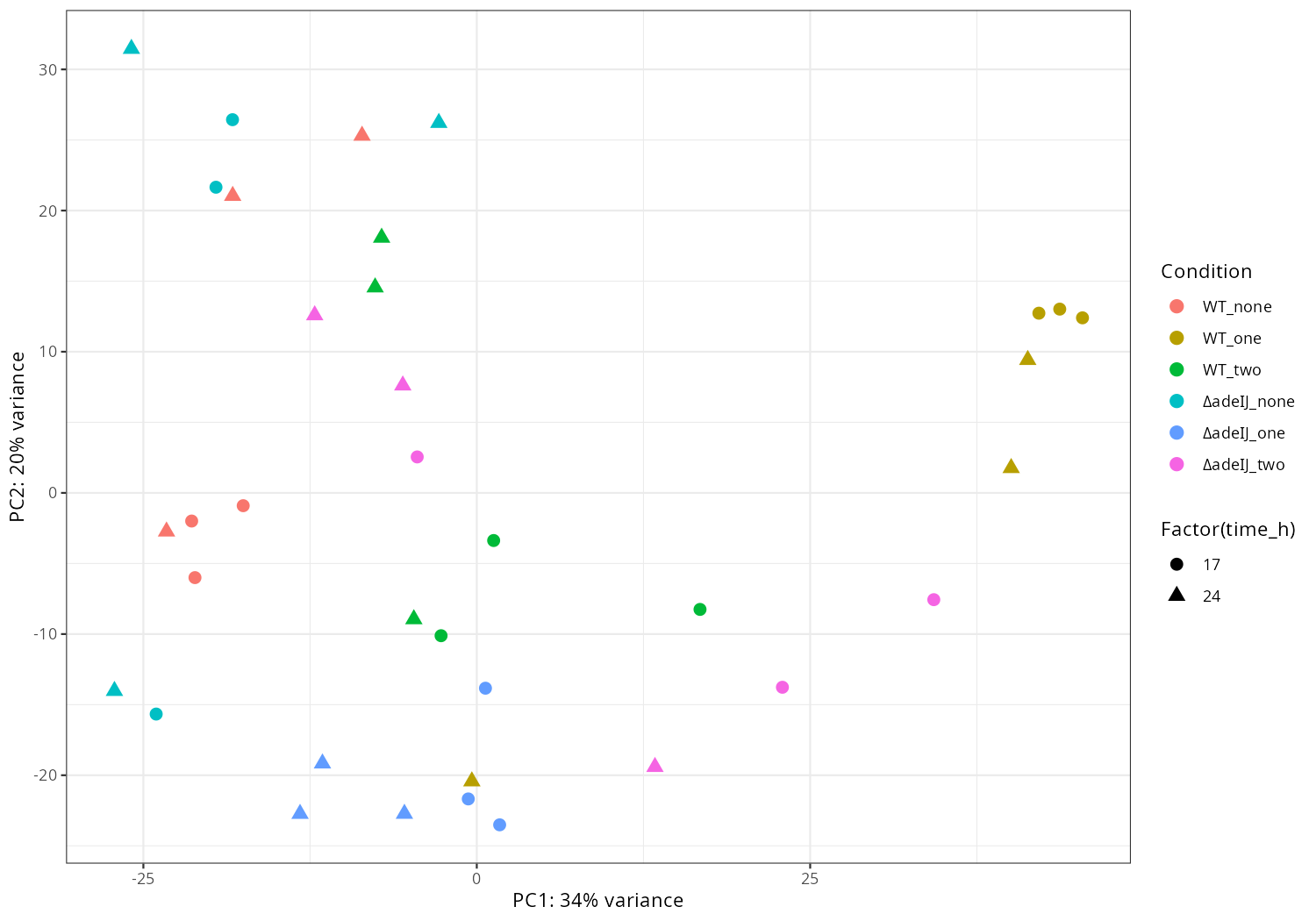

3. Data_Tam_RNAseq_2025_subMIC_exposure_on_ATCC19606

Each with reps -1..-3 (all PE):

0_5ΔIJ-17,0_5ΔIJ-24preWT-17,preWT-24preΔIJ-17,preΔIJ-24WT0_5-17,WT0_5-24WT-17,WT-24ΔIJ-17,ΔIJ-24

4. Data_Tam_DNAseq_2023_lab_strains

- A6WT – Acinetobacter baumannii ATCC19606

- A10CraA – Acinetobacter baumannii ATCC19606

- A12AYE – Acinetobacter baumannii AYE

- A1917978 – Acinetobacter baumannii ATCC17978

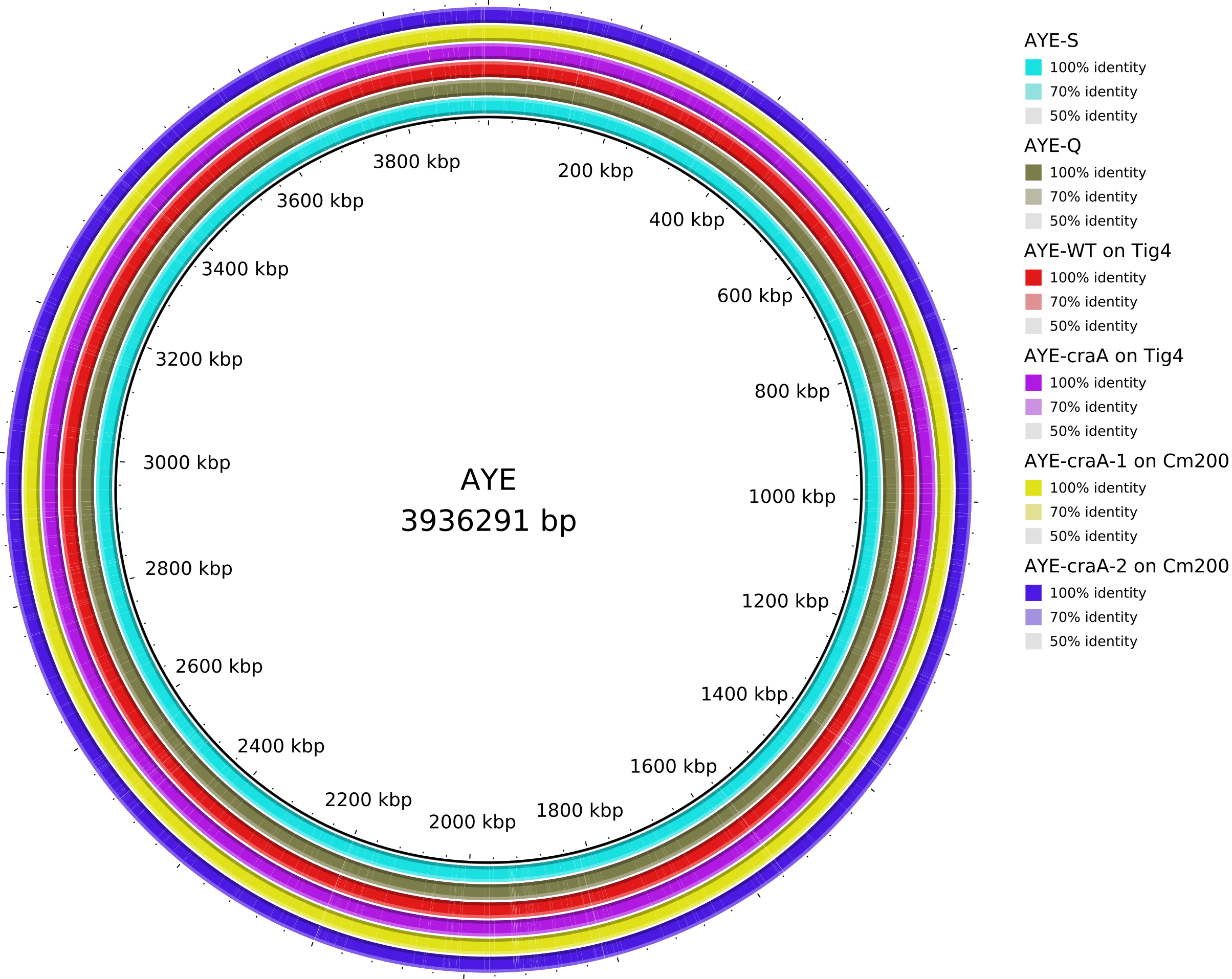

5. Data_Tam_DNAseq_2025_AYE-WT_Q_S_craA-Tig4_craA-1-Cm200_craA-2-Cm200

AYE-Q,AYE-S,AYE-WTonTig4,AYE-craAonTig4,AYE-craA-1onCm200,AYE-craA-2onCm200,clinical(all PE)

6. Data_Tam_DNAseq_2025_E.hormaechei-adeABadeIJ_adeIJK_CM1_CM2_on_ATCC19606

adeABadeIJ,adeIJK,CM1,CM2,HF(all PE)

7. Data_Tam_DNAseq_2025_ATCC19606-Y1Y2Y3Y4W1W2W3W4

- Illumina PE:

△adeIJ,Tig1,Tig2,W,W2,W3,W4,Y,Y2,Y3,Y4 -

Nanopore (

*_fastq_pass.tar):W1(3 tar files),W2(1),W3(2),W4(1)Y1(3),Y2(1),Y3(1),Y4(1)

8. Data_Tam_DNAseq_2026_19606deltaIJfluE

All PE; grouped by background:

19606△ABfluE:cef-1,cipro-2,dori-2,nitro-3,pip-1,polyB-3,tet-119606△IJfluE:cef-4,cipro-3,dori-1,nitro-3,pip-4,polyB-419606wtfluE:cef-1,cipro-2,dori-1,nitro-1,pip-4,polyB-4,tet-2

9. Data_Tam_DNAseq_2026_Acinetobacter_harbinensis

An6(PE)

10. Data_Tam_Metagenomics_2026

A1,A1a,A2,B1,B2(PE)

11. Data_Foong_RNAseq_2021_ATCC19606_Cm (mapping list provided)

- Batch1:

WT_1,WT_2B,C_1B,C_2,J_1,J_2 - Batch2:

Control,WT_1B,WT_2B,WT_3B,Cra_1,Cra_2,Cra_3,IJ_1B,IJ_2B,IJ_3 - Batch3:

adIJ_1,adIJ_2,crA2,crA_ab_1,crA_ab_2,crA_ab_3,adAB_1,adAB_2,adAB_ab1,adAB_ab2,adAB_ab3

12. Data_Foong_DNAseq_2025_AYE_Dark_vs_Light

Dark,Light(PE)

2) Dataset-level summary (quick lookup)

| Dataset folder | Year | Data type | Platform / format | Run / project IDs present | Samples (n) | Files (n) | Sample groups / notes |

|---|---|---|---|---|---|---|---|

Data_Tam_RNAseq_2024_AUM_MHB_Urine_on_ATCC19606/ |

2024 | RNA-seq | Illumina PE (*_1.fq.gz, *_2.fq.gz) |

X101SC24105589-Z01-J001, X101SC25062155-Z01-J002 |

27 | 54 | J001: AUM/MHB/Urine (each 1–3). J002: AUM, AUM-AZI, MH, MH-AZI, Urine, Urine-AZI (each 1–3). |

Data_Tam_RNAseq_2025_LB-AB_IJ_W1_Y1_WT_vs_Mac-AB_IJ_W1_Y1_WT_on_ATCC19606/ |

2025 | RNA-seq | Illumina PE | X101SC25015922-Z02-J002 |

30 | 60 | LB vs Mac sets; conditions AB, IJ, W1, Y1, WT19606 with listed replicates (mostly 1–3 or 2–4; IJ uses 1,2,4). |

Data_Tam_RNAseq_2025_subMIC_exposure_on_ATCC19606/ |

2025 | RNA-seq | Illumina PE | X101SC25062155-Z01-J001 |

36 | 72 | 12 condition blocks × 3 reps: preWT, preΔIJ, WT, ΔIJ, WT0_5, 0_5ΔIJ at timepoints 17 and 24. |

Data_Tam_DNAseq_2025_ATCC19606-Y1Y2Y3Y4W1W2W3W4/ |

2025 | DNA-seq | Illumina PE + Nanopore (*_fastq_pass.tar) |

Illumina: X101SC24065637-Z01-J001/J002; Nanopore: X101SC25080408-Z01-J001 |

11 (Illumina) + 13 tar archives | 22 + 13 | Illumina: △adeIJ, Tig1, Tig2, W, W2–W4, Y, Y2–Y4. Nanopore: W1(3), W2(1), W3(2), W4(1), Y1(3), Y2(1), Y3(1), Y4(1) tar files. |

Data_Tam_DNAseq_2025_AYE-WT_Q_S_craA-Tig4_craA-1-Cm200_craA-2-Cm200/ |

2025 | DNA-seq | Illumina PE | X101SC25015922-Z01-J001 |

7 | 14 | AYE variants: AYE-Q, AYE-S, AYE-WTonTig4, AYE-craAonTig4, AYE-craA-1onCm200, AYE-craA-2onCm200, plus clinical. |

Data_Tam_DNAseq_2025_E.hormaechei-adeABadeIJ_adeIJK_CM1_CM2 |

2025 | DNA-seq | Illumina PE | X101SC24115801-Z01-J001 |

5 | 10 | adeABadeIJ, adeIJK, CM1, CM2, HF. |

Data_Tam_DNAseq_2026_19606deltaIJfluE/ |

2026 | DNA-seq | Illumina PE | X101SC25116512-Z01-J003 |

20 | 40 | Three backgrounds: 19606△ABfluE* (7), 19606△IJfluE* (6), 19606wtfluE* (7) across drug tags (cef/cipro/dori/nitro/pip/polyB/tet) with replicate suffixes. |

Data_Tam_DNAseq_2026_Acinetobacter_harbinensis/ |

2026 | DNA-seq | Illumina PE | X101SC25116512-Z01-J002 |

1 | 2 | An6 (paired-end). |

Data_Tam_Metagenomics_2026/ |

2026 | Metagenomics | Illumina PE | X101SC25123808-Z01-J001 |

5 | 10 | A1, A1a, A2, B1, B2. |

Data_Foong_RNAseq_2021_ATCC19606_Cm/ |

2021 | RNA-seq | Illumina PE (symlink/mapping list shown) | (paths point to raw_data_batch1/2/3) |

27 | 54 | Batch1: WT/craA/adeIJ (each 2 reps). Batch2: Control + WT.abx + craA.abx + adeIJ.abx (various reps). Batch3: adeIJ, craA, craA.abx, adeAB, adeAB.abx (various reps). |

Data_Foong_DNAseq_2025_AYE_Dark_vs_Light/ |

2025 | DNA-seq | Illumina PE | X101SC25116512-Z01-J001 |

2 | 4 | Dark, Light. |

3) Complete list

Data_Tam_RNAseq_2024_AUM_MHB_Urine_on_ATCC19606/

./X101SC24105589-Z01-J001/01.RawData/AUM-1/AUM-1_1.fq.gz

./X101SC24105589-Z01-J001/01.RawData/AUM-1/AUM-1_2.fq.gz

./X101SC24105589-Z01-J001/01.RawData/AUM-2/AUM-2_1.fq.gz

./X101SC24105589-Z01-J001/01.RawData/AUM-2/AUM-2_2.fq.gz

./X101SC24105589-Z01-J001/01.RawData/AUM-3/AUM-3_1.fq.gz

./X101SC24105589-Z01-J001/01.RawData/AUM-3/AUM-3_2.fq.gz

./X101SC24105589-Z01-J001/01.RawData/MHB-1/MHB-1_1.fq.gz

./X101SC24105589-Z01-J001/01.RawData/MHB-1/MHB-1_2.fq.gz

./X101SC24105589-Z01-J001/01.RawData/MHB-2/MHB-2_1.fq.gz

./X101SC24105589-Z01-J001/01.RawData/MHB-2/MHB-2_2.fq.gz

./X101SC24105589-Z01-J001/01.RawData/MHB-3/MHB-3_1.fq.gz

./X101SC24105589-Z01-J001/01.RawData/MHB-3/MHB-3_2.fq.gz

./X101SC24105589-Z01-J001/01.RawData/Urine-1/Urine-1_1.fq.gz

./X101SC24105589-Z01-J001/01.RawData/Urine-1/Urine-1_2.fq.gz

./X101SC24105589-Z01-J001/01.RawData/Urine-2/Urine-2_1.fq.gz

./X101SC24105589-Z01-J001/01.RawData/Urine-2/Urine-2_2.fq.gz

./X101SC24105589-Z01-J001/01.RawData/Urine-3/Urine-3_1.fq.gz

./X101SC24105589-Z01-J001/01.RawData/Urine-3/Urine-3_2.fq.gz

./X101SC25062155-Z01-J002/01.RawData/AUM-1/AUM-1_1.fq.gz

./X101SC25062155-Z01-J002/01.RawData/AUM-1/AUM-1_2.fq.gz

./X101SC25062155-Z01-J002/01.RawData/AUM-2/AUM-2_1.fq.gz

./X101SC25062155-Z01-J002/01.RawData/AUM-2/AUM-2_2.fq.gz

./X101SC25062155-Z01-J002/01.RawData/AUM-3/AUM-3_1.fq.gz

./X101SC25062155-Z01-J002/01.RawData/AUM-3/AUM-3_2.fq.gz

./X101SC25062155-Z01-J002/01.RawData/AUM-AZI-1/AUM-AZI-1_1.fq.gz

./X101SC25062155-Z01-J002/01.RawData/AUM-AZI-1/AUM-AZI-1_2.fq.gz

./X101SC25062155-Z01-J002/01.RawData/AUM-AZI-2/AUM-AZI-2_1.fq.gz

./X101SC25062155-Z01-J002/01.RawData/AUM-AZI-2/AUM-AZI-2_2.fq.gz

./X101SC25062155-Z01-J002/01.RawData/AUM-AZI-3/AUM-AZI-3_1.fq.gz

./X101SC25062155-Z01-J002/01.RawData/AUM-AZI-3/AUM-AZI-3_2.fq.gz

./X101SC25062155-Z01-J002/01.RawData/MH-1/MH-1_1.fq.gz

./X101SC25062155-Z01-J002/01.RawData/MH-1/MH-1_2.fq.gz

./X101SC25062155-Z01-J002/01.RawData/MH-2/MH-2_1.fq.gz

./X101SC25062155-Z01-J002/01.RawData/MH-2/MH-2_2.fq.gz

./X101SC25062155-Z01-J002/01.RawData/MH-3/MH-3_1.fq.gz

./X101SC25062155-Z01-J002/01.RawData/MH-3/MH-3_2.fq.gz

./X101SC25062155-Z01-J002/01.RawData/MH-AZI-1/MH-AZI-1_1.fq.gz

./X101SC25062155-Z01-J002/01.RawData/MH-AZI-1/MH-AZI-1_2.fq.gz

./X101SC25062155-Z01-J002/01.RawData/MH-AZI-2/MH-AZI-2_1.fq.gz

./X101SC25062155-Z01-J002/01.RawData/MH-AZI-2/MH-AZI-2_2.fq.gz

./X101SC25062155-Z01-J002/01.RawData/MH-AZI-3/MH-AZI-3_1.fq.gz

./X101SC25062155-Z01-J002/01.RawData/MH-AZI-3/MH-AZI-3_2.fq.gz

./X101SC25062155-Z01-J002/01.RawData/Urine-1/Urine-1_1.fq.gz

./X101SC25062155-Z01-J002/01.RawData/Urine-1/Urine-1_2.fq.gz

./X101SC25062155-Z01-J002/01.RawData/Urine-2/Urine-2_1.fq.gz

./X101SC25062155-Z01-J002/01.RawData/Urine-2/Urine-2_2.fq.gz

./X101SC25062155-Z01-J002/01.RawData/Urine-3/Urine-3_1.fq.gz

./X101SC25062155-Z01-J002/01.RawData/Urine-3/Urine-3_2.fq.gz

./X101SC25062155-Z01-J002/01.RawData/Urine-AZI-1/Urine-AZI-1_1.fq.gz

./X101SC25062155-Z01-J002/01.RawData/Urine-AZI-1/Urine-AZI-1_2.fq.gz

./X101SC25062155-Z01-J002/01.RawData/Urine-AZI-2/Urine-AZI-2_1.fq.gz

./X101SC25062155-Z01-J002/01.RawData/Urine-AZI-2/Urine-AZI-2_2.fq.gz

./X101SC25062155-Z01-J002/01.RawData/Urine-AZI-3/Urine-AZI-3_1.fq.gz

./X101SC25062155-Z01-J002/01.RawData/Urine-AZI-3/Urine-AZI-3_2.fq.gz

Data_Tam_RNAseq_2025_LB-AB_IJ_W1_Y1_WT_vs_Mac-AB_IJ_W1_Y1_WT_on_ATCC19606/

./X101SC25015922-Z02-J002/01.RawData/LB-AB-1/LB-AB-1_1.fq.gz

./X101SC25015922-Z02-J002/01.RawData/LB-AB-1/LB-AB-1_2.fq.gz

./X101SC25015922-Z02-J002/01.RawData/LB-AB-2/LB-AB-2_1.fq.gz

./X101SC25015922-Z02-J002/01.RawData/LB-AB-2/LB-AB-2_2.fq.gz

./X101SC25015922-Z02-J002/01.RawData/LB-AB-3/LB-AB-3_1.fq.gz

./X101SC25015922-Z02-J002/01.RawData/LB-AB-3/LB-AB-3_2.fq.gz

./X101SC25015922-Z02-J002/01.RawData/LB-IJ-1/LB-IJ-1_1.fq.gz

./X101SC25015922-Z02-J002/01.RawData/LB-IJ-1/LB-IJ-1_2.fq.gz

./X101SC25015922-Z02-J002/01.RawData/LB-IJ-2/LB-IJ-2_1.fq.gz

./X101SC25015922-Z02-J002/01.RawData/LB-IJ-2/LB-IJ-2_2.fq.gz

./X101SC25015922-Z02-J002/01.RawData/LB-IJ-4/LB-IJ-4_1.fq.gz

./X101SC25015922-Z02-J002/01.RawData/LB-IJ-4/LB-IJ-4_2.fq.gz

./X101SC25015922-Z02-J002/01.RawData/LB-W1-1/LB-W1-1_1.fq.gz

./X101SC25015922-Z02-J002/01.RawData/LB-W1-1/LB-W1-1_2.fq.gz

./X101SC25015922-Z02-J002/01.RawData/LB-W1-2/LB-W1-2_1.fq.gz

./X101SC25015922-Z02-J002/01.RawData/LB-W1-2/LB-W1-2_2.fq.gz

./X101SC25015922-Z02-J002/01.RawData/LB-W1-3/LB-W1-3_1.fq.gz

./X101SC25015922-Z02-J002/01.RawData/LB-W1-3/LB-W1-3_2.fq.gz

./X101SC25015922-Z02-J002/01.RawData/LB-WT19606-2/LB-WT19606-2_1.fq.gz

./X101SC25015922-Z02-J002/01.RawData/LB-WT19606-2/LB-WT19606-2_2.fq.gz

./X101SC25015922-Z02-J002/01.RawData/LB-WT19606-3/LB-WT19606-3_1.fq.gz

./X101SC25015922-Z02-J002/01.RawData/LB-WT19606-3/LB-WT19606-3_2.fq.gz

./X101SC25015922-Z02-J002/01.RawData/LB-WT19606-4/LB-WT19606-4_1.fq.gz

./X101SC25015922-Z02-J002/01.RawData/LB-WT19606-4/LB-WT19606-4_2.fq.gz

./X101SC25015922-Z02-J002/01.RawData/LB-Y1-2/LB-Y1-2_1.fq.gz

./X101SC25015922-Z02-J002/01.RawData/LB-Y1-2/LB-Y1-2_2.fq.gz

./X101SC25015922-Z02-J002/01.RawData/LB-Y1-3/LB-Y1-3_1.fq.gz

./X101SC25015922-Z02-J002/01.RawData/LB-Y1-3/LB-Y1-3_2.fq.gz

./X101SC25015922-Z02-J002/01.RawData/LB-Y1-4/LB-Y1-4_1.fq.gz

./X101SC25015922-Z02-J002/01.RawData/LB-Y1-4/LB-Y1-4_2.fq.gz

./X101SC25015922-Z02-J002/01.RawData/Mac-AB-1/Mac-AB-1_1.fq.gz

./X101SC25015922-Z02-J002/01.RawData/Mac-AB-1/Mac-AB-1_2.fq.gz

./X101SC25015922-Z02-J002/01.RawData/Mac-AB-2/Mac-AB-2_1.fq.gz

./X101SC25015922-Z02-J002/01.RawData/Mac-AB-2/Mac-AB-2_2.fq.gz

./X101SC25015922-Z02-J002/01.RawData/Mac-AB-3/Mac-AB-3_1.fq.gz

./X101SC25015922-Z02-J002/01.RawData/Mac-AB-3/Mac-AB-3_2.fq.gz

./X101SC25015922-Z02-J002/01.RawData/Mac-IJ-1/Mac-IJ-1_1.fq.gz

./X101SC25015922-Z02-J002/01.RawData/Mac-IJ-1/Mac-IJ-1_2.fq.gz

./X101SC25015922-Z02-J002/01.RawData/Mac-IJ-2/Mac-IJ-2_1.fq.gz

./X101SC25015922-Z02-J002/01.RawData/Mac-IJ-2/Mac-IJ-2_2.fq.gz

./X101SC25015922-Z02-J002/01.RawData/Mac-IJ-4/Mac-IJ-4_1.fq.gz

./X101SC25015922-Z02-J002/01.RawData/Mac-IJ-4/Mac-IJ-4_2.fq.gz

./X101SC25015922-Z02-J002/01.RawData/Mac-W1-1/Mac-W1-1_1.fq.gz

./X101SC25015922-Z02-J002/01.RawData/Mac-W1-1/Mac-W1-1_2.fq.gz

./X101SC25015922-Z02-J002/01.RawData/Mac-W1-2/Mac-W1-2_1.fq.gz

./X101SC25015922-Z02-J002/01.RawData/Mac-W1-2/Mac-W1-2_2.fq.gz

./X101SC25015922-Z02-J002/01.RawData/Mac-W1-3/Mac-W1-3_1.fq.gz

./X101SC25015922-Z02-J002/01.RawData/Mac-W1-3/Mac-W1-3_2.fq.gz

./X101SC25015922-Z02-J002/01.RawData/Mac-WT19606-2/Mac-WT19606-2_1.fq.gz

./X101SC25015922-Z02-J002/01.RawData/Mac-WT19606-2/Mac-WT19606-2_2.fq.gz

./X101SC25015922-Z02-J002/01.RawData/Mac-WT19606-3/Mac-WT19606-3_1.fq.gz

./X101SC25015922-Z02-J002/01.RawData/Mac-WT19606-3/Mac-WT19606-3_2.fq.gz

./X101SC25015922-Z02-J002/01.RawData/Mac-WT19606-4/Mac-WT19606-4_1.fq.gz

./X101SC25015922-Z02-J002/01.RawData/Mac-WT19606-4/Mac-WT19606-4_2.fq.gz

./X101SC25015922-Z02-J002/01.RawData/Mac-Y1-2/Mac-Y1-2_1.fq.gz

./X101SC25015922-Z02-J002/01.RawData/Mac-Y1-2/Mac-Y1-2_2.fq.gz

./X101SC25015922-Z02-J002/01.RawData/Mac-Y1-3/Mac-Y1-3_1.fq.gz

./X101SC25015922-Z02-J002/01.RawData/Mac-Y1-3/Mac-Y1-3_2.fq.gz

./X101SC25015922-Z02-J002/01.RawData/Mac-Y1-4/Mac-Y1-4_1.fq.gz

./X101SC25015922-Z02-J002/01.RawData/Mac-Y1-4/Mac-Y1-4_2.fq.gz

Data_Tam_RNAseq_2025_subMIC_exposure_on_ATCC19606/

./X101SC25062155-Z01-J001/01.RawData/0_5ΔIJ-17-1/0_5ΔIJ-17-1_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/0_5ΔIJ-17-1/0_5ΔIJ-17-1_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/0_5ΔIJ-17-2/0_5ΔIJ-17-2_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/0_5ΔIJ-17-2/0_5ΔIJ-17-2_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/0_5ΔIJ-17-3/0_5ΔIJ-17-3_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/0_5ΔIJ-17-3/0_5ΔIJ-17-3_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/0_5ΔIJ-24-1/0_5ΔIJ-24-1_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/0_5ΔIJ-24-1/0_5ΔIJ-24-1_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/0_5ΔIJ-24-2/0_5ΔIJ-24-2_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/0_5ΔIJ-24-2/0_5ΔIJ-24-2_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/0_5ΔIJ-24-3/0_5ΔIJ-24-3_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/0_5ΔIJ-24-3/0_5ΔIJ-24-3_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/preWT-17-1/preWT-17-1_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/preWT-17-1/preWT-17-1_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/preWT-17-2/preWT-17-2_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/preWT-17-2/preWT-17-2_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/preWT-17-3/preWT-17-3_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/preWT-17-3/preWT-17-3_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/preWT-24-1/preWT-24-1_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/preWT-24-1/preWT-24-1_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/preWT-24-2/preWT-24-2_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/preWT-24-2/preWT-24-2_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/preWT-24-3/preWT-24-3_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/preWT-24-3/preWT-24-3_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/preΔIJ-17-1/preΔIJ-17-1_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/preΔIJ-17-1/preΔIJ-17-1_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/preΔIJ-17-2/preΔIJ-17-2_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/preΔIJ-17-2/preΔIJ-17-2_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/preΔIJ-17-3/preΔIJ-17-3_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/preΔIJ-17-3/preΔIJ-17-3_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/preΔIJ-24-1/preΔIJ-24-1_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/preΔIJ-24-1/preΔIJ-24-1_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/preΔIJ-24-2/preΔIJ-24-2_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/preΔIJ-24-2/preΔIJ-24-2_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/preΔIJ-24-3/preΔIJ-24-3_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/preΔIJ-24-3/preΔIJ-24-3_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/WT0_5-17-1/WT0_5-17-1_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/WT0_5-17-1/WT0_5-17-1_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/WT0_5-17-2/WT0_5-17-2_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/WT0_5-17-2/WT0_5-17-2_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/WT0_5-17-3/WT0_5-17-3_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/WT0_5-17-3/WT0_5-17-3_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/WT0_5-24-1/WT0_5-24-1_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/WT0_5-24-1/WT0_5-24-1_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/WT0_5-24-2/WT0_5-24-2_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/WT0_5-24-2/WT0_5-24-2_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/WT0_5-24-3/WT0_5-24-3_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/WT0_5-24-3/WT0_5-24-3_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/WT-17-1/WT-17-1_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/WT-17-1/WT-17-1_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/WT-17-2/WT-17-2_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/WT-17-2/WT-17-2_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/WT-17-3/WT-17-3_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/WT-17-3/WT-17-3_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/WT-24-1/WT-24-1_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/WT-24-1/WT-24-1_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/WT-24-2/WT-24-2_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/WT-24-2/WT-24-2_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/WT-24-3/WT-24-3_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/WT-24-3/WT-24-3_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/ΔIJ-17-1/ΔIJ-17-1_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/ΔIJ-17-1/ΔIJ-17-1_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/ΔIJ-17-2/ΔIJ-17-2_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/ΔIJ-17-2/ΔIJ-17-2_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/ΔIJ-17-3/ΔIJ-17-3_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/ΔIJ-17-3/ΔIJ-17-3_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/ΔIJ-24-1/ΔIJ-24-1_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/ΔIJ-24-1/ΔIJ-24-1_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/ΔIJ-24-2/ΔIJ-24-2_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/ΔIJ-24-2/ΔIJ-24-2_2.fq.gz

./X101SC25062155-Z01-J001/01.RawData/ΔIJ-24-3/ΔIJ-24-3_1.fq.gz

./X101SC25062155-Z01-J001/01.RawData/ΔIJ-24-3/ΔIJ-24-3_2.fq.gz

Data_Tam_DNAseq_2025_ATCC19606-Y1Y2Y3Y4W1W2W3W4/

Illumina short-sequencing:

./X101SC24065637-Z01-J001/01.RawData/△adeIJ/△adeIJ_1.fq.gz

./X101SC24065637-Z01-J001/01.RawData/△adeIJ/△adeIJ_2.fq.gz

./X101SC24065637-Z01-J001/01.RawData/Tig1/Tig1_1.fq.gz

./X101SC24065637-Z01-J001/01.RawData/Tig1/Tig1_2.fq.gz

./X101SC24065637-Z01-J001/01.RawData/Tig2/Tig2_1.fq.gz

./X101SC24065637-Z01-J001/01.RawData/Tig2/Tig2_2.fq.gz

./X101SC24065637-Z01-J001/01.RawData/W/W_1.fq.gz

./X101SC24065637-Z01-J001/01.RawData/W/W_2.fq.gz

./X101SC24065637-Z01-J002/01.RawData/W2/W2_1.fq.gz

./X101SC24065637-Z01-J002/01.RawData/W2/W2_2.fq.gz

./X101SC24065637-Z01-J002/01.RawData/W3/W3_1.fq.gz

./X101SC24065637-Z01-J002/01.RawData/W3/W3_2.fq.gz

./X101SC24065637-Z01-J002/01.RawData/W4/W4_1.fq.gz

./X101SC24065637-Z01-J002/01.RawData/W4/W4_2.fq.gz

./X101SC24065637-Z01-J001/01.RawData/Y/Y_1.fq.gz

./X101SC24065637-Z01-J001/01.RawData/Y/Y_2.fq.gz

./X101SC24065637-Z01-J002/01.RawData/Y2/Y2_1.fq.gz

./X101SC24065637-Z01-J002/01.RawData/Y2/Y2_2.fq.gz

./X101SC24065637-Z01-J002/01.RawData/Y3/Y3_1.fq.gz

./X101SC24065637-Z01-J002/01.RawData/Y3/Y3_2.fq.gz

./X101SC24065637-Z01-J002/01.RawData/Y4/Y4_1.fq.gz

./X101SC24065637-Z01-J002/01.RawData/Y4/Y4_2.fq.gz

Nanopore long-sequencing:

./X101SC25080408-Z01-J001/Release-X101SC25080408-Z01-J001-20251009/Data-X101SC25080408-Z01-J001/W1/0710_2F_PBG50143_74807b09/W1_fastq_pass.tar

./X101SC25080408-Z01-J001/Release-X101SC25080408-Z01-J001-20251009/Data-X101SC25080408-Z01-J001/W1/0629_2H_PBG55359_f19e323f/W1_fastq_pass.tar

./X101SC25080408-Z01-J001/Release-X101SC25080408-Z01-J001-20251009/Data-X101SC25080408-Z01-J001/W1/0631_2C_PBG05153_55abe88b/W1_fastq_pass.tar

./X101SC25080408-Z01-J001/Release-X101SC25080408-Z01-J001-20251009/Data-X101SC25080408-Z01-J001/W2/0620_2C_PBG17000_6bfd0048/W2_fastq_pass.tar

./X101SC25080408-Z01-J001/Release-X101SC25080408-Z01-J001-20251009/Data-X101SC25080408-Z01-J001/W3/0710_2F_PBG50143_74807b09/W3_fastq_pass.tar

./X101SC25080408-Z01-J001/Release-X101SC25080408-Z01-J001-20251009/Data-X101SC25080408-Z01-J001/W3/0629_2H_PBG55359_f19e323f/W3_fastq_pass.tar

./X101SC25080408-Z01-J001/Release-X101SC25080408-Z01-J001-20251009/Data-X101SC25080408-Z01-J001/W4/0620_2C_PBG17000_6bfd0048/W4_fastq_pass.tar

./X101SC25080408-Z01-J001/Release-X101SC25080408-Z01-J001-20251009/Data-X101SC25080408-Z01-J001/Y1/0655_3B_PBE70655_6bbd09a4/Y1_fastq_pass.tar

./X101SC25080408-Z01-J001/Release-X101SC25080408-Z01-J001-20251009/Data-X101SC25080408-Z01-J001/Y1/0620_2C_PBG17000_6bfd0048/Y1_fastq_pass.tar

./X101SC25080408-Z01-J001/Release-X101SC25080408-Z01-J001-20251009/Data-X101SC25080408-Z01-J001/Y1/0631_2C_PBG05153_55abe88b/Y1_fastq_pass.tar

./X101SC25080408-Z01-J001/Release-X101SC25080408-Z01-J001-20251009/Data-X101SC25080408-Z01-J001/Y2/0620_2C_PBG17000_6bfd0048/Y2_fastq_pass.tar

./X101SC25080408-Z01-J001/Release-X101SC25080408-Z01-J001-20251009/Data-X101SC25080408-Z01-J001/Y3/0620_2C_PBG17000_6bfd0048/Y3_fastq_pass.tar

./X101SC25080408-Z01-J001/Release-X101SC25080408-Z01-J001-20251009/Data-X101SC25080408-Z01-J001/Y4/0620_2C_PBG17000_6bfd0048/Y4_fastq_pass.tar

Data_Tam_DNAseq_2025_AYE-WT_Q_S_craA-Tig4_craA-1-Cm200_craA-2-Cm200/

./X101SC25015922-Z01-J001/01.RawData/AYE-craA-1onCm200/AYE-craA-1onCm200_1.fq.gz

./X101SC25015922-Z01-J001/01.RawData/AYE-craA-1onCm200/AYE-craA-1onCm200_2.fq.gz

./X101SC25015922-Z01-J001/01.RawData/AYE-craA-2onCm200/AYE-craA-2onCm200_1.fq.gz

./X101SC25015922-Z01-J001/01.RawData/AYE-craA-2onCm200/AYE-craA-2onCm200_2.fq.gz

./X101SC25015922-Z01-J001/01.RawData/AYE-craAonTig4/AYE-craAonTig4_1.fq.gz

./X101SC25015922-Z01-J001/01.RawData/AYE-craAonTig4/AYE-craAonTig4_2.fq.gz

./X101SC25015922-Z01-J001/01.RawData/AYE-Q/AYE-Q_1.fq.gz

./X101SC25015922-Z01-J001/01.RawData/AYE-Q/AYE-Q_2.fq.gz

./X101SC25015922-Z01-J001/01.RawData/AYE-S/AYE-S_1.fq.gz

./X101SC25015922-Z01-J001/01.RawData/AYE-S/AYE-S_2.fq.gz

./X101SC25015922-Z01-J001/01.RawData/AYE-WTonTig4/AYE-WTonTig4_1.fq.gz

./X101SC25015922-Z01-J001/01.RawData/AYE-WTonTig4/AYE-WTonTig4_2.fq.gz

./X101SC25015922-Z01-J001/01.RawData/clinical/clinical_1.fq.gz

./X101SC25015922-Z01-J001/01.RawData/clinical/clinical_2.fq.gz

Data_Tam_DNAseq_2025_E.hormaechei-adeABadeIJ_adeIJK_CM1_CM2

./X101SC24115801-Z01-J001/01.RawData/adeABadeIJ/adeABadeIJ_1.fq.gz

./X101SC24115801-Z01-J001/01.RawData/adeABadeIJ/adeABadeIJ_2.fq.gz

./X101SC24115801-Z01-J001/01.RawData/adeIJK/adeIJK_1.fq.gz

./X101SC24115801-Z01-J001/01.RawData/adeIJK/adeIJK_2.fq.gz

./X101SC24115801-Z01-J001/01.RawData/CM1/CM1_1.fq.gz

./X101SC24115801-Z01-J001/01.RawData/CM1/CM1_2.fq.gz

./X101SC24115801-Z01-J001/01.RawData/CM2/CM2_1.fq.gz

./X101SC24115801-Z01-J001/01.RawData/CM2/CM2_2.fq.gz

./X101SC24115801-Z01-J001/01.RawData/HF/HF_1.fq.gz

./X101SC24115801-Z01-J001/01.RawData/HF/HF_2.fq.gz

Data_Tam_DNAseq_2026_19606deltaIJfluE/

./X101SC25116512-Z01-J003/01.RawData/19606△ABfluEcef-1/19606△ABfluEcef-1_1.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606△ABfluEcef-1/19606△ABfluEcef-1_2.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606△ABfluEcipro-2/19606△ABfluEcipro-2_1.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606△ABfluEcipro-2/19606△ABfluEcipro-2_2.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606△ABfluEdori-2/19606△ABfluEdori-2_1.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606△ABfluEdori-2/19606△ABfluEdori-2_2.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606△ABfluEnitro-3/19606△ABfluEnitro-3_1.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606△ABfluEnitro-3/19606△ABfluEnitro-3_2.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606△ABfluEpip-1/19606△ABfluEpip-1_1.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606△ABfluEpip-1/19606△ABfluEpip-1_2.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606△ABfluEpolyB-3/19606△ABfluEpolyB-3_1.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606△ABfluEpolyB-3/19606△ABfluEpolyB-3_2.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606△ABfluEtet-1/19606△ABfluEtet-1_1.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606△ABfluEtet-1/19606△ABfluEtet-1_2.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606△IJfluEcef-4/19606△IJfluEcef-4_1.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606△IJfluEcef-4/19606△IJfluEcef-4_2.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606△IJfluEcipro-3/19606△IJfluEcipro-3_1.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606△IJfluEcipro-3/19606△IJfluEcipro-3_2.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606△IJfluEdori-1/19606△IJfluEdori-1_1.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606△IJfluEdori-1/19606△IJfluEdori-1_2.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606△IJfluEnitro-3/19606△IJfluEnitro-3_1.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606△IJfluEnitro-3/19606△IJfluEnitro-3_2.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606△IJfluEpip-4/19606△IJfluEpip-4_1.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606△IJfluEpip-4/19606△IJfluEpip-4_2.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606△IJfluEpolyB-4/19606△IJfluEpolyB-4_1.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606△IJfluEpolyB-4/19606△IJfluEpolyB-4_2.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606wtfluEcef-1/19606wtfluEcef-1_1.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606wtfluEcef-1/19606wtfluEcef-1_2.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606wtfluEcipro-2/19606wtfluEcipro-2_1.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606wtfluEcipro-2/19606wtfluEcipro-2_2.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606wtfluEdori-1/19606wtfluEdori-1_1.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606wtfluEdori-1/19606wtfluEdori-1_2.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606wtfluEnitro-1/19606wtfluEnitro-1_1.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606wtfluEnitro-1/19606wtfluEnitro-1_2.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606wtfluEpip-4/19606wtfluEpip-4_1.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606wtfluEpip-4/19606wtfluEpip-4_2.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606wtfluEpolyB-4/19606wtfluEpolyB-4_1.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606wtfluEpolyB-4/19606wtfluEpolyB-4_2.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606wtfluEtet-2/19606wtfluEtet-2_1.fq.gz

./X101SC25116512-Z01-J003/01.RawData/19606wtfluEtet-2/19606wtfluEtet-2_2.fq.gz

Data_Tam_DNAseq_2026_Acinetobacter_harbinensis/

./X101SC25116512-Z01-J002/01.RawData/An6/An6_1.fq.gz

./X101SC25116512-Z01-J002/01.RawData/An6/An6_2.fq.gz

Data_Tam_Metagenomics_2026/

./X101SC25123808-Z01-J001/01.RawData/A1/A1_1.fq.gz

./X101SC25123808-Z01-J001/01.RawData/A1/A1_2.fq.gz

./X101SC25123808-Z01-J001/01.RawData/A1a/A1a_1.fq.gz

./X101SC25123808-Z01-J001/01.RawData/A1a/A1a_2.fq.gz

./X101SC25123808-Z01-J001/01.RawData/A2/A2_1.fq.gz

./X101SC25123808-Z01-J001/01.RawData/A2/A2_2.fq.gz

./X101SC25123808-Z01-J001/01.RawData/B1/B1_1.fq.gz

./X101SC25123808-Z01-J001/01.RawData/B1/B1_2.fq.gz

./X101SC25123808-Z01-J001/01.RawData/B2/B2_1.fq.gz

./X101SC25123808-Z01-J001/01.RawData/B2/B2_2.fq.gz

Data_Foong_RNAseq_2021_ATCC19606_Cm/

wt_r1_R1.fq.gz -> ../raw_data_batch1/WT_1_1.fq.gz

wt_r1_R2.fq.gz -> ../raw_data_batch1/WT_1_2.fq.gz

wt_r2_R1.fq.gz -> ../raw_data_batch1/WT_2B_1.fq.gz

wt_r2_R2.fq.gz -> ../raw_data_batch1/WT_2B_2.fq.gz

craA_r1_R1.fq.gz -> ../raw_data_batch1/C_1B_1.fq.gz

craA_r1_R2.fq.gz -> ../raw_data_batch1/C_1B_2.fq.gz

craA_r2_R1.fq.gz -> ../raw_data_batch1/C_2_1.fq.gz

craA_r2_R2.fq.gz -> ../raw_data_batch1/C_2_2.fq.gz

adeIJ_r1_R1.fq.gz -> ../raw_data_batch1/J_1_1.fq.gz

adeIJ_r1_R2.fq.gz -> ../raw_data_batch1/J_1_2.fq.gz

adeIJ_r2_R1.fq.gz -> ../raw_data_batch1/J_2_1.fq.gz

adeIJ_r2_R2.fq.gz -> ../raw_data_batch1/J_2_2.fq.gz

wt_r3_R1.fq.gz -> ../raw_data_batch2/Control_1.fq.gz

wt_r3_R2.fq.gz -> ../raw_data_batch2/Control_2.fq.gz

wt.abx_r1_R1.fq.gz -> ../raw_data_batch2/WT_1B_1.fq.gz

wt.abx_r1_R2.fq.gz -> ../raw_data_batch2/WT_1B_2.fq.gz

wt.abx_r2_R1.fq.gz -> ../raw_data_batch2/WT_2B_1.fq.gz

wt.abx_r2_R2.fq.gz -> ../raw_data_batch2/WT_2B_2.fq.gz

wt.abx_r3_R1.fq.gz -> ../raw_data_batch2/WT_3B_1.fq.gz

wt.abx_r3_R2.fq.gz -> ../raw_data_batch2/WT_3B_2.fq.gz

craA.abx_r1_R1.fq.gz -> ../raw_data_batch2/Cra_1_1.fq.gz

craA.abx_r1_R2.fq.gz -> ../raw_data_batch2/Cra_1_2.fq.gz

craA.abx_r2_R1.fq.gz -> ../raw_data_batch2/Cra_2_1.fq.gz

craA.abx_r2_R2.fq.gz -> ../raw_data_batch2/Cra_2_2.fq.gz

craA.abx_r3_R1.fq.gz -> ../raw_data_batch2/Cra_3_1.fq.gz

craA.abx_r3_R2.fq.gz -> ../raw_data_batch2/Cra_3_2.fq.gz

adeIJ.abx_r1_R1.fq.gz -> ../raw_data_batch2/IJ_1B_1.fq.gz

adeIJ.abx_r1_R2.fq.gz -> ../raw_data_batch2/IJ_1B_2.fq.gz

adeIJ.abx_r2_R1.fq.gz -> ../raw_data_batch2/IJ_2B_1.fq.gz

adeIJ.abx_r2_R2.fq.gz -> ../raw_data_batch2/IJ_2B_2.fq.gz

adeIJ.abx_r3_R1.fq.gz -> ../raw_data_batch2/IJ_3_1.fq.gz

adeIJ.abx_r3_R2.fq.gz -> ../raw_data_batch2/IJ_3_2.fq.gz

adeIJ_r3_R1.fq.gz -> ../raw_data_batch3/adIJ_1_1.fq.gz

adeIJ_r3_R2.fq.gz -> ../raw_data_batch3/adIJ_1_2.fq.gz

adeIJ_r4_R1.fq.gz -> ../raw_data_batch3/adIJ_2_1.fq.gz

adeIJ_r4_R2.fq.gz -> ../raw_data_batch3/adIJ_2_2.fq.gz

craA_r3_R1.fq.gz -> ../raw_data_batch3/crA2_1.fq.gz

craA_r3_R2.fq.gz -> ../raw_data_batch3/crA2_2.fq.gz

craA.abx_r4_R1.fq.gz -> ../raw_data_batch3/crA_ab_1_1.fq.gz

craA.abx_r4_R2.fq.gz -> ../raw_data_batch3/crA_ab_1_2.fq.gz

craA.abx_r5_R1.fq.gz -> ../raw_data_batch3/crA_ab_2_1.fq.gz

craA.abx_r5_R2.fq.gz -> ../raw_data_batch3/crA_ab_2_2.fq.gz

craA.abx_r6_R1.fq.gz -> ../raw_data_batch3/crA_ab_3_1.fq.gz

craA.abx_r6_R2.fq.gz -> ../raw_data_batch3/crA_ab_3_2.fq.gz

adeAB_r1_R1.fq.gz -> ../raw_data_batch3/adAB_1_1.fq.gz

adeAB_r1_R2.fq.gz -> ../raw_data_batch3/adAB_1_2.fq.gz

adeAB_r2_R1.fq.gz -> ../raw_data_batch3/adAB_2_1.fq.gz

adeAB_r2_R2.fq.gz -> ../raw_data_batch3/adAB_2_2.fq.gz

adeAB.abx_r1_R1.fq.gz -> ../raw_data_batch3/adAB_ab1_1.fq.gz

adeAB.abx_r1_R2.fq.gz -> ../raw_data_batch3/adAB_ab1_2.fq.gz

adeAB.abx_r2_R1.fq.gz -> ../raw_data_batch3/adAB_ab2_1.fq.gz

adeAB.abx_r2_R2.fq.gz -> ../raw_data_batch3/adAB_ab2_2.fq.gz

adeAB.abx_r3_R1.fq.gz -> ../raw_data_batch3/adAB_ab3_1.fq.gz

adeAB.abx_r3_R2.fq.gz -> ../raw_data_batch3/adAB_ab3_2.fq.gz

Data_Foong_DNAseq_2025_AYE_Dark_vs_Light/

./X101SC25116512-Z01-J001/01.RawData/Dark/Dark_1.fq.gz

./X101SC25116512-Z01-J001/01.RawData/Dark/Dark_2.fq.gz

./X101SC25116512-Z01-J001/01.RawData/Light/Light_1.fq.gz

./X101SC25116512-Z01-J001/01.RawData/Light/Light_2.fq.gzDirectory Listings Summary (Disk Directories)

/media/jhuang/INTENSO

(empty; data now on

~/DATA_Intenso)

| # | Name |

|---|---|

| 1 | (empty) |

~/DATA

| # | Name |

|---|---|

| 1 | Data_Ute_MKL1 |

| 2 | Data_Ute_RNA_4_2022-11_test |

| 3 | Data_Ute_RNA_3 |

| 4 | Data_Susanne_Carotis_RNASeq_PUBLISHING |

| 5 | Data_Jiline_Yersinia_SNP |

| 6 | Data_Tam_ABAYE_RS05070_on_A_calcoaceticus_baumannii_complex_DUPLICATED_DEL |

| 7 | Data_Nicole_CRC1648 |

| 8 | Mouse_HS3ST1_12373_out |

| 9 | Mouse_HS3ST1_12175_out |

| 10 | Data_Biobakery |

| 11 | Data_Xiaobo_10x_2 |

| 12 | Data_Xiaobo_10x_3 |

| 13 | Talk_Nicole_CRC1648 |

| 14 | Talks_Bioinformatics_Meeting |

| 15 | Talks_resources |

| 16 | Data_Susanne_MPox_DAMIAN |

| 17 | Data_host_transcriptional_response |

| 18 | Talks_including_DEEP-DV |

| 19 | DOKTORARBEIT |

| 20 | Data_Susanne_MPox |

| 21 | Data_Jiline_Transposon |

| 22 | Data_Jiline_Transposon2 |

| 23 | Data_Matlab |

| 24 | deepseek-ai |

| 25 | Stick_Mi_DEL |

| 26 | TODO_shares |

| 27 | Data_Ute_RNA_4 |

| 28 | Data_Liu_PCA_plot |

| 29 | README_run_viral-ngs_inside_Docker |

| 30 | README_compare_genomes |

| 31 | mapped.bam |

| 32 | Data_Serpapi |

| 33 | Data_Ute_RNA_1_2 |

| 34 | Data_Marc_RNAseq_2024 |

| 35 | Data_Nicole_CaptureProbeSequencing |

| 36 | LOG_mapping |

| 37 | Data_Huang_Human_herpesvirus_3 |

| 38 | Data_Nicole_DAMIAN_Post-processing_Pathoprobe_FluB_Links |

| 39 | Access_to_Win7 |

| 40 | Data_DAMIAN_Post-processing_Flavivirus_and_FSME_and_Haemophilus |

| 41 | Data_Luise_Sepi_STKN |

| 42 | Data_Patricia_Sepi_7samples |

| 43 | Data_Soeren_2025_PUBLISHING |

| 44 | Data_Ben_RNAseq_2025 |

| 45 | Data_Tam_DNAseq_2025_AYE-WT_Q_S_craA-Tig4_craA-1-Cm200_craA-2-Cm200 |

| 46 | Data_Patricia_Transposon |

| 47 | Data_Patricia_Transposon_2025 |

| 48 | Colocation_Space |

| 49 | Data_Tam_Methylation_2025_empty |

| 50 | 2025-11-03_eVB-Schreiben_12-57.pdf |

| 51 | DEGs_Group1_A1-A3+A8-A10_vs_Group2_B10-B16.png |

| 52 | README.pdf |

| 53 | Data_Hannes_JCM00612 |

| 54 | 167_redundant_DEL |

| 55 | Lehre_Bioinformatik |

| 56 | Data_Ben_Boruta_Analysis |

| 57 | Data_Childrensclinic_16S_2025_DEL |

| 58 | Data_Ben_Mycobacterium_pseudoscrofulaceum |

| 59 | Foong_RNA_mSystems_Huang_Changed.txt |

| 60 | Data_Pietro_Scatturo_and_Charlotte_Uetrecht_16S_2025 |

| 61 | Data_JuliaBerger_RNASeq_SARS-CoV-2 |

| 62 | Data_PaulBongarts_S.epidermidis_HDRNA |

| 63 | Data_Ute |

| 64 | Data_Foong_DNAseq_2025_AYE_Dark_vs_Light_TODO |

| 65 | Data_Foong_RNAseq_2021_ATCC19606_Cm |

| 66 | Data_Tam_Funding |

| 67 | Data_Tam_RNAseq_2025_LB-AB_IJ_W1_Y1_WT_vs_Mac-AB_IJ_W1_Y1_WT_on_ATCC19606 |

| 68 | Data_Tam_RNAseq_2025_subMIC_exposure_on_ATCC19606 |

| 69 | Data_Tam.txt |

| 70 | Data_Tam_RNAseq_2024_AUM_MHB_Urine_on_ATCC19606 |

| 71 | Data_Tam_Metagenomics_2026 |

| 72 | Data_Michelle |

| 73 | Data_Nicole_16S_2025_Childrensclinic |

| 74 | Data_Sophie_HDV_Sequences |

| 75 | Data_Tam_DNAseq_2026_19606deltaIJfluE |

| 76 | README_nf-core |

| 77 | Data_Vero_Kymographs |

| 78 | Access_to_Win10 |

| 79 | Data_Patricia_AMRFinderPlus_2025 |

| 80 | Data_Tam_DNAseq_2025_Unknown-adeABadeIJ_adeIJK_CM1_CM2 |

| 81 | Data_Damian |

| 82 | Data_Karoline_16S |

| 83 | Data_JuliaFuchs_RNAseq_2025 |

| 84 | Data_Tam_DNAseq_2025_ATCC19606-Y1Y2Y3Y4W1W2W3W4_TODO |

| 85 | Data_Tam_DNAseq_2026_Acinetobacter_harbinensis |

| 86 | Data_Benjamin_DNAseq_2026_GE11174 |

| 87 | Data_Susanne_spatialRNA_2022.9.1_backup |

| 88 | Data_Susanne_spatialRNA |

~/DATA_A

| # | Name |

|---|---|

| 1 | Data_Damian_NEW_CREATED |

| 2 | Data_R_bubbleplots |

| 3 | Data_Ute_TRANSFERED_DEL |

| 4 | Paper_Target_capture_sequencing_MHH_PUBLISHED |

| 5 | Data_Nicole8_Lamprecht_new_PUBLISHED |

| 6 | Data_Samira_RNAseq |

~/DATA_B

| # | Name |

|---|---|

| 1 | Data_DAMIAN_endocarditis_encephalitis |

| 2 | Data_Denise_sT_PUBLISHING |

| 3 | Data_Fran2_16S_func |

| 4 | Data_Holger_5179-R1_vs_5179 |

| 5 | Antraege_ |

| 6 | Data_16S_Nicole_210222 |

| 7 | Data_Adam_Influenza_A_virus |

| 8 | Data_Anna_Efaecium_assembly |

| 9 | Data_Bactopia |

| 10 | Data_Ben_RNAseq |

| 11 | Data_Johannes_PIV3 |

| 12 | Data_Luise_Epidome_longitudinal_nose |

| 13 | Data_Manja_Hannes_Probedesign |

| 14 | Data_Marc_AD_PUBLISHING |

| 15 | Data_Marc_RNA-seq_Saureus_Review |

| 16 | Data_Nicole_16S |

| 17 | Data_Nicole_cfDNA_pathogens |

| 18 | Data_Ring_and_CSF_PegivirusC_DAMIAN |

| 19 | Data_Song_Microarray |

| 20 | Data_Susanne_Omnikron |

| 21 | Data_Viro |

| 22 | Doktorarbeit |

| 23 | Poster_Rohde_20230724 |

| 24 | Data_Django |

| 25 | Data_Holger_S.epidermidis_1585_5179_HD05 |

| 26 | Data_Manja_RNAseq_Organoids_Virus |

| 27 | Data_Holger_MT880870_MT880872_Annotation |

| 28 | Data_Soeren_RNA-seq_2022 |

| 29 | Data_Manja_RNAseq_Organoids_Merged |

| 30 | Data_Gunnar_Yersiniomics |

| 31 | Data_Manja_RNAseq_Organoids |

| 32 | Data_Susanne_Carotis_MS |

~/DATA_C

(names only; as listed)

| # | Name |

|---|---|

| 1 | 2022-10-27_IRI_manuscript_v03_JH.docx |

| 2 | 16304905.fasta |

| 3 | ’16S data manuscript_NF.docx’ |

| 4 | 180820_2_supp_4265595_sw6zjk.docx |

| 5 | 180820_2_supp_4265596_sw6zjk.docx |

| 6 | 1a_vs_3.csv |

| 7 | ‘2.05.01.05-A01 Urlaubsantrag-Shuting-beantragt.pdf’ |

| 8 | 2014SawickaBBA.pdf |

| 9 | 20160509Manuscript_NDM_OXA_mitKomm.doc |

| 10 | 220607_Agenda_monthly_meeting.pdf |

| 11 | ‘20221129 Table mutations.docx’ |

| 12 | 230602_NB501882_0428_AHKG53BGXT.zip |

| 13 | 362383173.rar |

| 14 | 562.9459.1.fa |

| 15 | 562.9459.1_rc.fa |

| 16 | ASA3P.pdf |

| 17 | All_indels_annotated_vHR.xlsx |

| 18 | ‘Amplikon_indeces_Susanne +groups.xlsx’ |

| 19 | Amplikon_indeces_Susanne.xlsx |

| 20 | GAMOLA2 |

| 21 | Data_Susanne_Carotis_spatialRNA_PUBLISHING (dead link) |

| 22 | Data_Paul_Staphylococcus_epidermidis |

| 23 | Data_Nicola_Schaltenberg_PICRUSt |

| 24 | Data_Nicola_Schaltenberg |

| 25 | Data_Nicola_Gagliani |

| 26 | Data_methylome_MMc |

| 27 | Data_Jingang |

| 28 | Data_Indra_RNASeq_GSM2262901 |

| 29 | Data_Holger_VRE |

| 30 | Data_Holger_Pseudomonas_aeruginosa_SNP |

| 31 | Data_Hannes_ChIPSeq |

| 32 | Data_Emilia_MeDIP |

| 33 | Data_ChristophFR_HepE_published |

| 34 | Data_Christopher_MeDIP_MMc_published |

| 35 | Data_Anna_Kieler_Sepi_Staemme |

| 36 | Data_Anna12_HAPDICS_final |

| 37 | Data_Anastasia_RNASeq_PUBLISHING |

| 38 | Aufnahmeantrag_komplett_10_2022.pdf |

| 39 | Astrovirus.pdf |

| 40 | COMMANDS |

| 41 | Bacterial_pipelines.txt |

| 42 | COMPSRA_uke_DEL.jar |

| 43 | ChIPSeq_pipeline_desc.docx |

| 44 | ChIPSeq_pipeline_desc.pdf |

| 45 | Comparative_genomic_analysis_of_eight_novel_haloal.pdf |

| 46 | CvO_Klassenliste_7_3.pdf |

| 47 | ‘Copy of pool_b1_CGATGT_300.xlsx’ |

| 48 | Fran_16S_Exp8-17-21-27.txt |

| 49 | HPI_DRIVE |

| 50 | HEV_aligned.fasta |

| 51 | INTENSO_DIR |

| 52 | HPI_samples_for_NGS_29.09.22.xlsx |

| 53 | Hotmail_to_Gmail |

| 54 | Indra_Thesis_161020.pdf |

| 55 | ‘LT K331A.gbk’ |

| 56 | LOG_p954_stat |

| 57 | LOG |

| 58 | Manuscript_10_02_2021.docx |

| 59 | Metagenomics_Tools_and_Insights.pdf |

| 60 | ‘Miseq Amplikon LAuf April.xlsx’ |

| 61 | NGS.tar.gz |

| 62 | Nachweis_Bakterien_Viren_im_Hochdurchsatz.pdf |

| 63 | Nicole8_Lamprecht_logs |

| 64 | Nanopore.handouts.pdf |

| 65 | ‘Norovirus paper Susanne 191105.docx’ |

| 66 | PhyloRNAalifold.pdf |

| 67 | README_R |

| 68 | README_RNAHiSwitch_DEL |

| 69 | RNA-NGS_Analysis_modul3_NanoStringNorm.zip |

| 70 | RNAConSLOptV1.2.tar.gz |

| 71 | ‘RSV GFP5 including 3`UTR.docx’ |

| 72 | SNPs_on_pangenome.txt |

| 73 | SERVER |

| 74 | R_tutorials-master.zip |

| 75 | Rawdata_Readme.pdf |

| 76 | SUB10826945_record_preview.txt |

| 77 | S_staphylococcus_annotated_diff_expr.xls |

| 78 | Snakefile_list |

| 79 | Source_Classification_Code.rds |

| 80 | Supplementary_Table_S3.xlsx |

| 81 | Untitled.ipynb |

| 82 | UniproUGENE_UserManual.pdf |

| 83 | Untitled1.ipynb |

| 84 | Untitled2.ipynb |

| 85 | Untitled3.ipynb |

| 86 | WAC6h_vs_WAP6h_down.txt |

| 87 | damian_nodbs |

| 88 | WAC6h_vs_WAP6h_up.txt |

| 89 | ‘add. Figures Hamburg_UKE.pptx’ |

| 90 | all_gene_counts_with_annotation.xlsx |

| 91 | app_flask.py |

| 92 | bengal-bay-0.1.json |

| 93 | bengal3_ac3.yml |

| 94 | call_shell_from_Ruby.png |

| 95 | bengal3ac3.yml |

| 96 | empty.fasta |

| 97 | coefficients_csaw_vs_diffreps.xlsx |

| 98 | exchange.txt |

| 99 | exdata-data-NEI_data.zip |

| 100 | genes_wac6_wap6.xls |

| 101 | go1.13.linux-amd64.tar.gz.1 |

| 102 | hev_p2-p5.fa |

| 103 | map_corrected_backup.txt |

| 104 | install_nginx_on_hamm |

| 105 | hg19.rmsk.bed |

| 106 | metadata-9563675-processed-ok.tsv |

| 107 | mkg_sprechstundenflyer_ver1b_dezember_2019.pdf |

| 108 | multiqc_config.yaml |

| 109 | p11326_OMIKRON3398_corsurv.gb |

| 110 | p11326_OMIKRON3398_corsurv.gb_converted.fna |

| 111 | parseGenbank_reformat.py |

| 112 | pangenome-snakemake-master.zip |

| 113 | ‘phylo tree draft.pdf’ |

| 114 | qiime_params.txt |

| 115 | pool_b1_CGATGT_300.zip |

| 116 | qiime_params_backup.txt |

| 117 | qiime_params_s16_s18.txt |

| 118 | snakePipes |

| 119 | results_description.html |

| 120 | rnaalihishapes.tar.gz |

| 121 | rnaseq_length_bias.pdf |

| 122 | 3932-Leber |

| 123 | BioPython |

| 124 | Biopython |

| 125 | DEEP-DV |

| 126 | DOKTORARBEIT |

| 127 | Data_16S_Arck_vaginal_stool |

| 128 | Data_16S_BS052 |

| 129 | Data_16S_Birgit |

| 130 | Data_16S_Christner |

| 131 | Data_16S_Leonie |

| 132 | Data_16S_PatientA-G_CSF |

| 133 | Data_16S_Schaltenberg |

| 134 | Data_16S_benchmark |

| 135 | Data_16S_benchmark2 |

| 136 | Data_16S_gcdh_BKV |

| 137 | Data_Alex1_Amplicon |

| 138 | Data_Alex1_SNP |

| 139 | Data_Analysis_for_Life_Science |

| 140 | Data_Anna13_vanA-Element |

| 141 | Data_Anna14_PACBIO_methylation |

| 142 | Data_Anna_C.acnes2_old_DEL |

| 143 | Data_Anna_MT880872_update |

| 144 | Data_Anna_gap_filling_agrC |

| 145 | Data_Baechlein_Hepacivirus_2018 |

| 146 | Data_Bornavirus |

| 147 | Data_CSF |

| 148 | Data_Christine_cz19-178-rothirsch-bovines-hepacivirus |

| 149 | Data_Daniela_adenovirus_WGS |

| 150 | Data_Emilia_MeDIP_DEL |

| 151 | Data_Francesco2021_16S |

| 152 | Data_Francesco2021_16S_re |

| 153 | Data_Gunnar_MS |

| 154 | Data_Hannes_RNASeq |

| 155 | Data_Holger_Efaecium_variants_PUBLISHED |

| 156 | Data_Holger_VRE_DEL |

| 157 | Data_Icebear_Damian |

| 158 | Data_Indra3_H3K4_p2_DEL |

| 159 | Data_Indra6_RNASeq_ChipSeq_Integration_DEL |

| 160 | Data_Indra_Figures |

| 161 | Data_KatjaGiersch_new_HDV |

| 162 | Data_MHH_Encephalitits_DAMIAN |

| 163 | Data_Manja_RPAChIPSeq_public |

| 164 | Data_Manuel_WGS_Yersinia |

| 165 | Data_Manuel_WGS_Yersinia2_DEL |

| 166 | Data_Manuel_WGS_Yersinia_DEL |

| 167 | Data_Marcus_tracrRNA_structures |

| 168 | Data_Mausmaki_Damian |

| 169 | Data_Nicole1_Tropheryma_whipplei |

| 170 | Data_Nicole5 |

| 171 | Data_Nicole5_77-92 |

| 172 | Data_PaulBecher_Rotavirus |

| 173 | Data_Pietschmann_HCV_Amplicon_bigFile |

| 174 | Data_Piscine_Orthoreovirus_3_in_Brown_Trout |

| 175 | Data_Proteomics |

| 176 | Data_RNABioinformatics |

| 177 | Data_RNAKinetics |

| 178 | Data_R_courses |

| 179 | Data_SARS-CoV-2 |

| 180 | Data_SARS-CoV-2_Genome_Announcement_PUBLISHED |

| 181 | Data_Seite |

| 182 | Data_Song_aggregate_sum |

| 183 | Data_Susanne_Amplicon_RdRp_orf1_2_re |

| 184 | Data_Tabea_RNASeq |

| 185 | Data_Thaiss1_Microarray_new |

| 186 | Data_Tintelnot_16S |

| 187 | Data_Wuenee_Plots |

| 188 | Data_Yang_Poster |

| 189 | Data_jupnote |

| 190 | Data_parainfluenza |

| 191 | Data_snakemake_recipe |

| 192 | Data_temp |

| 193 | Data_viGEN |

| 194 | Genomic_Data_Science |

| 195 | Learn_UGENE |

| 196 | MMcPaper |

| 197 | Manuscript_Epigenetics_Macrophage_Yersinia |

| 198 | Manuscript_RNAHiSwitch |

| 199 | MeDIP_Emilia_copy_DEL |

| 200 | Method_biopython |

| 201 | NGS |

| 202 | Okazaki-Seq_Processing |

| 203 | RNA-NGS_Analysis_modul3_NanoStringNorm |

| 204 | RNAConSLOptV1.2 |

| 205 | RNAHeliCes |

| 206 | RNA_li_HeliCes |

| 207 | RNAliHeliCes |

| 208 | RNAliHeliCes_Relatedshapes_modified |

| 209 | R_refcard |

| 210 | R_DataCamp |

| 211 | R_cats_package |

| 212 | R_tutorials-master |

| 213 | SnakeChunks |

| 214 | align_4l_on_FJ705359 |

| 215 | align_4p_on_FJ705359 |

| 216 | assembly |

| 217 | bacto |

| 218 | bam2fastq_mapping_again |

| 219 | chipster |

| 220 | damian_GUI |

| 221 | enhancer-snakemake-demo |

| 222 | hg19_gene_annotations |

| 223 | interlab_comparison_DEL |

| 224 | my_flask |

| 225 | papers |

| 226 | pangenome-snakemake_zhaoc1 |

| 227 | pyflow-epilogos |

| 228 | raw_data_rnaseq_Indra |

| 229 | test_raw_data_dnaseq |

| 230 | test_raw_data_rnaseq |

| 231 | to_Francesco |

| 232 | ukepipe |

| 233 | ukepipe_nf |

| 234 | var_www_DjangoApp_mysite2_2023-05 |

| 235 | roentgenpass.pdf |

| 236 | salmon_tx2gene_GRCh38.tsv |

| 237 | salmon_tx2gene_chrHsv1.tsv |

| 238 | ‘sample IDs_Lamprecht.xlsx’ |

| 239 | summarySCC_PM25.rds |

| 240 | untitled.py |

| 241 | tutorial-rnaseq.pdf |

| 242 | x.log |

| 243 | webapp.tar.gz |

| 244 | temp |

| 245 | temp2 |

| 246 | Data_Susanne_Amplicon_haplotype_analyses_RdRp_orf1_2_re |

| 247 | Data_Susanne_WGS_unbiased |

~/DATA_D

| # | Name |

|---|---|

| 1 | Data_Soeren_RNA-seq_2023_PUBLISHING |

| 2 | Data_Ute |

| 3 | Data_Marc_RNA-seq_Sepidermidis |

| 4 | Data_Patricia_Transposon |

| 5 | Books_DA_for_Life |

| 6 | Data_Sven |

| 7 | Datasize_calculation_based_on_coverage.txt |

| 8 | Data_Paul_HD46_1-wt_resequencing |

| 9 | Data_Sanam_DAMIAN |

| 10 | Data_Tam_variant_calling |

| 11 | Data_Samira_Manuscripts |

| 12 | Data_Silvia_VoltRon_Debug |

| 13 | Data_Pietschmann_229ECoronavirus_Mutations_2024 |

| 14 | Data_Pietschmann_229ECoronavirus_Mutations_2025 |

| 15 | Data_Birthe_Svenja_RSV_Probe3_PUBLISHING |

~/DATA_E

| # | Name |

|---|---|

| 1 | j_huang_until_201904 |

| 2 | Data_2019_April |

| 3 | Data_2019_May |

| 4 | Data_2019_June |

| 5 | Data_2019_July |

| 6 | Data_2019_August |

| 7 | Data_2019_September |

| 8 | Data_Song_RNASeq_PUBLISHED |

| 9 | Data_Laura_MP_RNASeq |

| 10 | Data_Nicole6_HEV_Swantje2 |

| 11 | Data_Becher_Damian_Picornavirus_BovHepV |

| 12 | bacteria_refseq.zip |

| 13 | bacteria_refseq |

| 14 | Data_Rotavirus |

| 15 | Data_Xiaobo_10x |

| 16 | Data_Becher_Damian_Picornavirus_BovHepV_INCOMPLETE_DEL |

~/DATA_Intenso

| # | Name |

|---|---|

| 1 | HOME_FREIBURG_DEL |

| 2 | 150810_M03701_0019_000000000-AFJFK |

| 3 | Data_Thaiss2_Microarray |

| 4 | VirtualBox_VMs_DEL |

| 5 | ‘VirtualBox VMs_DEL’ |

| 6 | ‘VirtualBox VMs2_DEL’ |

| 7 | websites |

| 8 | DATA |

| 9 | Data_Laura |

| 10 | Data_Laura_2 |

| 11 | Data_Laura_3 |

| 12 | galaxy_tools |

| 13 | Downloads2 |

| 14 | Downloads |

| 15 | mom-baby_com_cn |

| 16 | ‘VirtualBox VMs2’ |

| 17 | VirtualBox_VMs |

| 18 | CLC_Data |

| 19 | Work_Dir2 |

| 20 | Work_Dir2_SGE |

| 21 | Data_SPANDx1_Kpneumoniae_vs_Assembly1 |

| 22 | MauveOutput |

| 23 | Fastqs |

| 24 | Data_Anna3_VRE_Ausbruch |

| 25 | Work_Dir_mock_broad_mockinput |

| 26 | Work_Dir_dM_broad_mockinput |

| 27 | Data_Anna8_RNASeq_static_shake_deprecated |

| 28 | PENDRIVE_cont |

| 29 | Work_Dir_WAP_broad_mockinput |

| 30 | Work_Dir_WAC_broad_mockinput |

| 31 | Work_Dir_dP_broad_mockinput |

| 32 | Data_Nicole10_16S_interlab |

| 33 | PAPERS |

| 34 | TB |

| 35 | Data_Anna4_SNP |

| 36 | Data_Carolin1_16S |

| 37 | ChipSeq_Raw_Data3_171009_NB501882_0024_AHNGTYBGX3 |

| 38 | m_aepfelbacher_DEL.zip |

| 39 | Data_Anna7_RNASeq_Cytoscape |

| 40 | Data_Nicole9_Hund_Katze_Mega |

| 41 | Data_Anna2_CO6114 |

| 42 | Data_Nicole3_TH17_orig |

| 43 | Data_Nicole1_Tropheryma_whipplei |

| 44 | results_K27 |

| 45 | ‘VirtualBox VMs’ |

| 46 | Data_Anna6_RNASeq |

| 47 | Data_Anna1_1585_RNAseq |

| 48 | Data_Thaiss1_Microarray |

| 49 | Data_Nicole7_Anelloviruses_Polyomavirus |

| 50 | Data_Nina1_Nicole5_1-76 |

| 51 | Data_Nina1_merged |

| 52 | Data_Nicole8_Lamprecht |

| 53 | Data_Anna5_SNP |

| 54 | chipseq |

| 55 | Downloads_DEL |

| 56 | Data_Gagliani2_enriched_16S |

| 57 | Data_Gagliani1_18S_16S |

| 58 | m_aepfelbacher |

| 59 | Data_Susanne_WGS_3amplicons |

/media/jhuang/Titisee

| # | Name |

|---|---|

| 1 | Data_Anna4_SNP |

| 2 | Data_Anna5_SNP_rsync_error |

| 3 | TRASH |

| 4 | Data_Nicole6_HEV_4_SNP_calling_PE_DEL |

| 5 | Data_Nina1_Nicole7 |

| 6 | Data_Nicole6_HEV_4_SNP_calling_SE_DEL |

| 7 | 180119_M03701_0115_000000000-BFG46.zip |

| 8 | Data_Nicole10_16S_interlab_PUBLISHED |

| 9 | Anna11_assemblies |

| 10 | Anna11_trees |

| 11 | Data_Nicole6_HEV_new_orig_fastqs |

| 12 | Data_Anna9_OXA-48_or_OXA-181 |

| 13 | bengal_results_v1_2018 |

| 14 | DO.pdf |

| 15 | damian_DEL |

| 16 | MAGpy_db |

| 17 | UGENE_v1_32_data_cistrome |

| 18 | UGENE_v1_32_data_ngs_classification |

| 19 | Data_Nicole6_HEV_Swantje |

| 20 | Data_Nico_Gagliani |

| 21 | GAMOLA2_prototyp |

| 22 | Thomas_methylation_EPIC_DO |

| 23 | Data_Nicola_Schaltenberg |

| 24 | Data_Nicola_Schaltenberg_PICRUSt |

| 25 | HOME_FREIBURG |

| 26 | Data_Francesco_16S |

| 27 | 3rd_party |

| 28 | ConsPred_prokaryotic_genome_annotation |

| 29 | ‘System Volume Information’ |

| 30 | damian_v201016 |

| 31 | Data_Holger_VRE |

| 32 | Data_Holger_Pseudomonas_aeruginosa_SNP |

| 33 | Eigene_Ordner_HR |

| 34 | GAMOLA2 |

| 35 | Data_Anastasia_RNASeq |

| 36 | Data_Amir_PUBLISHED |

| 37 | ‘$RECYCLE.BIN’ |

| 38 | Data_Xiaobo_10x_3 |

| 39 | Data_Tam_DNAseq_2023_Comparative_ATCC19606_AYE_ATCC17978 |

| 40 | Data_Holger_S.epidermidis_short |

| 41 | TEMP |

| 42 | Data_Holger_S.epidermidis_long |

/media/jhuang/Elements(Denise_ChIPseq)

| # | Name |

|---|---|

| 1 | Data_Denise_LTtrunc_H3K27me3_2_results_DEL |

| 2 | Data_Denise_LTtrunc_H3K4me3_2_results_DEL |

| 3 | Data_Anna12_HAPDICS_final_not_finished_DEL |

| 4 | m_aepfelbacher_DEL |

| 5 | Data_Damian |

| 6 | ST772_DEL |

| 7 | ALL_trimmed_part_DEL |

| 8 | Data_Denise_ChIPSeq_Protocol1 |

| 9 | Data_Pietschmann_HCV_Amplicon |

| 10 | Data_Nicole6_HEV_ownMethod_new |

| 11 | HD04-1.fasta |

| 12 | RNAHiSwitch_ |

| 13 | RNAHiSwitch__ |

| 14 | RNAHiSwitch___ |

| 15 | RNAHiSwitchpaper |

| 16 | RNAHiSwitch_milestone1_DELETED |

| 17 | RNAHiSwitch_paper.tar.gz |

| 18 | RNAHiSwitch_paper_DELETED |

| 19 | RNAHiSwitch_milestone1 |

| 20 | RNAHiSwitch_paper |

| 21 | Ute_RNASeq_results |

| 22 | Ute_miRNA_results_38 |

| 23 | RNAHiSwitch |

| 24 | Data_HepE_Freiburg_PUBLISHED |

| 25 | Data_INTENSO_2022-06 |

| 26 | ‘$RECYCLE.BIN’ |

| 27 | ‘System Volume Information’ |

| 28 | Data_Anna_Mixta_hanseatica_PUBLISHED |

| 29 | coi_disclosure.docx |

| 30 | Data_Jingang |

| 31 | **Data_Susanne_16S_re_UNPUBLISHED *** |

| 32 | Data_Denise_ChIPSeq_Protocol2 |

| 33 | Data_Caroline_RNAseq_wt_timecourse |

| 34 | Data_Caroline_RNAseq_brain_organoids |

| 35 | Data_Amir_PUBLISHED_DEL |

| 36 | Data_download_virus_fam |

| 37 | Data_Gunnar_Yersiniomics_COPYFAILED_DEL |

| 38 | Data_Paul_and_Marc_Epidome_batch3 |

| 39 | ifconfig_hamm.txt |

| 40 | Data_Soeren_2023_PUBLISHING |

| 41 | Data_Birthe_Svenja_RSV_Probe3_PUBLISHING |

| 42 | Data_Ute |

| 43 | **Data_Susanne_16S_UNPUBLISHED *** |

/media/jhuang/Seagate Expansion Drive(HOffice)

| # | Name |

|---|---|

| 1 | SeagateExpansion.ico |

| 2 | Autorun.inf |

| 3 | Start_Here_Win.exe |

| 4 | Warranty.pdf |

| 5 | Start_Here_Mac.app |

| 6 | Seagate |

| 7 | HomeOffice_DIR (Data_Anna_HAPDICS_RNASeq, From_Samsung_T5) |

| 8 | DATA_COPY_FROM_178528 (copy_and_clean.sh, logfile_jhuang.log, jhuang) |

| 9 | ‘System Volume Information’ |

| 10 | ‘$RECYCLE.BIN’ |

/media/jhuang/Elements(Anna_C.arnes)

| # | Name |

|---|---|

| 1 | Data_Swantje_HEV_using_viral-ngs |

| 2 | VIPER_static_DEL |

| 3 | Data_Nicole6_HEV_Swantje1_blood |

| 4 | Data_Nicole6_HEV_benchmark |

| 5 | Data_Denise_RNASeq_GSE79958 |

| 6 | Data_16S_Leonie_from_Nico_Gaglianis |

| 7 | Fastqs_19-21 |

| 8 | ‘System Volume Information’ |

| 9 | Data_Luise_Epidome_test |

| 10 | Data_Anna_C.acnes_PUBLISHED |

| 11 | Data_Denise_LT_DNA_Bindung |

| 12 | Data_Denise_LT_K331A_RNASeq |

| 13 | Data_Luise_Epidome_batch1 |

| 14 | Data_Luise_Pseudomonas_aeruginosa_PUBLISHED |

| 15 | Data_Luise_Epidome_batch2 |

| 16 | picrust2_out_2024_2 |

| 17 | ‘$RECYCLE.BIN’ |

/media/jhuang/Seagate Expansion Drive(DATA_COPY_FROM_hamburg)

| # | Name |

|---|---|

| 1 | Autorun.inf |

| 2 | Start_Here_Win.exe |

| 3 | Warranty.pdf |

| 4 | Start_Here_Mac.app |

| 5 | Seagate |

| 6 | DATA_COPY_TRANSFER_INCOMPLETE_DEL |

| 7 | DATA_COPY_FROM_hamburg |

/media/jhuang/Seagate Expansion Drive(Seagate_1)

| # | Name |

|---|---|

| 1 | RNA_seq_analysis_tools_2013 |

| 2 | Data_Laura0 |

| 3 | Data_Petra_Arck |

| 4 | Data_Martin_mycoplasma |

| 5 | chromhmm-enhancers |

| 6 | ChromHMM_Dir |

| 7 | Data_Denise_sT_H3K4me3 |

| 8 | Data_Denise_sT_H3K27me3 |

| 9 | Start_Here_Mac.app |

| 10 | Seagate |

| 11 | Data_Nicole16_parapoxvirus |

| 12 | Project_h_rohde_Susanne_WGS_unbiased_DEL.zip |

| 13 | Data_Denise_ChIPSeq_Protocol1 |

| 14 | Data_ENNGS_pathogen_detection_pipeline_comparison |

| 15 | j_huang_201904_202002 |

| 16 | Data_Laura_ChIPseq_GSE120945 |

| 17 | batch_200314_incomplete |

| 18 | m_aepfelbacher.zip |

| 19 | m_error_DEL |

| 20 | batch_200325 |

| 21 | batch_200319 |

| 22 | GAMOLA2_prototyp |

| 23 | Data_Nicola_Gagliani |

| 24 | 2017-18_raw_data |

| 25 | Data_Arck_MeDIP |

| 26 | trimmed |

| 27 | Data_Nicole_16S_Christmas_2020_2 |

| 28 | j_huang_202007_202012 |

| 29 | Data_Nicole_16S_Christmas_2020 |

| 30 | Downloads_2021-01-18_DEL |

| 31 | Data_Laura_plasmid |

| 32 | Data_Laura_16S_2_re |

| 33 | Data_Laura_16S_2 |

| 34 | Data_Laura_16S_2re |

| 35 | Data_Laura_16S_merged |

| 36 | Downloads_DEL |

| 37 | Data_Laura_16S |

| 38 | Data_Anna12_HAPDICS_final |

| 39 | ‘$RECYCLE.BIN’ |

| 40 | ‘System Volume Information’ |

/media/jhuang/Seagate Expansion Drive(Seagate_2)

| # | Name |

|---|---|

| 1 | Data_Nicole4_TH17 |

| 2 | Start_Here_Win.exe |

| 3 | Autorun.inf |

| 4 | Warranty.pdf |

| 5 | Start_Here_Mac.app |

| 6 | Seagate |

| 7 | Data_Denise_RNASeq_trimmed_DEL |

| 8 | HD12 |

| 9 | Qi_panGenome |

| 10 | ALL |

| 11 | fastq_HPI_bw_2019_08_and_2020_02 |

| 12 | f1_R1_link.sh |

| 13 | f1_R2_link.sh |

| 14 | rtpd_files |

| 15 | m_aepfelbacher.zip |

| 16 | Data_Nicole_16S_Hamburg_Odense_Cornell_Muenster |

| 17 | HyAsP_incomplete_genomes |

| 18 | HyAsP_normal_sampled_input |

| 19 | HyAsP_complete_genomes |

| 20 | video.zip |

| 21 | sam2bedgff.pl |

| 22 | HD04.infection.hS_vs_HD04.nose.hS_annotated_degenes.xls |

| 23 | ALL83 |

| 24 | Data_Pietschmann_RSV_Probe_PUBLISHED |

| 25 | HyAsP_normal |

| 26 | Data_Manthey_16S |

| 27 | rtpd_files_DEL |

| 28 | HyAsP_bold |

| 29 | Data_HEV |

| 30 | Seq_VRE_hybridassembly |

| 31 | Data_Anna12_HAPDICS_raw_data_shovill_prokka |

| 32 | Data_Anna_HAPDICS_WGS_ALL |

| 33 | Data_HEV_Freiburg_2020 |

| 34 | Data_Nicole_HDV_Recombination_PUBLISHED |

| 35 | s_hero2x |

| 36 | 201030_M03701_0207_000000000-J57B4.zip |

| 37 | README |

| 38 | ‘README(1)’ |

| 39 | dna2.fasta.fai |

| 40 | 91.pep |

| 41 | 91.orf |

| 42 | 91.orf.fai |

| 43 | dgaston-dec-06-2012-121211124858-phpapp01.pdf |

| 44 | tileshop.fcgi |

| 45 | ppat.1009304.s016.tif |

| 46 | sequence.txt |

| 47 | ‘sequence(1).txt’ |

| 48 | GSE128169_series_matrix.txt.gz |

| 49 | GSE128169_family.soft.gz |

| 50 | Data_Anna_HAPDICS_RNASeq |

| 51 | Data_Christopher_MeDIP_MMc_PUBLISHED |

| 52 | Data_Gunnar_Yersiniomics_IMCOMPLETE_DEL |

| 53 | Data_Denise_RNASeq |

| 54 | ‘System Volume Information’ |

| 55 | ‘$RECYCLE.BIN’ |

/media/jhuang/Elements(An14_RNAs)

| # | Name |

|---|---|

| 1 | Data_Anna10_RP62A |

| 2 | Data_Nicole12_16S_Kluwe_Bunders |

| 3 | chromhmm-enhancers |

| 4 | Data_Denise_sT_Methylation |

| 5 | Data_Denise_LTtrunc_Methylation |

| 6 | Data_16S_arckNov |

| 7 | Data_Tabea_RNASeq |

| 8 | nr_gz_README |

| 9 | j_huang_raw_fq |

| 10 | ‘System Volume Information’ |

| 11 | ‘$RECYCLE.BIN’ |

| 12 | host_refs |

| 13 | Vraw |

| 14 | **Data_Susanne_Amplicon_RdRp_orf1_2 *** |

| 15 | tmp |

| 16 | Data_RNA188_Paul_Becher |

| 17 | Data_ChIPSeq_Laura |

| 18 | Data_16S_arckNov_review_PUBLISHED |

| 19 | Data_16S_arckNov_re |

| 20 | Fastqs |

| 21 | Data_Tabea_RNASeq_submission |

| 22 | Data_Anna_Cutibacterium_acnes_DEL |

| 23 | Data_Silvia_RNASeq_SUBMISSION |

| 24 | Data_Hannes_ChIPSeq |

| 25 | Data_Anna14_RNASeq_to_be_DEL |

| 26 | Data_Pietschmann_RSV_Probe2_PUBLISHED |

| 27 | Data_Holger_Klebsiella_pneumoniae_SNP_PUBLISHING |

| 28 | Data_Anna14_RNASeq_plus_public |

/media/jhuang/Elements(Indra_HAPDICS)

| # | Name |

|---|---|

| 1 | Data_Anna11_Sepdermidis_DEL |

| 2 | HD15_without_10 |

| 3 | HD31 |

| 4 | HD33 |

| 5 | HD39 |

| 6 | HD43 |

| 7 | HD46 |

| 8 | HD15_with_10 |

| 9 | HD26 |

| 10 | HD59 |

| 11 | HD25 |

| 12 | HD21 |

| 13 | HD17 |

| 14 | HD04 |

| 15 | Data_Anna11_Pair1-6_P6 |

| 16 | Data_Anna12_HAPDICS_HyAsP |

| 17 | HAPDICS_hyasp_plasmids |

| 18 | Data_Anna_HAPDICS_review |

| 19 | data_overview.txt |

| 20 | align_assem_res_DEL |

| 21 | ‘System Volume Information’ |

| 22 | EXCHANGE_DEL |

| 23 | Data_Indra_H3K4me3_public |

| 24 | Data_Gunnar_MS |

| 25 | ‘$RECYCLE.BIN’ |

| 26 | UKE_DELLWorkstation_C_Users_indbe_Desktop |

| 27 | Linux_DELLWorkstation_C_Users_indbe_VirtualBoxVMs |

| 28 | Data_Anna_HAPDICS_RNASeq_rawdata |

| 29 | Data_Indra_H3K27ac_public |

| 30 | Data_Holger_Klebsiella_pneumoniae_SNP_PUBLISHING |

| 31 | DATA_INDRA_RNASEQ |

| 32 | DATA_INDRA_CHIPSEQ |

/media/jhuang/Elements(jhuang_*)

| # | Name |

|---|---|

| 1 | ‘Install Western Digital Software for Windows.exe’ |

| 2 | ‘Install Western Digital Software for Mac.dmg’ |

| 3 | ‘System Volume Information’ |

| 4 | ‘$RECYCLE.BIN’ |

| 5 | 20250203_FS10003086_95_BTR67811-0621 |

/media/jhuang/Smarty

| # | Name |

|---|---|

| 1 | lost+found |

| 2 | Blast_db |

| 3 | temporary_files_DEL |

| 4 | ALIGN_ASSEM |

| 5 | Data_Paul_Staphylococcus_epidermidis |

| 6 | Data_16S_Degenhardt_Marius_DEL |

| 7 | Data_Gunnar_Yersiniomics_DEL |

| 8 | Data_Manja_RNAseq_Organoids_Virus |

| 9 | Data_Emilia_MeDIP |

| 10 | DjangoApp_Backup_2023-10-30 |

| 11 | ref |

| 12 | Data_Michelle_RNAseq_2025_raw_data_DEL_AFTER_UPLOAD_GEO |

Original input (as one point)

/media/jhuang/INTENSO is empty --> Now the data are on ~/DATA_Intenso

/dev/sdg1 3,7T 512K 3,7T 1% /media/jhuang/INTENSO

jhuang@WS-2290C:~/DATA$ ls -tlrh

total 1,6M

drwxrwxrwx 6 jhuang jhuang 4,0K Okt 26 2022 Data_Ute_MKL1

drwxrwxrwx 8 jhuang jhuang 4,0K Jan 13 2023 Data_Ute_RNA_4_2022-11_test

drwxrwxr-x 7 jhuang jhuang 4,0K Mär 8 2023 Data_Ute_RNA_3

drwxr-xr-x 11 jhuang jhuang 4,0K Dez 19 2023 Data_Susanne_Carotis_RNASeq_PUBLISHING

drwxr-xr-x 21 jhuang jhuang 4,0K Jun 18 2024 Data_Jiline_Yersinia_SNP

drwxrwxr-x 5 jhuang jhuang 4,0K Jul 22 2024 Data_Tam_ABAYE_RS05070_on_A_calcoaceticus_baumannii_complex_DUPLICATED_DEL

drwxr-xr-x 2 jhuang jhuang 4,0K Jul 23 2024 Data_Nicole_CRC1648

drwxr-xr-x 4 jhuang jhuang 4,0K Sep 6 2024 Mouse_HS3ST1_12373_out

drwxr-xr-x 4 jhuang jhuang 4,0K Sep 6 2024 Mouse_HS3ST1_12175_out

drwxrwxr-x 10 jhuang jhuang 4,0K Sep 12 2024 Data_Biobakery

drwxrwxr-x 6 jhuang jhuang 4,0K Sep 23 2024 Data_Xiaobo_10x_2

drwxr-xr-x 4 jhuang jhuang 4,0K Sep 23 2024 Data_Xiaobo_10x_3

drwxr-xr-x 3 jhuang jhuang 4,0K Sep 26 2024 Talk_Nicole_CRC1648

drwxr-xr-x 2 jhuang jhuang 4,0K Sep 26 2024 Talks_Bioinformatics_Meeting

drwxr-xr-x 2 jhuang jhuang 12K Sep 26 2024 Talks_resources

drwxrwxr-x 6 jhuang jhuang 12K Okt 10 2024 Data_Susanne_MPox_DAMIAN

drwxrwxr-x 3 jhuang jhuang 4,0K Okt 14 2024 Data_host_transcriptional_response

drwxr-xr-x 13 jhuang jhuang 4,0K Okt 23 2024 Talks_including_DEEP-DV

drwxrwxr-x 2 jhuang jhuang 4,0K Okt 24 2024 DOKTORARBEIT

drwxrwxr-x 18 jhuang jhuang 4,0K Nov 11 2024 Data_Susanne_MPox

drwxrwxr-x 25 jhuang jhuang 12K Nov 11 2024 Data_Jiline_Transposon

drwxrwxr-x 16 jhuang jhuang 20K Nov 25 2024 Data_Jiline_Transposon2

drwxrwxr-x 3 jhuang jhuang 4,0K Dez 13 2024 Data_Matlab

drwxrwxr-x 5 jhuang jhuang 4,0K Jan 28 2025 deepseek-ai

drwx------ 4 jhuang jhuang 4,0K Feb 5 2025 Stick_Mi_DEL

-rw-rw-r-- 1 jhuang jhuang 1,1K Feb 18 2025 TODO_shares

drwxrwxrwx 13 jhuang jhuang 4,0K Mär 3 2025 Data_Ute_RNA_4

drwxrwxr-x 2 jhuang jhuang 4,0K Mär 31 2025 Data_Liu_PCA_plot

-rw-rw-r-- 1 jhuang jhuang 43K Apr 3 2025 README_run_viral-ngs_inside_Docker

-rw-rw-r-- 1 jhuang jhuang 8,7K Apr 9 2025 README_compare_genomes

-rw-rw-r-- 1 jhuang jhuang 0 Apr 11 2025 mapped.bam

drwxrwxr-x 3 jhuang jhuang 4,0K Apr 24 2025 Data_Serpapi

drwxrwxrwx 22 jhuang jhuang 4,0K Apr 30 2025 Data_Ute_RNA_1_2

drwxrwxr-x 15 jhuang jhuang 4,0K Apr 30 2025 Data_Marc_RNAseq_2024

drwxrwxr-x 45 jhuang jhuang 12K Mai 15 2025 Data_Nicole_CaptureProbeSequencing

-rw-rw-r-- 1 jhuang jhuang 657 Mai 23 2025 LOG_mapping

drwxrwxr-x 46 jhuang jhuang 4,0K Mai 26 2025 Data_Huang_Human_herpesvirus_3

drwxrwxr-x 8 jhuang jhuang 4,0K Jun 13 2025 Data_Nicole_DAMIAN_Post-processing_Pathoprobe_FluB_Links

lrwxrwxrwx 1 jhuang jhuang 37 Jun 16 2025 Access_to_Win7 -> ./Data_Marius_16S/picrust2_out_2024_2

drwxrwxr-x 17 jhuang jhuang 4,0K Jun 18 2025 Data_DAMIAN_Post-processing_Flavivirus_and_FSME_and_Haemophilus

drwxr-xr-x 42 jhuang jhuang 36K Jun 23 2025 Data_Luise_Sepi_STKN

drwxrwxr-x 29 jhuang jhuang 20K Jul 22 2025 Data_Patricia_Sepi_7samples

drwxr-xr-x 9 jhuang jhuang 4,0K Aug 8 2025 Data_Soeren_2025_PUBLISHING

drwxrwxr-x 9 jhuang jhuang 4,0K Aug 13 2025 Data_Ben_RNAseq_2025

drwxrwxr-x 34 jhuang jhuang 12K Sep 3 12:18 Data_Tam_DNAseq_2025_AYE-WT_Q_S_craA-Tig4_craA-1-Cm200_craA-2-Cm200

drwxrwxr-x 50 jhuang jhuang 16K Okt 6 17:59 Data_Patricia_Transposon

drwxrwxr-x 23 jhuang jhuang 12K Okt 20 13:27 Data_Patricia_Transposon_2025

drwxrwxr-x 2 jhuang jhuang 4,0K Okt 23 12:21 Colocation_Space

drwxrwxr-x 2 jhuang jhuang 4,0K Okt 27 12:56 Data_Tam_Methylation_2025_empty

-rw-rw-r-- 1 jhuang jhuang 151K Nov 3 13:01 2025-11-03_eVB-Schreiben_12-57.pdf

-rw-rw-r-- 1 jhuang jhuang 67K Nov 5 16:59 DEGs_Group1_A1-A3+A8-A10_vs_Group2_B10-B16.png

-rw-rw-r-- 1 jhuang jhuang 687K Nov 14 09:55 README.pdf

drwxrwxr-x 2 jhuang jhuang 4,0K Nov 24 15:43 Data_Hannes_JCM00612

drwxrwxr-x 3 jhuang jhuang 4,0K Dez 4 17:03 167_redundant_DEL

drwxrwxr-x 2 jhuang jhuang 4,0K Dez 8 10:33 Lehre_Bioinformatik

drwxrwxr-x 27 jhuang jhuang 12K Dez 8 11:29 Data_Ben_Boruta_Analysis

drwxrwxr-x 18 jhuang jhuang 4,0K Dez 8 17:39 Data_Childrensclinic_16S_2025_DEL

drwxrwxr-x 2 jhuang jhuang 4,0K Dez 10 10:05 Data_Ben_Mycobacterium_pseudoscrofulaceum

-rw-rw-r-- 1 jhuang jhuang 8,9K Dez 15 12:42 Foong_RNA_mSystems_Huang_Changed.txt

drwxrwxr-x 22 jhuang jhuang 4,0K Dez 17 13:07 Data_Pietro_Scatturo_and_Charlotte_Uetrecht_16S_2025

drwxrwxr-x 8 jhuang jhuang 4,0K Dez 18 10:45 Data_JuliaBerger_RNASeq_SARS-CoV-2

drwxrwxr-x 19 jhuang jhuang 4,0K Jan 3 17:42 Data_PaulBongarts_S.epidermidis_HDRNA

lrwxrwxrwx 1 jhuang jhuang 31 Jan 12 14:30 Data_Ute -> /media/jhuang/Elements/Data_Ute

drwxrwxr-x 12 jhuang jhuang 4,0K Jan 16 12:44 Data_Foong_DNAseq_2025_AYE_Dark_vs_Light_TODO

drwxrwxrwx 22 jhuang jhuang 4,0K Jan 16 12:48 Data_Foong_RNAseq_2021_ATCC19606_Cm

drwxrwxr-x 2 jhuang jhuang 4,0K Jan 16 13:02 Data_Tam_Funding

drwxrwxr-x 9 jhuang jhuang 4,0K Jan 16 13:32 Data_Tam_RNAseq_2025_LB-AB_IJ_W1_Y1_WT_vs_Mac-AB_IJ_W1_Y1_WT_on_ATCC19606

drwxrwxr-x 12 jhuang jhuang 4,0K Jan 16 13:32 Data_Tam_RNAseq_2025_subMIC_exposure_on_ATCC19606

-rw-rw-r-- 1 jhuang jhuang 1,2K Jan 16 13:34 Data_Tam.txt

drwxrwxr-x 16 jhuang jhuang 4,0K Jan 16 13:37 Data_Tam_RNAseq_2024_AUM_MHB_Urine_on_ATCC19606

drwxrwxr-x 10 jhuang jhuang 4,0K Jan 16 18:22 Data_Tam_Metagenomics_2026

drwxrwxr-x 6 jhuang jhuang 16K Jan 23 16:35 Data_Michelle

drwxrwxr-x 38 jhuang jhuang 12K Jan 28 15:20 Data_Nicole_16S_2025_Childrensclinic

drwxr-xr-x 145 jhuang jhuang 36K Jan 29 10:49 Data_Sophie_HDV_Sequences

drwxrwxr-x 4 jhuang jhuang 4,0K Jan 30 11:44 Data_Tam_DNAseq_2026_19606deltaIJfluE

-rw-rw-r-- 1 jhuang jhuang 63K Jan 30 17:53 README_nf-core

drwxrwxr-x 22 jhuang jhuang 4,0K Feb 4 10:43 Data_Vero_Kymographs

drwxrwxr-x 13 jhuang jhuang 4,0K Feb 4 14:06 Access_to_Win10

drwxrwxr-x 7 jhuang jhuang 4,0K Feb 5 11:59 Data_Patricia_AMRFinderPlus_2025

drwxrwxr-x 45 jhuang jhuang 4,0K Feb 6 11:54 Data_Tam_DNAseq_2025_Unknown-adeABadeIJ_adeIJK_CM1_CM2

drwxrwxr-x 41 jhuang jhuang 12K Feb 9 15:11 Data_Damian

drwxrwxr-x 6 jhuang jhuang 4,0K Feb 13 12:48 Data_Karoline_16S

drwxrwxr-x 13 jhuang jhuang 12K Feb 13 18:09 Data_JuliaFuchs_RNAseq_2025

drwxrwxr-x 18 jhuang jhuang 4,0K Feb 16 11:19 Data_Tam_DNAseq_2025_ATCC19606-Y1Y2Y3Y4W1W2W3W4_TODO

drwxrwxr-x 34 jhuang jhuang 4,0K Feb 16 15:54 Data_Tam_DNAseq_2026_Acinetobacter_harbinensis

drwxrwxr-x 19 jhuang jhuang 4,0K Feb 16 17:13 Data_Benjamin_DNAseq_2026_GE11174

drwxrwxrwx 36 jhuang jhuang 12K Feb 17 15:02 Data_Susanne_spatialRNA_2022.9.1_backup

drwxrwxr-x 39 jhuang jhuang 12K Feb 17 15:12 Data_Susanne_spatialRNA

jhuang@WS-2290C:~/DATA_A$ ls -ltrh

total 24K

drwxr-xr-x 7 jhuang jhuang 4,0K Jun 18 2024 Data_Damian_NEW_CREATED

drwxr-xr-x 2 jhuang jhuang 4,0K Jun 18 2024 Data_R_bubbleplots

drwxr-xr-x 16 jhuang jhuang 4,0K Jun 18 2024 Data_Ute_TRANSFERED_DEL

drwxr-xr-x 2 jhuang jhuang 4,0K Okt 7 2024 Paper_Target_capture_sequencing_MHH_PUBLISHED

drwxr-xr-x 20 jhuang jhuang 4,0K Okt 8 2024 Data_Nicole8_Lamprecht_new_PUBLISHED

drwxrwxrwx 8 jhuang jhuang 4,0K Mai 21 2025 Data_Samira_RNAseq

jhuang@WS-2290C:~/DATA_B$ ls -tlrh

total 136K

drwxr-xr-x 3 jhuang jhuang 4,0K Jun 18 2024 Data_DAMIAN_endocarditis_encephalitis

drwxr-xr-x 8 jhuang jhuang 4,0K Jun 18 2024 Data_Denise_sT_PUBLISHING

drwxr-xr-x 12 jhuang jhuang 4,0K Jun 18 2024 Data_Fran2_16S_func

drwxr-xr-x 2 jhuang jhuang 4,0K Jun 18 2024 Data_Holger_5179-R1_vs_5179

drwxr-xr-x 16 jhuang jhuang 4,0K Jun 18 2024 Antraege_

drwxr-xr-x 18 jhuang jhuang 4,0K Jun 18 2024 Data_16S_Nicole_210222

drwxr-xr-x 6 jhuang jhuang 4,0K Jun 18 2024 Data_Adam_Influenza_A_virus

drwxr-xr-x 14 jhuang jhuang 12K Jun 18 2024 Data_Anna_Efaecium_assembly

drwxr-xr-x 2 jhuang jhuang 4,0K Jun 18 2024 Data_Bactopia

drwxr-xr-x 5 jhuang jhuang 4,0K Jun 18 2024 Data_Ben_RNAseq

drwxr-xr-x 7 jhuang jhuang 4,0K Jun 18 2024 Data_Johannes_PIV3

drwxr-xr-x 19 jhuang jhuang 4,0K Jun 18 2024 Data_Luise_Epidome_longitudinal_nose

drwxr-xr-x 6 jhuang jhuang 4,0K Jun 18 2024 Data_Manja_Hannes_Probedesign

drwxr-xr-x 2 jhuang jhuang 4,0K Jun 18 2024 Data_Marc_AD_PUBLISHING

drwxr-xr-x 2 jhuang jhuang 4,0K Jun 18 2024 Data_Marc_RNA-seq_Saureus_Review

drwxr-xr-x 17 jhuang jhuang 4,0K Jun 18 2024 Data_Nicole_16S

drwxr-xr-x 3 jhuang jhuang 4,0K Jun 18 2024 Data_Nicole_cfDNA_pathogens

drwxr-xr-x 16 jhuang jhuang 4,0K Jun 18 2024 Data_Ring_and_CSF_PegivirusC_DAMIAN

drwxr-xr-x 4 jhuang jhuang 4,0K Jun 18 2024 Data_Song_Microarray

drwxr-xr-x 11 jhuang jhuang 4,0K Jun 18 2024 Data_Susanne_Omnikron

drwxr-xr-x 3 jhuang jhuang 4,0K Jun 18 2024 Data_Viro

drwxr-xr-x 2 jhuang jhuang 4,0K Jun 18 2024 Doktorarbeit

drwxr-xr-x 2 jhuang jhuang 4,0K Jun 18 2024 Poster_Rohde_20230724

drwxr-xr-x 6 jhuang jhuang 4,0K Jul 12 2024 Data_Django

drwxr-xr-x 35 jhuang jhuang 4,0K Okt 21 2024 Data_Holger_S.epidermidis_1585_5179_HD05

drwxr-xr-x 9 jhuang jhuang 4,0K Nov 18 2024 Data_Manja_RNAseq_Organoids_Virus

drwxr-xr-x 2 jhuang jhuang 4,0K Feb 21 2025 Data_Holger_MT880870_MT880872_Annotation

drwxr-xr-x 12 jhuang jhuang 4,0K Apr 8 2025 Data_Soeren_RNA-seq_2022

drwxr-xr-x 5 jhuang jhuang 4,0K Apr 11 2025 Data_Manja_RNAseq_Organoids_Merged

drwxr-xr-x 24 jhuang jhuang 4,0K Apr 25 2025 Data_Gunnar_Yersiniomics

drwxr-xr-x 10 jhuang jhuang 4,0K Jan 16 17:14 Data_Manja_RNAseq_Organoids

drwxr-xr-x 3 jhuang jhuang 4,0K Feb 17 12:11 Data_Susanne_Carotis_MS

jhuang@WS-2290C:~/DATA_C$ ls -tlrh

total 13G

-rwxr-xr-x 1 jhuang jhuang 1,7M Jun 18 2024 2022-10-27_IRI_manuscript_v03_JH.docx

-rwxr-xr-x 1 jhuang jhuang 7,1K Jun 18 2024 16304905.fasta

-rwxr-xr-x 1 jhuang jhuang 55K Jun 18 2024 '16S data manuscript_NF.docx'

-rwxr-xr-x 1 jhuang jhuang 792K Jun 18 2024 180820_2_supp_4265595_sw6zjk.docx

-rwxr-xr-x 1 jhuang jhuang 17K Jun 18 2024 180820_2_supp_4265596_sw6zjk.docx

-rwxr-xr-x 1 jhuang jhuang 12K Jun 18 2024 1a_vs_3.csv

-rwxr-xr-x 1 jhuang jhuang 90K Jun 18 2024 '2.05.01.05-A01 Urlaubsantrag-Shuting-beantragt.pdf'

-rwxr-xr-x 1 jhuang jhuang 708K Jun 18 2024 2014SawickaBBA.pdf

-rwxr-xr-x 1 jhuang jhuang 61K Jun 18 2024 20160509Manuscript_NDM_OXA_mitKomm.doc

-rwxr-xr-x 1 jhuang jhuang 289K Jun 18 2024 220607_Agenda_monthly_meeting.pdf

-rwxr-xr-x 1 jhuang jhuang 14K Jun 18 2024 '20221129 Table mutations.docx'

-rwxr-xr-x 1 jhuang jhuang 12G Jun 18 2024 230602_NB501882_0428_AHKG53BGXT.zip

-rwxr-xr-x 1 jhuang jhuang 107K Jun 18 2024 362383173.rar

-rwxr-xr-x 1 jhuang jhuang 128K Jun 18 2024 562.9459.1.fa

-rwxr-xr-x 1 jhuang jhuang 126K Jun 18 2024 562.9459.1_rc.fa

-rwxr-xr-x 1 jhuang jhuang 1,6M Jun 18 2024 ASA3P.pdf

-rwxr-xr-x 1 jhuang jhuang 21K Jun 18 2024 All_indels_annotated_vHR.xlsx

-rwxr-xr-x 1 jhuang jhuang 11K Jun 18 2024 'Amplikon_indeces_Susanne +groups.xlsx'

-rwxr-xr-x 1 jhuang jhuang 9,6K Jun 18 2024 Amplikon_indeces_Susanne.xlsx

-rwxr-xr-x 1 jhuang jhuang 68 Jun 18 2024 GAMOLA2

-rwxr-xr-x 1 jhuang jhuang 88 Jun 18 2024 Data_Susanne_Carotis_spatialRNA_PUBLISHING

-rwxr-xr-x 1 jhuang jhuang 112 Jun 18 2024 Data_Paul_Staphylococcus_epidermidis

-rwxr-xr-x 1 jhuang jhuang 118 Jun 18 2024 Data_Nicola_Schaltenberg_PICRUSt

-rwxr-xr-x 1 jhuang jhuang 100 Jun 18 2024 Data_Nicola_Schaltenberg

-rwxr-xr-x 1 jhuang jhuang 94 Jun 18 2024 Data_Nicola_Gagliani

-rwxr-xr-x 1 jhuang jhuang 96 Jun 18 2024 Data_methylome_MMc

-rwxr-xr-x 1 jhuang jhuang 78 Jun 18 2024 Data_Jingang

-rwxr-xr-x 1 jhuang jhuang 112 Jun 18 2024 Data_Indra_RNASeq_GSM2262901

-rwxr-xr-x 1 jhuang jhuang 84 Jun 18 2024 Data_Holger_VRE

-rwxr-xr-x 1 jhuang jhuang 128 Jun 18 2024 Data_Holger_Pseudomonas_aeruginosa_SNP

-rwxr-xr-x 1 jhuang jhuang 92 Jun 18 2024 Data_Hannes_ChIPSeq

-rwxr-xr-x 1 jhuang jhuang 76 Jun 18 2024 Data_Emilia_MeDIP

-rwxr-xr-x 1 jhuang jhuang 88 Jun 18 2024 Data_ChristophFR_HepE_published

-rwxr-xr-x 1 jhuang jhuang 158 Jun 18 2024 Data_Christopher_MeDIP_MMc_published

-rwxr-xr-x 1 jhuang jhuang 104 Jun 18 2024 Data_Anna_Kieler_Sepi_Staemme

-rwxr-xr-x 1 jhuang jhuang 136 Jun 18 2024 Data_Anna12_HAPDICS_final

-rwxr-xr-x 1 jhuang jhuang 96 Jun 18 2024 Data_Anastasia_RNASeq_PUBLISHING

-rwxr-xr-x 1 jhuang jhuang 169K Jun 18 2024 Aufnahmeantrag_komplett_10_2022.pdf

-rwxr-xr-x 1 jhuang jhuang 1,2M Jun 18 2024 Astrovirus.pdf

-rwxr-xr-x 1 jhuang jhuang 732 Jun 18 2024 COMMANDS

-rwxr-xr-x 1 jhuang jhuang 690 Jun 18 2024 Bacterial_pipelines.txt

-rwxr-xr-x 1 jhuang jhuang 16M Jun 18 2024 COMPSRA_uke_DEL.jar

-rwxr-xr-x 1 jhuang jhuang 239K Jun 18 2024 ChIPSeq_pipeline_desc.docx

-rwxr-xr-x 1 jhuang jhuang 385K Jun 18 2024 ChIPSeq_pipeline_desc.pdf

-rwxr-xr-x 1 jhuang jhuang 2,1M Jun 18 2024 Comparative_genomic_analysis_of_eight_novel_haloal.pdf

-rwxr-xr-x 1 jhuang jhuang 64K Jun 18 2024 CvO_Klassenliste_7_3.pdf

-rwxr-xr-x 1 jhuang jhuang 649K Jun 18 2024 'Copy of pool_b1_CGATGT_300.xlsx'

-rwxr-xr-x 1 jhuang jhuang 3,9K Jun 18 2024 Fran_16S_Exp8-17-21-27.txt

-rwxr-xr-x 1 jhuang jhuang 463 Jun 18 2024 HPI_DRIVE

-rwxr-xr-x 1 jhuang jhuang 179K Jun 18 2024 HEV_aligned.fasta

-rwxr-xr-x 1 jhuang jhuang 4,1K Jun 18 2024 INTENSO_DIR

-rwxr-xr-x 1 jhuang jhuang 14K Jun 18 2024 HPI_samples_for_NGS_29.09.22.xlsx

-rwxr-xr-x 1 jhuang jhuang 4,3K Jun 18 2024 Hotmail_to_Gmail

-rwxr-xr-x 1 jhuang jhuang 13M Jun 18 2024 Indra_Thesis_161020.pdf

-rwxr-xr-x 1 jhuang jhuang 5,2K Jun 18 2024 'LT K331A.gbk'

-rwxr-xr-x 1 jhuang jhuang 0 Jun 18 2024 LOG_p954_stat

-rwxr-xr-x 1 jhuang jhuang 684K Jun 18 2024 LOG

-rwxr-xr-x 1 jhuang jhuang 197K Jun 18 2024 Manuscript_10_02_2021.docx

-rwxr-xr-x 1 jhuang jhuang 595K Jun 18 2024 Metagenomics_Tools_and_Insights.pdf

-rwxr-xr-x 1 jhuang jhuang 14K Jun 18 2024 'Miseq Amplikon LAuf April.xlsx'

-rwxr-xr-x 1 jhuang jhuang 2,2M Jun 18 2024 NGS.tar.gz

-rwxr-xr-x 1 jhuang jhuang 586K Jun 18 2024 Nachweis_Bakterien_Viren_im_Hochdurchsatz.pdf

-rwxr-xr-x 1 jhuang jhuang 1,2K Jun 18 2024 Nicole8_Lamprecht_logs

-rwxr-xr-x 1 jhuang jhuang 24M Jun 18 2024 Nanopore.handouts.pdf

-rwxr-xr-x 1 jhuang jhuang 113K Jun 18 2024 'Norovirus paper Susanne 191105.docx'

-rwxr-xr-x 1 jhuang jhuang 503K Jun 18 2024 PhyloRNAalifold.pdf

-rwxr-xr-x 1 jhuang jhuang 19K Jun 18 2024 README_R

-rwxr-xr-x 1 jhuang jhuang 137K Jun 18 2024 README_RNAHiSwitch_DEL

-rwxr-xr-x 1 jhuang jhuang 8,3M Jun 18 2024 RNA-NGS_Analysis_modul3_NanoStringNorm.zip

-rwxr-xr-x 1 jhuang jhuang 57K Jun 18 2024 RNAConSLOptV1.2.tar.gz

-rwxr-xr-x 1 jhuang jhuang 17K Jun 18 2024 'RSV GFP5 including 3`UTR.docx'

-rwxr-xr-x 1 jhuang jhuang 238 Jun 18 2024 SNPs_on_pangenome.txt

-rwxr-xr-x 1 jhuang jhuang 55 Jun 18 2024 SERVER

-rwxr-xr-x 1 jhuang jhuang 26M Jun 18 2024 R_tutorials-master.zip

-rwxr-xr-x 1 jhuang jhuang 182K Jun 18 2024 Rawdata_Readme.pdf

-rwxr-xr-x 1 jhuang jhuang 40K Jun 18 2024 SUB10826945_record_preview.txt

-rwxr-xr-x 1 jhuang jhuang 283K Jun 18 2024 S_staphylococcus_annotated_diff_expr.xls

-rwxr-xr-x 1 jhuang jhuang 2,0K Jun 18 2024 Snakefile_list

-rwxr-xr-x 1 jhuang jhuang 160K Jun 18 2024 Source_Classification_Code.rds

-rwxr-xr-x 1 jhuang jhuang 61K Jun 18 2024 Supplementary_Table_S3.xlsx

-rwxr-xr-x 1 jhuang jhuang 617 Jun 18 2024 Untitled.ipynb

-rwxr-xr-x 1 jhuang jhuang 127M Jun 18 2024 UniproUGENE_UserManual.pdf

-rwxr-xr-x 1 jhuang jhuang 14M Jun 18 2024 Untitled1.ipynb

-rwxr-xr-x 1 jhuang jhuang 110K Jun 18 2024 Untitled2.ipynb

-rwxr-xr-x 1 jhuang jhuang 2,9K Jun 18 2024 Untitled3.ipynb

-rwxr-xr-x 1 jhuang jhuang 18K Jun 18 2024 WAC6h_vs_WAP6h_down.txt

-rwxr-xr-x 1 jhuang jhuang 100 Jun 18 2024 damian_nodbs

-rwxr-xr-x 1 jhuang jhuang 45K Jun 18 2024 WAC6h_vs_WAP6h_up.txt

-rwxr-xr-x 1 jhuang jhuang 635K Jun 18 2024 'add. Figures Hamburg_UKE.pptx'

-rwxr-xr-x 1 jhuang jhuang 3,7M Jun 18 2024 all_gene_counts_with_annotation.xlsx

-rwxr-xr-x 1 jhuang jhuang 22K Jun 18 2024 app_flask.py

-rwxr-xr-x 1 jhuang jhuang 1,8K Jun 18 2024 bengal-bay-0.1.json

-rwxr-xr-x 1 jhuang jhuang 16K Jun 18 2024 bengal3_ac3.yml

-rwxr-xr-x 1 jhuang jhuang 246K Jun 18 2024 call_shell_from_Ruby.png

-rwxr-xr-x 1 jhuang jhuang 8,1K Jun 18 2024 bengal3_ac3_.yml

-rwxr-xr-x 1 jhuang jhuang 12 Jun 18 2024 empty.fasta

-rwxr-xr-x 1 jhuang jhuang 32K Jun 18 2024 coefficients_csaw_vs_diffreps.xlsx

-rwxr-xr-x 1 jhuang jhuang 4,3K Jun 18 2024 exchange.txt