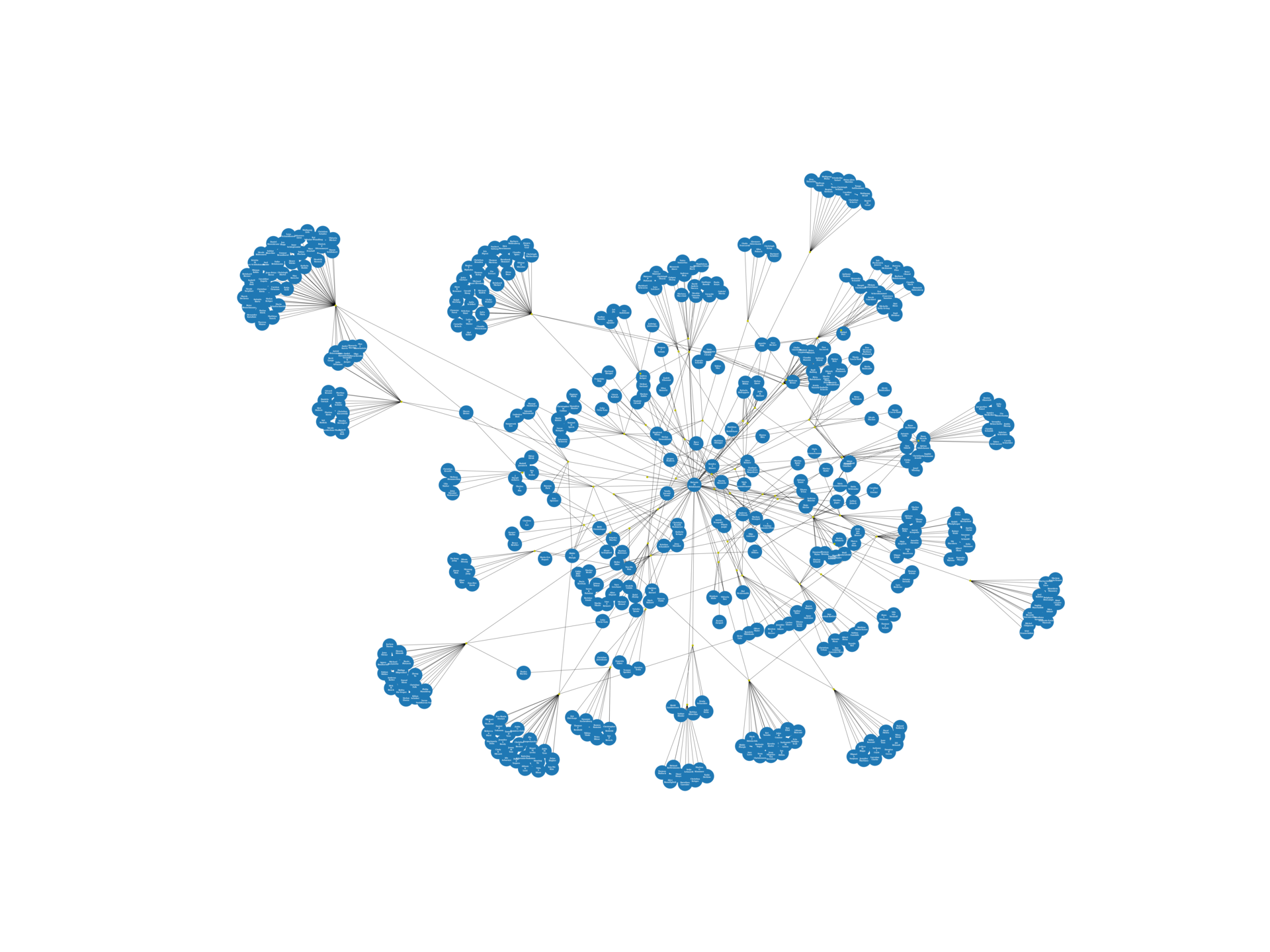

Co-Authorship Network Generator Using Data Scraped from Google Scholar via SerpAPI

gene_x

Tags: processing, pipeline


Main script: coauthorship_network.py

```python
import re

import bibtexparser          # bibtexparser 1.x API (bibtexparser.load)
import matplotlib.pyplot as plt
import networkx as nx

# Generate the input file and roughly count its records:
#   python3 get_articles_with_serpapi.py > articles_Brinkmann.txt
#   grep -o 'title' articles_Brinkmann.txt | wc -l

# Helper function to clean special characters from strings (calls are commented out below)
def clean_string(s):
    s = s.replace('.', '')                # Remove periods
    s = re.sub(r'[{}]', '', s)            # Remove curly braces used in BibTeX formatting
    s = re.sub(r'\\[a-zA-Z]+', '', s)     # Remove LaTeX commands (e.g., \textcopyright)
    return ' '.join([n for n in s.split(' ') if len(n) > 1])  # Remove single-letter names

# Load the post-processed .bib-style file
with open('articles_Brinkmann.txt', 'r') as bibtex_file:
    bib_database = bibtexparser.load(bibtex_file)
print(len(bib_database.entries))

# Create an empty graph
G = nx.Graph()

#internal_authors = ["L Redecke", "T Schulz", "M Brinkmann"]

# Collect every author that appears in the file
internal_authors = set()
for entry in bib_database.entries:
    # The hand-edited 'authors' field is comma-separated (standard BibTeX would use 'and');
    # strip whitespace and drop empty names
    authors = [a.strip() for a in entry.get('authors', '').split(',') if a.strip()]
    #cleaned_authors = [clean_string(author) for author in authors]
    print(f"Authors: {authors}")
    internal_authors.update(authors)

# internal_authors stays a set, so the membership tests below are fast
print(f"Internal Authors: {sorted(internal_authors)}")

# Build a bipartite graph: one edge per (author, publication title) pair
for entry in bib_database.entries:
    # ':' was turned into '=' during post-processing; blank it out in titles
    title = entry.get('title', '').replace('=', ' ')
    #title = clean_string(f"Paper/{title}")
    authors = [a.strip() for a in entry.get('authors', '').split(',') if a.strip()]
    for author in authors:
        #author = clean_string(f"Author/{author}")
        if author in internal_authors:
            print(author)
            G.add_edge(author, title)

# Layout: try Graphviz 'sfdp' (needs pydot + Graphviz), fall back to a spring layout
#try:
#    pos = nx.nx_pydot.graphviz_layout(G, prog='sfdp')
#except AssertionError:
#    print("Error with sfdp layout, switching to 'dot' layout.")
#    pos = nx.nx_pydot.graphviz_layout(G, prog='dot')  # Fallback to 'dot'
try:
    pos = nx.nx_pydot.graphviz_layout(G, prog='sfdp')
except Exception as e:
    print("Error generating layout:", e)
    pos = nx.spring_layout(G)  # Fallback to another layout

# Store an underscore-sanitized 'label' attribute per node (not used by the matplotlib calls below)
for node in G.nodes():
    if isinstance(node, str):
        G.nodes[node]['label'] = node.replace(' ', '_').replace('/', '_')
print("Nodes in the graph:", G.nodes())

# Determine maximum name length for author nodes (used to size them)
max_len = max((len(n) for n in G.nodes() if n in internal_authors), default=0)
print(max_len)

plt.figure(figsize=(96, 72))  # very large canvas (inches) so all labels fit

# Plot nodes for authors
nx.draw_networkx_nodes(G, pos,
                       #nodelist=[n for n in G.nodes() if n.startswith('Author/')],
                       nodelist=[n for n in G.nodes() if n in internal_authors],
                       node_size=max_len * 200)
# Plot nodes for publications
nx.draw_networkx_nodes(G, pos,
                       nodelist=[n for n in G.nodes() if n not in internal_authors],
                       node_color='y',
                       node_size=100)
nx.draw_networkx_edges(G, pos)
# Label author nodes only, one word per line
nx.draw_networkx_labels(G, pos,
                        labels={n: n.split('/')[-1].replace(' ', '\n')
                                for n in G.nodes() if n in internal_authors},
                        font_color='w', font_size=10, font_weight='bold',
                        font_family='serif')
# 'serif' uses a serif typeface (e.g., Times New Roman). Other font_family options:
# 'sans-serif' (e.g., Helvetica, Arial), 'monospace' (e.g., Courier New),
# 'DejaVu Sans', 'Arial', 'Times New Roman', 'Comic Sans MS'.

plt.axis('off')
# Save the plot as a PNG file (add bbox_inches="tight" to trim the margins)
plt.savefig("co_author_network.png", format="png")
# Optional crop with ImageMagick:
#   convert Figure_2.png -crop 1340x750+315+135 co_author_network_cropped_2.png
plt.show()

# Count the number of publications and authors
publications = [n for n in G.nodes() if n not in internal_authors]

# Print the counts (original run: 68 publications, 476 authors)
print(f"Number of Publications: {len(publications)}")
print(f"Number of Authors: {len(internal_authors)}")

## Optionally, print out the publications and authors themselves
#print(f"Publications: {publications}")
print(f"Authors: {internal_authors}")
```
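The script above draws a bipartite graph. Author nodes link to publication-title nodes, so two authors are co-authors exactly when they share a title node. The sketch below collapses that into author-author pairs, weighted by the number of shared papers. It uses only the standard library. The function name `coauthor_weights` and its `(title, authors)` input format are assumptions made for illustration, not part of the original script.

```python
from collections import Counter
from itertools import combinations


def coauthor_weights(entries):
    """Count shared papers for every pair of authors.

    `entries` is an iterable of (title, authors) pairs, where `authors` is the
    comma-separated string stored in the hand-edited `authors=` field
    (hypothetical input format for this sketch).
    """
    weights = Counter()
    for _title, author_field in entries:
        # Split, trim, drop empty names, and de-duplicate within one paper
        authors = sorted({a.strip() for a in author_field.split(",") if a.strip()})
        # Every unordered pair of authors on the same paper counts as one co-authorship
        for pair in combinations(authors, 2):
            weights[pair] += 1
    return weights


if __name__ == "__main__":
    demo = [
        ("Paper A", "Author One, Author Two, Author Three"),
        ("Paper B", "Author Two, Author Three"),
    ]
    for (a, b), n in coauthor_weights(demo).most_common():
        print(f"{a} -- {b}: {n}")
```

Each pair could then be added with `G.add_edge(a, b, weight=n)` to draw a pure co-author network. networkx also provides `bipartite.weighted_projected_graph`, which performs the same projection directly on the bipartite graph.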

Code of get_articles_with_serpapi.py:

```python
from serpapi import GoogleSearch
# pip install google-search-results
# https://github.com/serpapi/google-search-results-python
# https://serpapi.com/google-scholar-author-co-authors
# We are able to extract: name, link, author_id, affiliations, email, and thumbnail results.

params = {
    "engine": "google_scholar_author",
    "author_id": "5AzhtgUAAAAJ",
    "api_key": "ed",
    "num": 100
}

#-- For each publication, view complete citation statistics per year, e.g. for 5AzhtgUAAAAJ:KlAtU1dfN6UC
# https://scholar.google.com/citations?hl=en&view_op=view_citation&citation_for_view=5AzhtgUAAAAJ:KlAtU1dfN6UC
#params = {
#    "engine": "google_scholar_author",
#    "citation_id": "5AzhtgUAAAAJ:KlAtU1dfN6UC",
#    "view_op": "view_citation",
#    "api_key": "ed"
#}

search = GoogleSearch(params)
results = search.get_dict()
print(results)

## Safely get the co_authors
#co_authors = results.get("co_authors", [])
#if co_authors:
#    print("Co-authors:", co_authors)
#else:
#    print("No co-authors found.")
##co_authors = results["co_authors"]
```
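Much of the manual post-processing exists because `print(results)` writes a Python dict rather than BibTeX. The sketch below writes the records directly in the `@article{id, field= "..."}` layout that the main script reads. It assumes `results["articles"]` is a list of dicts carrying the `title`, `authors`, `publication` and `year` keys seen in the post-processed records. The function name `articles_to_records` is hypothetical, and `link`, `citation_id` and `cited_by` are left out for brevity.

```python
def articles_to_records(articles):
    """Format SerpApi author articles as @article records for bibtexparser.

    Assumes each article is a dict with optional 'title', 'authors',
    'publication' and 'year' values (a sketch, not the author's script).
    """
    records = []
    for i, art in enumerate(articles, start=1):
        fields = []
        for key in ("title", "authors", "publication", "year"):
            # Double quotes and braces would break a quoted BibTeX value,
            # so this simplified version replaces/removes them
            value = str(art.get(key, "")).replace('"', "'").replace("{", "").replace("}", "")
            fields.append(f'{key}= "{value}"')
        records.append(f"@article{{{i}, " + ", ".join(fields) + "}")
    return "\n\n".join(records)


if __name__ == "__main__":
    # In practice: articles = results.get("articles", []) from the SerpApi call above
    demo = [{"title": "Example title", "authors": "A Author, MM Brinkmann",
             "publication": "Example Journal 1 (1), 1-10, 2020", "year": "2020"}]
    print(articles_to_records(demo))
```

Writing `articles_to_records(results.get("articles", []))` to `articles_Brinkmann.txt` would make most of the search-and-replace post-processing unnecessary. Expanding abbreviated author names such as `MM Brinkmann` into full names still requires the manual Google Scholar lookup.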

Code of get_coauthors_jiabin.py:

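```python
from serpapi import GoogleSearch

params = {
    "engine": "google_scholar_author",
    "author_id": "P1pS4s0AAAAJ",
    "view_op": "list_colleagues",  # list the profile's co-authors
    "api_key": "ed"
}

search = GoogleSearch(params)
results = search.get_dict()
print(results)
# Use .get() so that a profile without listed co-authors does not raise KeyError
co_authors = results.get("co_authors", [])
```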
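The co-author list can be turned into a small table. The sketch below assumes each entry in `results["co_authors"]` is a dict with the `name`, `author_id` and `affiliations` keys mentioned in the comment of get_articles_with_serpapi.py. The helper names `coauthor_rows` and `rows_to_csv` are hypothetical.

```python
import csv
import io


def coauthor_rows(results):
    """Return (name, author_id, affiliations) tuples from a SerpApi result dict.

    Missing keys fall back to empty strings instead of raising KeyError.
    """
    return [
        (c.get("name", ""), c.get("author_id", ""), c.get("affiliations", ""))
        for c in results.get("co_authors", [])
    ]


def rows_to_csv(rows):
    """Serialize the rows as CSV text with a header line."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["name", "author_id", "affiliations"])
    writer.writerows(rows)
    return buf.getvalue()


if __name__ == "__main__":
    # Placeholder data; in practice pass the `results` dict from the call above
    demo = {"co_authors": [{"name": "Jane Doe", "author_id": "XXXXXXXXXXXX",
                            "affiliations": "Example University"}]}
    print(rows_to_csv(coauthor_rows(demo)))
```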

Code of get_citations_raw.py (queries the Scrapingdog Google Scholar API):

```python
import requests

api_key = "60"
url = "https://api.scrapingdog.com/google_scholar"

params = {
    "api_key": api_key,
    "query": "Melanie M. Brinkmann",
    "language": "en",
    "page": 10,
    "results": 100
}

response = requests.get(url, params=params)
if response.status_code == 200:
    data = response.json()
    print(data)
else:
    print(f"Request failed with status code: {response.status_code}")
```

Post-processing of the file generated by get_articles_with_serpapi.py:

```text
# 1. Delete the header and the trailer of the printed dict, namely remove
#    "...'articles': [" at the start and the following text at the end:
#    "], cited_by= {"table"= [{citations= {"all"= 5351, "since_2019"= 2358}}, {"h_index"= {"all"= 33, "since_2019"= 25}}, {"i10_index"= {"all"= 47, "since_2019"= 42}}], graph= [{year= 2006, citations= 22}, {year= 2007, citations= 73}, {year= 2008, citations= 93}, {year= 2009, citations= 216}, {year= 2010, citations= 204}, {year= 2011, citations= 293}, {year= 2012, citations= 269}, {year= 2013, citations= 331}, {year= 2014, citations= 282}, {year= 2015, citations= 308}, {year= 2016, citations= 281}, {year= 2017, citations= 301}, {year= 2018, citations= 266}, {year= 2019, citations= 288}, {year= 2020, citations= 369}, {year= 2021, citations= 609}, {year= 2022, citations= 430}, {year= 2023, citations= 366}, {year= 2024, citations= 288}]}, 'public_access': {'link': 'https://scholar.google.com/citations?view_op=list_mandates&hl=en&user=5AzhtgUAAAAJ', 'available': 50, 'not_available': 4}}"
# 2. "}, {'title':"  -->  "}\n\n@article{1, title="
#    then replace each 1 by the actual record id (1, ..., 82)
# 3. "MM Brinkmann"  -->  "M Brinkmann"
# 4. :  -->  =     and     '  -->  "
#    (this also turns https:// into https=//, as seen in the result)
# 5. Unquote the field names:
#    "link" --> link, "citation_id" --> citation_id, "authors" --> authors,
#    "publication" --> publication, "cited_by" --> cited_by,
#    "serpapi_link" --> serpapi_link, "graph" --> graph, "cites_id" --> cites_id,
#    "year" --> year, "citations" --> citations, "value" --> value
# 6. Manually replace the abbreviated author names with the complete names,
#    found by clicking the Google Scholar links.
# 7. Remove the records that still have abbreviated names in the links.
```
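`print(results)` writes the repr of a dict built only from strings, numbers, lists and dicts, so the file is a valid Python literal. As an alternative to deleting the header and trailer and swapping quotes by hand, the file can therefore be parsed back with `ast.literal_eval`. The sketch below assumes `articles_Brinkmann.txt` holds exactly one printed dict. The function name `load_printed_articles` and its `renames` parameter are hypothetical.

```python
import ast


def load_printed_articles(text, renames=None):
    """Recover the 'articles' list from the output of print(results).

    `renames` optionally maps an author string to its replacement,
    e.g. {"MM Brinkmann": "M Brinkmann"}.
    """
    results = ast.literal_eval(text.strip())  # parses Python literals only, never executes code
    renames = renames or {}
    articles = results.get("articles", [])
    for art in articles:
        # Normalize the comma-separated author string and apply the renames
        names = [n.strip() for n in art.get("authors", "").split(",")]
        art["authors"] = ", ".join(renames.get(n, n) for n in names if n)
    return articles


if __name__ == "__main__":
    # In practice: text = open("articles_Brinkmann.txt").read()
    sample = ("{'articles': [{'title': 'T', 'authors': 'MM Brinkmann, HL Ploegh', "
              "'year': '2008'}], 'cited_by': {'table': []}}")
    print(load_printed_articles(sample, {"MM Brinkmann": "M Brinkmann"}))
```

Expanding abbreviated names into full names (the manual Google Scholar lookup) is not automated here.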
The end result of the manual post-processing looks as follows (first five records):

```bibtex
@article{1,
  title= "UNC93B1 delivers nucleotide-sensing toll-like receptors to endolysosomes",
  link= "https=//scholar.google.com/citations?view_op=view_citation&hl=en&user=5AzhtgUAAAAJ&pagesize=100&citation_for_view=5AzhtgUAAAAJ=W7OEmFMy1HYC",
  citation_id= "5AzhtgUAAAAJ=W7OEmFMy1HYC",
  authors= "You-Me Kim, Melanie M Brinkmann, Marie-Eve Paquet, Hidde L Ploegh",
  publication= "Nature 452 (7184), 234-238, 2008",
  cited_by= {value= 847, link= "https=//scholar.google.com/scholar?oi=bibs&hl=en&cites=3461748963046634721", serpapi_link= "https=//serpapi.com/search.json?cites=3461748963046634721&engine=google_scholar&hl=en", cites_id= "3461748963046634721"},
  year= "2008"}

@article{2,
  title= "Proteolytic cleavage in an endolysosomal compartment is required for activation of Toll-like receptor 9",
  link= "https=//scholar.google.com/citations?view_op=view_citation&hl=en&user=5AzhtgUAAAAJ&pagesize=100&citation_for_view=5AzhtgUAAAAJ=4TOpqqG69KYC",
  citation_id= "5AzhtgUAAAAJ=4TOpqqG69KYC",
  authors= "Boyoun Park, Melanie M Brinkmann, Eric Spooner, Clarissa C Lee, You-Me Kim, Hidde L Ploegh",
  publication= "Nature immunology 9 (12), 1407-1414, 2008",
  cited_by= {value= 587, link= "https=//scholar.google.com/scholar?oi=bibs&hl=en&cites=8523162291112327960", serpapi_link= "https=//serpapi.com/search.json?cites=8523162291112327960&engine=google_scholar&hl=en", cites_id= "8523162291112327960"},
  year= "2008"}

@article{3,
  title= "The interaction between the ER membrane protein UNC93B and TLR3, 7, and 9 is crucial for TLR signaling",
  link= "https=//scholar.google.com/citations?view_op=view_citation&hl=en&user=5AzhtgUAAAAJ&pagesize=100&citation_for_view=5AzhtgUAAAAJ=-f6ydRqryjwC",
  citation_id= "5AzhtgUAAAAJ=-f6ydRqryjwC",
  authors= "Melanie M Brinkmann, Eric Spooner, Kasper Hoebe, Bruce Beutler, Hidde L Ploegh, You-Me Kim",
  publication= "The Journal of cell biology 177 (2), 265-275, 2007",
  cited_by= {value= 562, link= "https=//scholar.google.com/scholar?oi=bibs&hl=en&cites=13542374013520997852", serpapi_link= "https=//serpapi.com/search.json?cites=13542374013520997852&engine=google_scholar&hl=en", cites_id= "13542374013520997852"},
  year= "2007"}

@article{4,
  title= "Noncanonical autophagy is required for type I interferon secretion in response to DNA-immune complexes",
  link= "https=//scholar.google.com/citations?view_op=view_citation&hl=en&user=5AzhtgUAAAAJ&pagesize=100&citation_for_view=5AzhtgUAAAAJ=KlAtU1dfN6UC",
  citation_id= "5AzhtgUAAAAJ=KlAtU1dfN6UC",
  authors= "Jill Henault, Jennifer Martinez, Jeffrey M Riggs, Jane Tian, Payal Mehta, Lorraine Clarke, Miwa Sasai, Eicke Latz, Melanie M Brinkmann, Akiko Iwasaki, Anthony J Coyle, Roland Kolbeck, Douglas R Green, Miguel A Sanjuan",
  publication= "Immunity 37 (6), 986-997, 2012",
  cited_by= {value= 376, link= "https=//scholar.google.com/scholar?oi=bibs&hl=en&cites=6648645242373278731", serpapi_link= "https=//serpapi.com/search.json?cites=6648645242373278731&engine=google_scholar&hl=en", cites_id= "6648645242373278731"},
  year= "2012"}

@article{5,
  title= "Granulin is a soluble cofactor for toll-like receptor 9 signaling",
  link= "https=//scholar.google.com/citations?view_op=view_citation&hl=en&user=5AzhtgUAAAAJ&pagesize=100&citation_for_view=5AzhtgUAAAAJ=M3ejUd6NZC8C",
  citation_id= "5AzhtgUAAAAJ=M3ejUd6NZC8C",
  authors= "Boyoun Park, Ludovico Buti, Sungwook Lee, Takashi Matsuwaki, Eric Spooner, Melanie M Brinkmann, Masugi Nishihara, Hidde L Ploegh",
  publication= "Immunity 34 (4), 505-513, 2011",
  cited_by= {value= 223, link= "https=//scholar.google.com/scholar?oi=bibs&hl=en&cites=8731573748380815185", serpapi_link= "https=//serpapi.com/search.json?cites=8731573748380815185&engine=google_scholar&hl=en", cites_id= "8731573748380815185"},
  year= "2011"}
```

Check how many unique author names appear in the figure. The commands below are alternatives, and each one overwrites author_name_uniq.txt:

```bash
# Trim leading/trailing whitespace, then keep unique lines
sed 's/^[ \t]*//;s/[ \t]*$//' author_names.txt | sort -u > author_name_uniq.txt
# Case-insensitive unique sort
sort -uf author_names.txt > author_name_uniq.txt
# Keep only tab, LF, CR and printable ASCII, then keep unique lines
cat author_names.txt | tr -cd '\11\12\15\40-\176' | sort -u > author_name_uniq.txt
# Combined: trim whitespace, keep printable ASCII, case-insensitive unique sort
sed 's/^[ \t]*//;s/[ \t]*$//' author_names.txt | tr -cd '\11\12\15\40-\176' | sort -uf > author_name_uniq.txt
# Replace the Unicode hyphen with an ASCII '-', then keep unique lines
sed 's/‐/-/g' author_names.txt | sort -u > author_name_uniq.txt
# Lowercase everything, then keep unique lines
cat author_name_uniq.txt | tr '[:upper:]' '[:lower:]' | sort -u > author_name_uniq2.txt
```
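The same clean-up can be done in one pass in Python. The sketch below trims and collapses whitespace and maps Unicode hyphen characters such as `‐` to ASCII `-`. It then de-duplicates case-insensitively with `str.casefold`. One deliberate difference from the shell commands: it applies Unicode NFKC normalization instead of deleting non-ASCII characters with `tr -cd`, so names like "Müller" survive intact. The helper names and the `DASHES` table are assumptions.

```python
import unicodedata

# Dash-like characters mapped to ASCII '-' (assumed list; extend as needed)
DASHES = {"\u2010": "-", "\u2011": "-", "\u2012": "-", "\u2013": "-"}


def normalize_name(name):
    """Apply NFKC, unify dashes and collapse whitespace (including NBSP)."""
    name = unicodedata.normalize("NFKC", name)
    for dash, ascii_dash in DASHES.items():
        name = name.replace(dash, ascii_dash)
    return " ".join(name.split())


def unique_names(names):
    """De-duplicate names case-insensitively, keeping the first spelling seen."""
    seen = {}
    for raw in names:
        name = normalize_name(raw)
        if name:
            seen.setdefault(name.casefold(), name)
    return sorted(seen.values(), key=str.casefold)


if __name__ == "__main__":
    raw = ["  Marie\u2010Eve Paquet", "Marie-Eve Paquet ", "marie-eve paquet", "Hidde L Ploegh"]
    for n in unique_names(raw):
        print(n)
```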


Most popular articles

- Motif Discovery in Biological Sequences: A Comparison of MEME and HOMER

- Why Do Significant Gene Lists Change After Adding Additional Conditions in Differential Gene Expression Analysis?

- Calling peaks using findPeaks of HOMER

- PiCRUST2 Pipeline for Functional Prediction and Pathway Analysis in Metagenomics

- Updating Human Gene Identifiers using Ensembl BioMart: A Step-by-Step Guide

- pheatmap vs heatmap.2

- Should the inputs for GSVA be normalized or raw?

- Setup conda environments

- Kraken2 Installation and Usage Guide

- File format for single channel analysis of Agilent microarray data with Limma?

Latest articles

- Setup the environment for lumicks-pylake and C_Trap-Multimer-photontrack.ipynb

- 🧬 Cadmium Resistance Gene Analysis in Staphylococcus epidermidis HD46

- Functions of the LT and sT proteins of the MCV virus

- Analysis of the RNA binding protein (RBP) motifs for RNA-Seq and miRNAs (v3, simplified)

Most commented articles

- Updating Human Gene Identifiers using Ensembl BioMart: A Step-by-Step Guide

- The top 10 genes

- Retrieving KEGG Genes Using Bioservices in Python

Recommended similar articles

Enhanced Visualization of Gene Presence for the Selected Genes in Bongarts_S.epidermidis_HDRNA

RNA-seq Tam on CP059040.1 (Acinetobacter baumannii strain ATCC 19606)

Variant Calling for Herpes Simplex Virus 1 from Patient Sample Using Capture Probe Sequencing